Logging and auditing for Cloud Paks

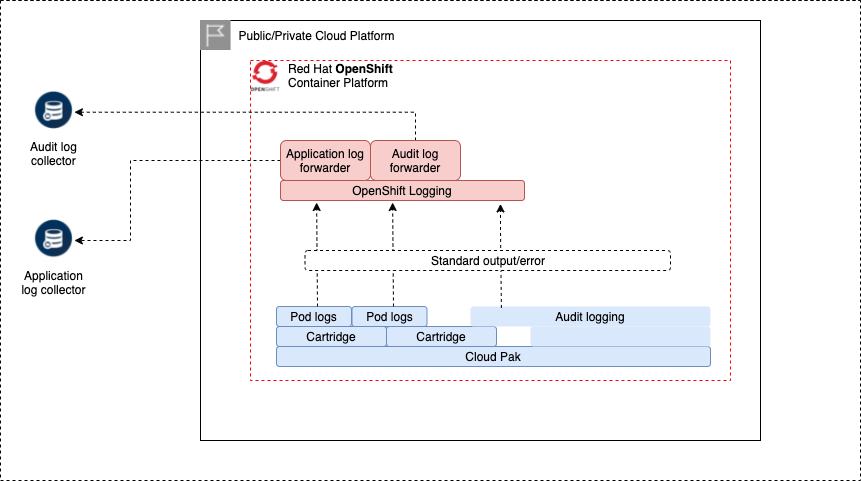

Logging and auditing of Cloud Pak for Data uses the OpenShift logging framework, which offers a lot of flexibility in capturing logs from applications and either storing them in an ElasticSearch datastore in the cluster (currently not supported by the deployer) or forwarding the log entries to external log collectors such as ElasticSearch, Fluentd, Loki and others.

OpenShift logging captures three types of log entries from the workloads running on the cluster:

- infrastructure - logs generated by OpenShift processes

- audit - audit logs generated by applications as well as OpenShift

- application - logs generated by all other applications on the cluster

Logging configuration - openshift_logging

Defines how OpenShift forwards the logs to external log collectors. Currently, the following log collector types are supported:

- loki

When OpenShift logging is activated via the openshift_logging object, all three logging types are activated automatically. You can specify logging_output items to forward log records to the log collector of your choice. In the example below, application and infrastructure logs are forwarded to the Loki server https://loki-application.sample.com and audit logs to https://loki-audit.sample.com. Both outputs use the same certificate to connect:

    openshift_logging:
    - openshift_cluster_name: pluto-01
      configure_es_log_store: False
      cluster_wide_logging:
      - input: application
        logging_name: loki-application
      - input: infrastructure
        logging_name: loki-application
      - input: audit
        logging_name: loki-audit
      logging_output:
      - name: loki-application
        type: loki
        url: https://loki-application.sample.com
        certificates:
          cert: pluto-01-loki-cert
          key: pluto-01-loki-key
          ca: pluto-01-loki-ca
      - name: loki-audit
        type: loki
        url: https://loki-audit.sample.com
        certificates:
          cert: pluto-01-loki-cert
          key: pluto-01-loki-key
          ca: pluto-01-loki-ca

Cloud Pak for Data and Foundational Services application logs are automatically picked up and forwarded to the loki-application logging destination and no additional configuration is needed.

Property explanation

| Property | Description | Mandatory | Allowed values |
|---|---|---|---|
| openshift_cluster_name | Name of the OpenShift cluster to configure the logging for | Yes | |
| configure_es_log_store | Whether the internal ElasticSearch log store and Kibana must be provisioned | No | True, False (default) |
| cluster_wide_logging | Defines which classes of log records will be sent to the log collectors | No | |
| cluster_wide_logging.input | Specifies the OpenShift log record class to forward | Yes | application, infrastructure, audit |
| cluster_wide_logging.logging_name | Specifies the logging_output to send the records to. If not specified, records will be sent to the internal log only | No | |
| cluster_wide_logging.labels | Specify your own labels to be added to the log records. Every logging input/output combination can have its own labels | No | |
| logging_output | Defines the log collectors. If configure_es_log_store is True, output will always be sent to the internal ES log store | No | |
| logging_output.name | Log collector name, referenced by cluster_wide_logging or cp4d_audit_config | Yes | |
| logging_output.type | Type of the log collector, currently only loki is possible | Yes | loki |
| logging_output.url | URL of the log collector; this URL must be reachable from within the cluster | Yes | |
| logging_output.certificates | Defines the vault secrets that hold the certificate elements | Yes, if url is https | |
| logging_output.certificates.cert | Public certificate to connect to the URL | Yes | |
| logging_output.certificates.key | Private key to connect to the URL | Yes | |
| logging_output.certificates.ca | Certificate Authority bundle to connect to the URL | Yes | |
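The optional cluster_wide_logging properties in the table can be illustrated with a minimal sketch. It assumes that labels are given as a key-value map, in the same way as the labels of a cp4d_audit_config entry; the label names `environment` and `log_class` are hypothetical. The infrastructure input deliberately omits logging_name, so according to the table its records would go to the internal log only.

```yaml
openshift_logging:
- openshift_cluster_name: pluto-01
  cluster_wide_logging:
  - input: application
    logging_name: loki-application
    # Assumed format: key-value map; label names are hypothetical
    labels:
      environment: test
      log_class: application
  # No logging_name: records are sent to the internal log only
  - input: infrastructure
  logging_output:
  - name: loki-application
    type: loki
    url: https://loki-application.sample.com
    certificates:
      cert: pluto-01-loki-cert
      key: pluto-01-loki-key
      ca: pluto-01-loki-ca
```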

If you also want to activate audit logging for Cloud Pak for Data, add a cp4d_audit_config object to your configuration. In the example below, the Cloud Pak for Data audit logger is configured to write log records to the standard output (stdout) of the pods, after which a ClusterLogForwarder custom resource forwards them to the loki-audit logging destination. Optionally, you can specify labels, which are added to the ClusterLogForwarder custom resource pipeline entry.

    cp4d_audit_config:
    - project: cpd
      audit_replicas: 2
      audit_output:
      - type: openshift-logging
        logging_name: loki-audit
        labels:
          cluster_name: "{{ env_id }}"

Info

Because audit log entries are written to the standard output, they will also be picked up by the generic application log forwarder and will therefore also appear in the application logging destination.

Cloud Pak for Data audit configuration

IBM Cloud Pak for Data has a centralized auditing component for auditable events of the base platform and services. Audit events include logging in to and out of the platform, creating and deleting connections, and many more. Services that support auditing are documented here: https://www.ibm.com/docs/en/cloud-paks/cp-data/4.0?topic=data-services-that-support-audit-logging

The Cloud Pak Deployer simplifies the recording of audit log entries by means of the OpenShift logging framework, which can in turn be configured to forward entries to various log collectors such as Fluentd, Loki and ElasticSearch.

Audit configuration - cp4d_audit_config

A cp4d_audit_config entry defines the audit configuration for a Cloud Pak for Data instance (OpenShift project). The main configuration items are the number of replicas and the output. Currently only one output type is supported: openshift-logging, which allows the OpenShift logging framework to pick up audit entries and forward them to the designated collectors.

When a cp4d_audit_config entry exists for a certain cp4d project, the zen-audit-config ConfigMap is updated and then the audit logging deployment is restarted. If no configuration changes have been made, no restart is done.

Additionally, for the audit_output entries, the OpenShift logging ClusterLogForwarder instance is updated to forward audit entries to the designated logging output. In the example below, auditing is configured with 2 replicas, and an input and a pipeline are added to the ClusterLogForwarder instance so that audit entries are sent to the matching output defined in openshift_logging.logging_output.

    cp4d_audit_config:
    - project: cpd
      audit_replicas: 2
      audit_output:
      - type: openshift-logging
        logging_name: loki-audit
        labels:
          cluster_name: "{{ env_id }}"
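To make the effect on the ClusterLogForwarder more concrete, here is a rough sketch of what the added input and pipeline could look like, using the `logging.openshift.io/v1` API. This is not the deployer's actual output. The input and pipeline name `cpd-audit`, the pod label used in the selector, the secret name and the resolved label value are all assumptions for illustration.

```yaml
apiVersion: logging.openshift.io/v1
kind: ClusterLogForwarder
metadata:
  name: instance
  namespace: openshift-logging
spec:
  inputs:
  # Hypothetical input name; selects application logs from the cpd project
  - name: cpd-audit
    application:
      namespaces:
      - cpd
      selector:
        matchLabels:
          component: zen-audit   # hypothetical label for the audit logger pods
  outputs:
  # Corresponds to the loki-audit entry in openshift_logging.logging_output
  - name: loki-audit
    type: loki
    url: https://loki-audit.sample.com
    secret:
      name: loki-audit           # hypothetical secret holding cert, key and ca
  pipelines:
  - name: cpd-audit
    inputRefs:
    - cpd-audit
    outputRefs:
    - loki-audit
    labels:
      cluster_name: pluto-01     # assumes env_id resolves to pluto-01
```

To inspect what the deployer really generated, you can view the resource on the cluster, for example with `oc get clusterlogforwarder instance -n openshift-logging -o yaml`.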

Property explanation

| Property | Description | Mandatory | Allowed values |
|---|---|---|---|
| project | Name of the OpenShift project of the matching cp4d entry. The cp4d project must exist. | Yes | |
| audit_replicas | Number of replicas for the Cloud Pak for Data audit logger. | No (default 1) | |
| audit_output | Defines where the audit logs should be written to | Yes | |
| audit_output.type | Type of auditing output, defines where audit logging entries will be written | Yes | openshift-logging |
| audit_output.logging_name | Name of the logging_output entry in the openshift_logging object. This logging_output entry must exist. | Yes | |
| audit_output.labels | Optional list of labels to set on the ClusterLogForwarder custom resource pipeline | No | |
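Because audit_output.logging_name must refer to an existing logging_output entry, it can help to see both objects side by side. The following minimal sketch reuses the names from the examples in this document and leaves audit_replicas at its default. Combining the two objects in this way is an assumption about how a complete configuration would typically be laid out.

```yaml
openshift_logging:
- openshift_cluster_name: pluto-01
  logging_output:
  - name: loki-audit             # referenced by audit_output.logging_name below
    type: loki
    url: https://loki-audit.sample.com
    certificates:
      cert: pluto-01-loki-cert
      key: pluto-01-loki-key
      ca: pluto-01-loki-ca

cp4d_audit_config:
- project: cpd                   # must match an existing cp4d project
  audit_output:
  - type: openshift-logging
    logging_name: loki-audit     # must match a logging_output name above
```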