Apache Kafka can handle any data, but it does not validate the information in the messages. However, efficient handling of data often requires that it includes specific information in a certain format. Using schemas, you can define the structure of the data in a message, ensuring that both producers and consumers use the correct structure.

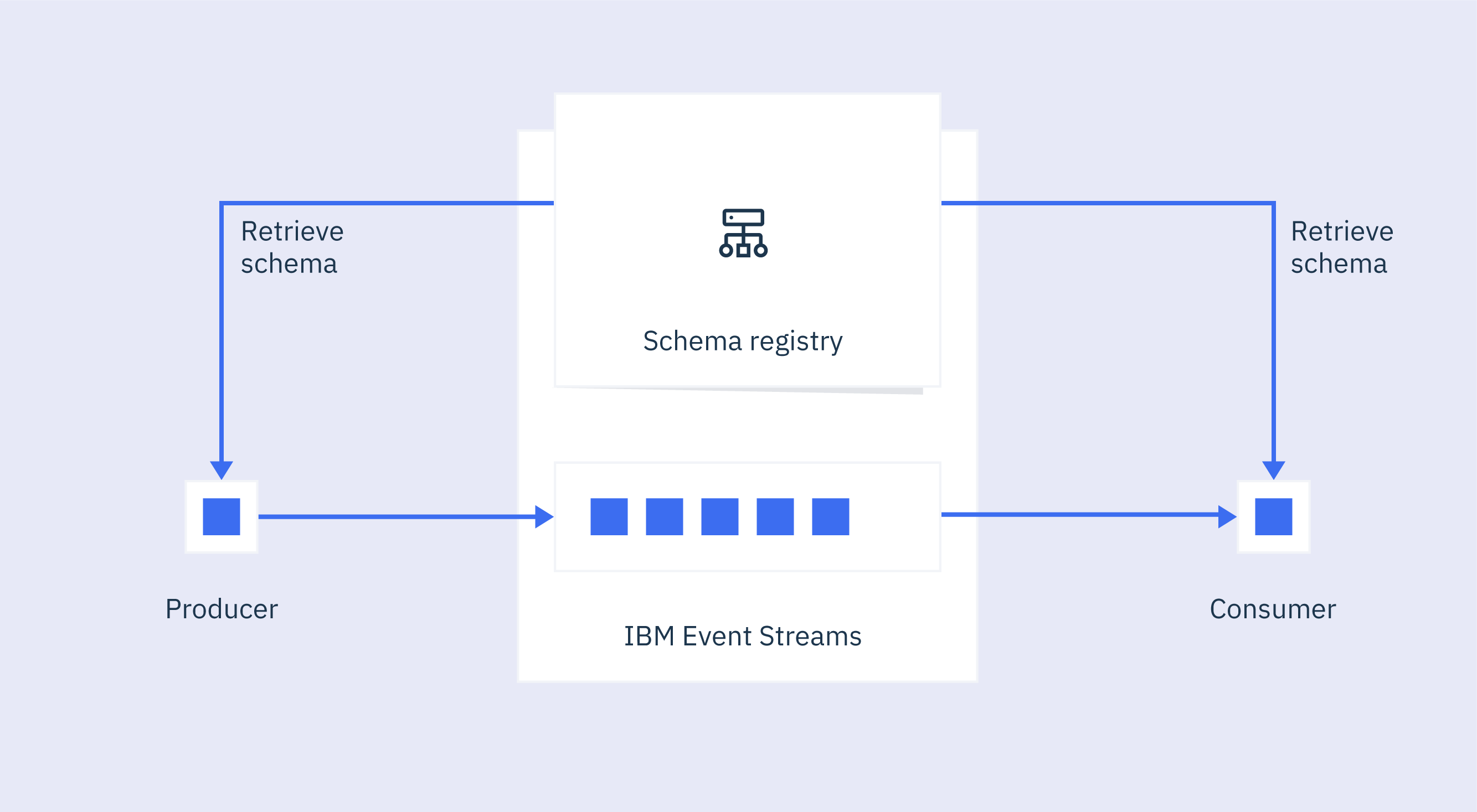

Schemas help producers create data that conforms to a predefined structure, defining the fields that need to be present together with the type of each field. This definition then helps consumers parse that data and interpret it correctly. Event Streams supports schemas and includes a schema registry for using and managing schemas.

It is common for all of the messages on a topic to use the same schema. The key and value of a message can each be described by a schema.

Schema registry

Schemas are stored in internal Kafka topics by the Apicurio Registry, an open-source schema registry. In addition to storing a versioned history of schemas, Apicurio Registry provides an interface for retrieving them. Each Event Streams cluster has its own instance of Apicurio Registry providing schema registry functionality.

Your producers and consumers validate data against the specified schema stored in the schema registry, in addition to sending and receiving messages through the Kafka brokers. Because the schemas are held in the registry, they do not need to be transferred in the messages, which makes the messages smaller than they would be without a schema registry.

If you are migrating to use Event Streams as your Kafka solution, and have been using a schema registry from a different provider, you can migrate to using Event Streams and the Apicurio Registry.

The Event Streams schema registry provided in earlier versions is deprecated in version 10.1.0 and later. If you are upgrading to Event Streams version 10.1.0 from an earlier version, you can migrate to the Apicurio Registry from the deprecated schema registry.

Apache Avro data format

Schemas are defined using Apache Avro, an open-source data serialization technology commonly used with Apache Kafka. It provides an efficient data encoding format, using either a compact binary format or a more verbose but human-readable JSON format.

The schema registry in Event Streams uses Apache Avro data formats. When messages are sent in the Avro format, they contain the data and the unique identifier for the schema used. The identifier specifies which schema in the registry is to be used for the message.

Avro supports a wide range of data types, including primitive types (null, boolean, int, long, float, double, bytes, and string) and complex types (record, enum, array, map, union, and fixed).
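As an illustration of how these types combine, the following minimal sketch builds an Avro schema as a Python dictionary and prints it as JSON, which is the form in which Avro schema files are written. The `Order` record, its namespace, and all of its field names are hypothetical and are not taken from Event Streams.

```python
import json

# Hypothetical "Order" schema; every name below is illustrative only.
ORDER_SCHEMA = {
    "type": "record",
    "name": "Order",
    "namespace": "com.example.orders",
    "fields": [
        # Primitive types
        {"name": "id", "type": "long"},
        {"name": "customer", "type": "string"},
        # Complex types: enum, array, and map
        {"name": "status", "type": {"type": "enum", "name": "Status",
                                     "symbols": ["NEW", "SHIPPED", "CANCELLED"]}},
        {"name": "items", "type": {"type": "array", "items": "string"}},
        {"name": "attributes", "type": {"type": "map", "values": "double"}},
        # A union of null and string makes the field optional.
        {"name": "note", "type": ["null", "string"], "default": None},
    ],
}

# Avro schema definitions are plain JSON documents.
schema_json = json.dumps(ORDER_SCHEMA, indent=2)
print(schema_json)
```

The union with `"null"` listed first and a `null` default is the usual Avro way of declaring an optional field.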

Learn more about how you can create schemas in Event Streams.

Serialization and deserialization

A producing application uses a serializer to produce messages conforming to a specific schema. As mentioned earlier, the message contains the data in Avro format, together with the schema identifier.

A consuming application then uses a deserializer to consume messages that have been serialized using the same schema. When a consumer reads a message sent in Avro format, the deserializer finds the identifier of the schema in the message, and retrieves the schema from the schema registry to deserialize the data.

This process provides an efficient way of ensuring that the data in messages conforms to the required structure.
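The flow described above can be sketched roughly as follows. This is not the wire format used by the Event Streams serializers: the 8-byte identifier prefix, the in-memory `REGISTRY` dictionary, the identifier `7`, and the `Greeting` schema are all assumptions made for illustration, and JSON stands in for the Avro binary encoding so that the example needs only the Python standard library.

```python
import json
import struct

# Stand-in for the schema registry: maps a schema identifier to a schema.
# The identifier value and the schema are hypothetical.
REGISTRY = {
    7: {"type": "record", "name": "Greeting",
        "fields": [{"name": "message", "type": "string"}]},
}

# Assumed framing: an 8-byte big-endian integer holding the schema identifier.
ID_PREFIX = struct.Struct(">q")


def serialize(schema_id: int, record: dict) -> bytes:
    """Prefix the encoded record with the identifier of its schema."""
    schema = REGISTRY[schema_id]
    expected = {field["name"] for field in schema["fields"]}
    if set(record) != expected:
        raise ValueError(f"record fields {sorted(record)} do not match schema")
    # JSON is used here in place of Avro binary encoding.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return ID_PREFIX.pack(schema_id) + payload


def deserialize(message: bytes) -> tuple:
    """Read the identifier, look up the schema, then decode the payload."""
    (schema_id,) = ID_PREFIX.unpack_from(message)
    schema = REGISTRY[schema_id]  # a real deserializer would query the registry
    record = json.loads(message[ID_PREFIX.size:].decode("utf-8"))
    return schema, record


message = serialize(7, {"message": "hello"})
schema, record = deserialize(message)
```

The point of the sketch is that only the identifier travels with the data; the consumer recovers the full schema from the registry rather than from the message.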

Event Streams provides serializers and deserializers that automatically retrieve schemas from the schema registry as required. If you need to use schemas in an environment for which serializers or deserializers are not provided, you can use the command line or UI directly to retrieve the schemas.

Versions and compatibility

Whenever you add a schema or a new version of an existing schema, Apicurio Registry validates the format automatically and warns of any issues. You can evolve your schemas over time to accommodate changing requirements: you create a new version of an existing schema, and the registry ensures that the new version is compatible with the existing version, meaning that producers and consumers using the existing version are not broken by the new version.

When you create a new version of the schema, you add it to the registry and version it. You can then set your producers and consumers that use the schema to start using the new version. Until they do, both producers and consumers are warned that a new version of the schema is available.
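To give a feel for what a compatibility check involves, the following sketch applies one rule from Avro schema resolution: a reader can only fill in a field that is missing from the written data if the reader's schema declares a default for it. This is a deliberately simplified illustration, not the check that Apicurio Registry performs; it looks at top-level record fields only, requires identical types, and ignores Avro type promotion and aliases. The function name `can_read` and the schemas `V1`, `V2_OK`, and `V2_BAD` are hypothetical.

```python
def can_read(reader_schema: dict, writer_schema: dict) -> list:
    """Return the reasons why reader_schema cannot read writer_schema data.

    An empty list means no problem was found by this simplified check.
    """
    written = {f["name"]: f["type"] for f in writer_schema["fields"]}
    problems = []
    for field in reader_schema["fields"]:
        name = field["name"]
        if name not in written:
            # Avro fills a missing field from the reader's default, if any.
            if "default" not in field:
                problems.append(f"field '{name}' is new and has no default")
        elif written[name] != field["type"]:
            problems.append(f"field '{name}' changed type")
    return problems


V1 = {"type": "record", "name": "Order",
      "fields": [{"name": "id", "type": "long"}]}

# Adds an optional field with a default.
V2_OK = {"type": "record", "name": "Order",
         "fields": [{"name": "id", "type": "long"},
                    {"name": "note", "type": ["null", "string"], "default": None}]}

# Adds a required field without a default.
V2_BAD = {"type": "record", "name": "Order",
          "fields": [{"name": "id", "type": "long"},
                     {"name": "note", "type": "string"}]}

print(can_read(V2_OK, V1))   # new consumers reading data from old producers
print(can_read(V2_BAD, V1))  # reports the field that has no default
print(can_read(V1, V2_OK))   # old consumers reading data from new producers
```

Under this rule, adding a field with a default keeps old and new applications working together, whereas adding a field without a default prevents new consumers from reading data written with the earlier version.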

Lifecycle

When a new version is in use, you can deprecate the previous version. Deprecating means that producing and consuming applications still using the deprecated version are warned that a new version is available to upgrade to. After you upgrade your producers to use the new version, you can disable the older version so that it can no longer be used, or you can remove it entirely from the schema registry.

You can use the Event Streams UI or CLI to manage the lifecycle of schemas, including registering, versioning, and deprecating.
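The lifecycle states described above can be modeled loosely as follows. This is only a sketch of the behavior as described in this section, not of any Event Streams API: the `VersionState` names (including `ACTIVE` for a version that is neither deprecated nor disabled) and the `use_version` function are hypothetical.

```python
import warnings
from enum import Enum


class VersionState(Enum):
    """Hypothetical states for a schema version."""
    ACTIVE = "active"
    DEPRECATED = "deprecated"
    DISABLED = "disabled"


def use_version(version: int, state: VersionState) -> bool:
    """Return True if the version may be used, warning when it is deprecated."""
    if state is VersionState.DISABLED:
        # A disabled version can no longer be used.
        return False
    if state is VersionState.DEPRECATED:
        # A deprecated version still works, but applications are warned.
        warnings.warn(
            f"schema version {version} is deprecated; a newer version is available"
        )
    return True
```

In this model, an application using a deprecated version keeps working and receives a warning, while an application using a disabled version is refused.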

How to get started with schemas

- Create schemas

- Add schemas to schema registry

- Set your Java or non-Java applications to use schemas

- Manage schema lifecycle