You can configure Event Streams to allow JMX scrapers to export Kafka broker JMX metrics to external applications. This tutorial details how to export Kafka JMX metrics as Graphite output, and then use Logstash to write the metrics to an external Splunk system through an HTTP Event Collector.

Prerequisites

- Ensure you have an Event Streams installation available. This tutorial is based on Event Streams version 11.0.0.

- When installing Event Streams, ensure you configure your jmxtrans deployment as described in Configuring secure JMX connections.

- Ensure you have a Splunk Enterprise server installed or a Splunk Universal Forwarder that has network access to your cluster.

- Ensure that you have an index to receive the data and an HTTP Event Collector configured on Splunk. For details, see the Splunk documentation.

- Ensure you have configured access to the Docker registry from the machine you will be using to deploy Logstash.

jmxtrans

jmxtrans is a connector that reads JMX metrics and outputs them in a number of formats supporting a wide variety of logging, monitoring, and graphing applications. To deploy jmxtrans to your Red Hat OpenShift Container Platform cluster, you must configure it in your Event Streams CR.

Note: jmxtrans is not supported in Event Streams versions 11.2.0 and later. To deploy jmxtrans in Event Streams versions 11.2.0 and later, follow the instructions in the GitHub README.

Solution overview

The tasks in this tutorial help achieve the following:

- Set up Splunk with an HTTP Event Collector.

- Package Logstash into a Docker image that loads the configuration values and connection information.

- Push the Docker image to the Red Hat OpenShift Container Platform cluster Docker registry, into the namespace where Logstash will be deployed.

- Use the `Kafka.spec.JMXTrans` parameter to configure a jmxtrans deployment.

Configure Splunk

Tip: You can configure Splunk with the Splunk Operator for Kubernetes. This tutorial is based on the Splunk operator version 2.2.0.

With the HTTP Event Collector (HEC), you can send data and application events to a Splunk deployment over the HTTP and Secure HTTP (HTTPS) protocols. HEC uses a token-based authentication model. For more information about setting up the HTTP Event Collector, see the Splunk documentation.

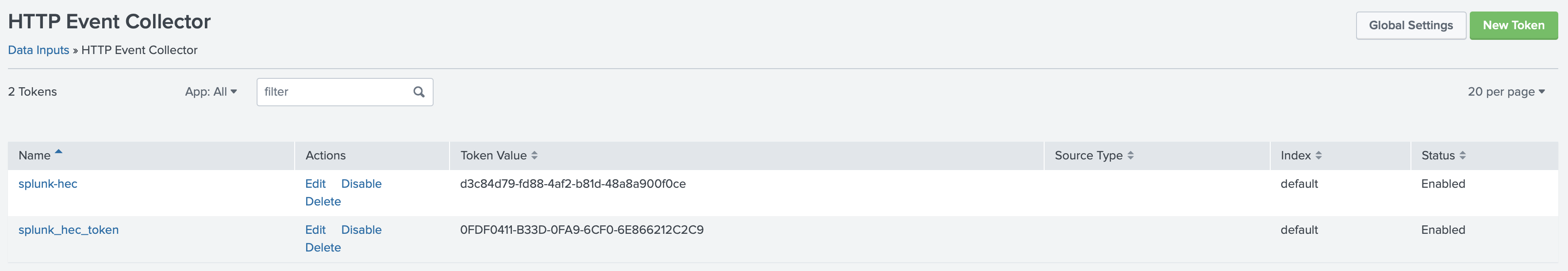

In this tutorial, you configure the HTTP Event Collector by using Splunk Web as follows:

- In Splunk Web, click Settings > Add Data.

- Click Monitor.

- Click HTTP Event Collector.

- In the Name field, enter a name for the token. This demo uses the name `splunk-hec`.

- Click Next.

- Click Review.

- Confirm that all settings for the endpoint are what you want.

- If all settings are what you want, click Submit. Otherwise, click < to make changes.

- Copy the token value that Splunk Web displays. You can then use the token to send data to HEC.

Your name for the token is included in the list of data input names for the HTTP Event Collector.
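Before wiring up Logstash, you might want to confirm that the new token works by posting a test event to HEC yourself. The following Python sketch uses only the standard library and targets the same `/services/collector/event` endpoint and `Splunk <token>` authorization header that Logstash uses later in this tutorial. The helper names `build_hec_request` and `send_hec_event`, the example host name, and the use of port 8088 (Splunk's default HEC port) are assumptions for illustration only.

```python
import json
import ssl
import urllib.request


def build_hec_request(host: str, port: int, token: str, event: dict) -> urllib.request.Request:
    """Build a POST request for the HEC /services/collector/event endpoint (hypothetical helper)."""
    url = f"https://{host}:{port}/services/collector/event"
    # HEC expects a JSON object whose "event" field carries the payload.
    body = json.dumps({"event": event}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # HEC token authentication uses the "Splunk <token>" scheme.
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
    )


def send_hec_event(request: urllib.request.Request, verify_tls: bool = True, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code (hypothetical helper)."""
    context = ssl.create_default_context()
    if not verify_tls:
        # Similar in intent to ssl_verification_mode => "none" in logstash.conf;
        # only suitable for testing against self-signed certificates.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=timeout) as response:
        return response.status
```

For example, `send_hec_event(build_hec_request("splunk.example.com", 8088, "<splunk-http-event-collector-token>", {"message": "HEC test"}), verify_tls=False)` returns `200` if HEC accepts the event; for error responses, such as a rejected token, `urllib` raises `HTTPError` instead.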

Configure and deploy Logstash

Example Dockerfile.logstash

Create a Dockerfile called Dockerfile.logstash as follows.

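```dockerfile
# Base image: replace <required-logstash-version> with the Logstash version to use
FROM docker.elastic.co/logstash/logstash:<required-logstash-version>

# Install the Graphite input plugin so that Logstash can receive jmxtrans output
RUN /usr/share/logstash/bin/logstash-plugin install logstash-input-graphite

# Remove the default pipeline configuration shipped with the image
RUN rm -f /usr/share/logstash/pipeline/logstash.conf

# Copy in the custom pipeline (logstash.conf) and settings (logstash.yml)
COPY pipeline/ /usr/share/logstash/pipeline/
COPY config/ /usr/share/logstash/config/
```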

Example logstash.yml

Create a Logstash settings file called logstash.yml as follows.

```yaml
path.config: /usr/share/logstash/pipeline/
```

Example logstash.conf

Create a Logstash configuration file called logstash.conf as follows.

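```
input {
  graphite {
    # Listen on all interfaces so that traffic routed through the Kubernetes
    # service reaches Logstash; "localhost" would accept only in-pod connections
    host => "0.0.0.0"
    port => 9999
    mode => "server"
  }
}

output {
  http {
    http_method => "post"
    url => "https://<splunk-host-name-or-ip-address>:<splunk-http-event-collector-port>/services/collector/event"
    headers => ["Authorization", "Splunk <splunk-http-event-collector-token>"]
    # Send each event as a JSON object whose "event" field holds the message
    mapping => {"event" => "%{message}"}
    ssl_verification_mode => "none" # To skip SSL verification
  }
}
```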
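To check the Logstash pipeline independently of jmxtrans, you can send it a metric in the Graphite plaintext format (`<metric path> <value> <Unix timestamp>` followed by a newline), which is the format the `graphite` input listens for on port 9999. The following Python sketch is a hypothetical test helper, not part of the deployment; the function names and the metric path `test.logstash.ping` are made up for illustration.

```python
import socket
import time
from typing import Optional


def format_graphite_line(path: str, value: float, timestamp: Optional[int] = None) -> str:
    """Format one metric in the Graphite plaintext protocol (hypothetical helper)."""
    if " " in path:
        # Fields are space-separated, so a space in the path would corrupt the line.
        raise ValueError("metric path must not contain spaces")
    if timestamp is None:
        timestamp = int(time.time())  # Graphite timestamps are Unix epoch seconds
    return f"{path} {value} {timestamp}\n"


def send_graphite_line(host: str, port: int, line: str, timeout: float = 5.0) -> None:
    """Open a TCP connection to the Graphite listener and send one line (hypothetical helper)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(line.encode("utf-8"))
```

For example, after running `kubectl -n es port-forward deployment/logstash 9999:9999`, calling `send_graphite_line("localhost", 9999, format_graphite_line("test.logstash.ping", 1))` should result in an event in your Splunk index if the output section is configured correctly.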

Building the Docker image

Build the Docker image as follows.

1. Ensure that `Dockerfile.jmxtrans`, `Dockerfile.logstash`, and `run.sh` are in the same directory. Edit `Dockerfile.logstash` and replace `<required-logstash-version>` with the Logstash version you want to use as a base.

2. Ensure that `logstash.yml` is in a subdirectory called `config/` of the directory in step 1.

3. Ensure that `logstash.conf` is in a subdirectory called `pipeline/` of the directory in step 1.

4. Edit `logstash.conf` and replace the placeholders:

   - Replace `<splunk-host-name-or-ip-address>` with the external Splunk Enterprise, Splunk Universal Forwarder, or Splunk Cloud host name or IP address.
   - Replace `<splunk-http-event-collector-port>` with the HTTP Event Collector port number.
   - Replace `<splunk-http-event-collector-token>` with the HTTP Event Collector token set up on the Splunk HTTP Event Collector data input.

5. Create the Logstash image:

   ```shell
   docker build -t <registry url>/logstash:<tag> -f Dockerfile.logstash .
   ```

6. Push the image to your cluster Docker registry:

   ```shell
   docker push <registry url>/logstash:<tag>
   ```

Example Logstash deployment

The following is an example of a deployment YAML file that sets up a Logstash instance.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: es
spec:
  selector:
    matchLabels:
      app: logstash
  replicas: 1
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
        - name: logstash
          image: >-
            <registry url>/logstash:<tag>
          ports:
            - containerPort: 9999
```

Example Logstash service configuration

After creating the Logstash instance, add a service that provides discovery and routing to the newly created pods. The following is an example of a service that uses a selector for a Logstash pod. In this example, 9999 is the port configured in the `logstash.conf` file created earlier.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: <logstash-service-name>
  namespace: es
  labels:
    app.kubernetes.io/name: logstash
spec:
  # Route traffic to pods that carry the label set in the Deployment template
  selector:
    app: logstash
  ports:
    - protocol: TCP
      port: 9999
      targetPort: 9999
```
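Once the Deployment and service are running, one quick way to confirm that the service routes to Logstash is to open a TCP connection to it from a pod inside the cluster, using the same `<logstash-service-name>.<namespace>.svc` host name that jmxtrans uses later. The following Python sketch assumes you run it from a pod that has Python available; `service_host` and `can_connect` are hypothetical helper names.

```python
import socket


def service_host(service: str, namespace: str) -> str:
    """Return the in-cluster DNS name for a service, in the short .svc form."""
    return f"{service}.{namespace}.svc"


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS resolution failures, refused connections, and timeouts.
        return False
```

For example, `can_connect(service_host("<logstash-service-name>", "es"), 9999)` returns `True` when something is listening behind the service. A successful connection shows only that the port is reachable, not that events reach Splunk.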

Configure JMX for Event Streams

To expose the JMX port within the cluster, set the spec.strimziOverrides.kafka.jmxOptions value to {} and enable jmxtrans.

For example:

```yaml
apiVersion: eventstreams.ibm.com/v1beta2
kind: EventStreams
# ...
spec:
  # ...
  strimziOverrides:
    # ...
    kafka:
      jmxOptions: {}
```

Tip: The JMX port can be password-protected to prevent unauthorized pods from accessing it. For more information, see Configuring secure JMX connections.

The following example shows how to configure a jmxtrans deployment for Event Streams versions earlier than 11.2.0. If you are running Event Streams versions 11.2.0 and later, follow the instructions in the GitHub README to configure a jmxtrans deployment.

```yaml
# ...
spec:
  # ...
  strimziOverrides:
    # ...
    jmxTrans:
      # ...
      kafkaQueries:
        - targetMBean: "kafka.server:type=BrokerTopicMetrics,name=*"
          attributes: ["Count"]
          outputs: ["standardOut", "logstash"]
      outputDefinitions:
        - outputType: "com.googlecode.jmxtrans.model.output.StdOutWriter"
          name: "standardOut"
        - outputType: "com.googlecode.jmxtrans.model.output.GraphiteWriterFactory"
          host: "<logstash-service-name>.<namespace>.svc"
          port: 9999
          flushDelayInSeconds: 5
          name: "logstash"
```
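Each name listed in `kafkaQueries[].outputs` is meant to match the `name` of an entry in `outputDefinitions`, and a mismatch is an easy mistake to make when editing the CR by hand. The following Python sketch checks this on the `jmxTrans` section expressed as a dictionary (for example, after loading the CR with a YAML parser of your choice). The function name `undefined_outputs` is hypothetical, and this is an illustrative check, not a validation that Event Streams performs.

```python
from __future__ import annotations

from typing import Any


def undefined_outputs(jmx_trans: dict[str, Any]) -> set[str]:
    """Return output names referenced by kafkaQueries that have no outputDefinitions entry."""
    defined = {d["name"] for d in jmx_trans.get("outputDefinitions", [])}
    referenced = {
        name
        for query in jmx_trans.get("kafkaQueries", [])
        for name in query.get("outputs", [])
    }
    return referenced - defined


# The jmxTrans section from the example above, as a Python dictionary.
example = {
    "kafkaQueries": [
        {
            "targetMBean": "kafka.server:type=BrokerTopicMetrics,name=*",
            "attributes": ["Count"],
            "outputs": ["standardOut", "logstash"],
        }
    ],
    "outputDefinitions": [
        {"outputType": "com.googlecode.jmxtrans.model.output.StdOutWriter", "name": "standardOut"},
        {
            "outputType": "com.googlecode.jmxtrans.model.output.GraphiteWriterFactory",
            "host": "<logstash-service-name>.<namespace>.svc",
            "port": 9999,
            "flushDelayInSeconds": 5,
            "name": "logstash",
        },
    ],
}

print(undefined_outputs(example))  # prints set(): every referenced output is defined
```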

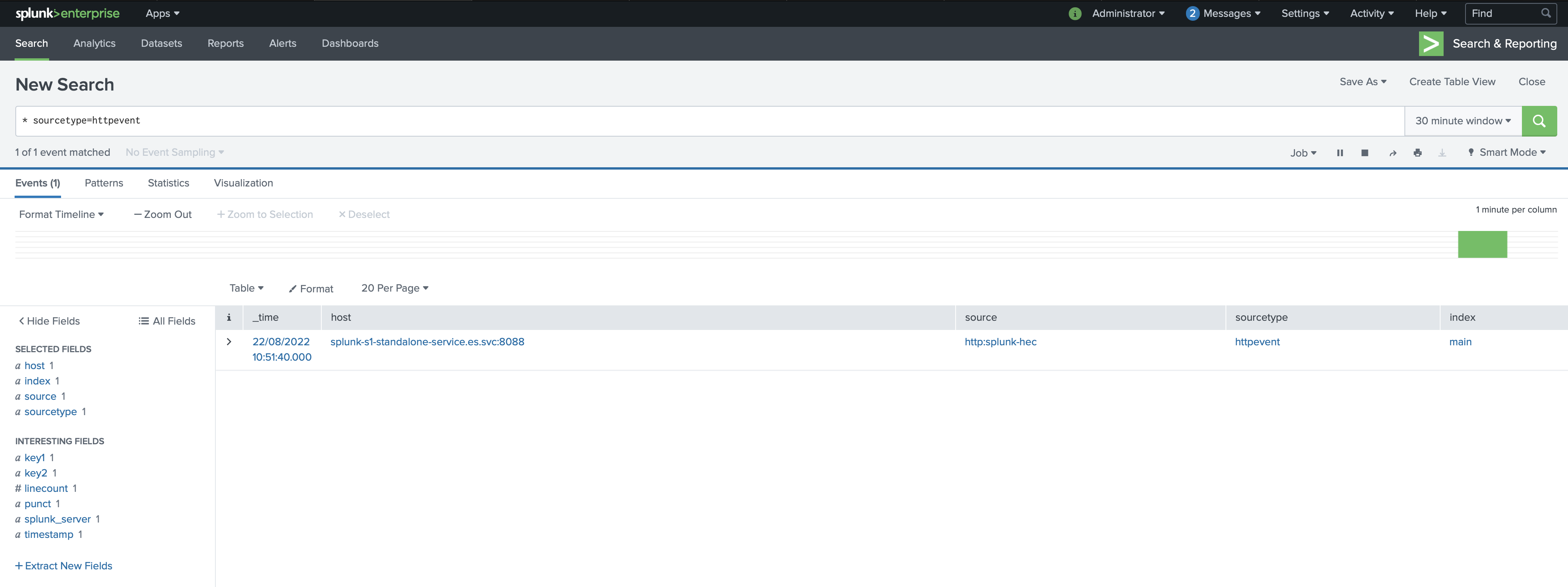

Events start appearing in Splunk after you apply the `jmxTrans` configuration in the custom resource. The time it takes for events to appear in the Splunk index depends on the jmxtrans scrape interval and the size of the receive queue on Splunk.

Troubleshooting

- If metrics are not appearing in your external Splunk, run the following command to examine the logs for jmxtrans:

  ```shell
  kubectl -n <target-namespace> logs <jmxtrans-pod-name>
  ```

- You can change the log level for jmxtrans by setting the required granularity value in `spec.strimziOverrides.jmxTrans.logLevel`. For example:

  ```yaml
  # ...
  spec:
    # ...
    strimziOverrides:
      # ...
      jmxTrans:
        # ...
        logLevel: debug
  ```

- To check the logs from the Splunk pod, view the `splunkd.log` file as follows:

  ```shell
  tail -f $SPLUNK_HOME/var/log/splunk/splunkd.log
  ```

- If the Splunk Operator installation fails with the error `Bundle extract size limit`, install the Splunk Operator on Red Hat OpenShift Container Platform 4.9 or later.

- If you require additional logs and `stdout` output from Logstash, edit the `logstash.conf` file and add the `stdout` output. You can also modify `logstash.yml` to raise the log level.

  Example `logstash.conf` file:

  ```
  input {
    http { # input plugin for HTTP and HTTPS traffic
      port => 5044 # port for incoming requests
      ssl => false # HTTPS traffic processing
    }
    graphite {
      host => "0.0.0.0"
      port => 9999
      mode => "server"
    }
  }
  output {
    http {
      http_method => "post"
      url => "https://<splunk-host-name-or-ip-address>:<splunk-http-event-collector-port>/services/collector/raw"
      headers => ["Authorization", "Splunk <splunk-http-event-collector-token>"]
      format => "json"
      ssl_verification_mode => "none"
    }
    stdout {}
  }
  ```

  Example `logstash.yml` file:

  ```yaml
  path.config: /usr/share/logstash/pipeline/
  log.level: trace
  ```