MachineIntelligenceCore:ReinforcementLearning

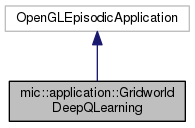

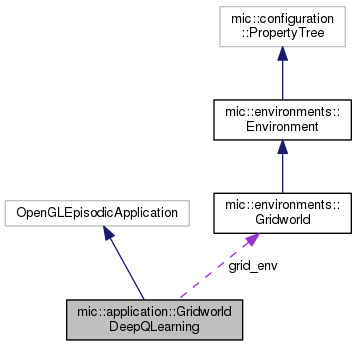

Class responsible for solving the gridworld problem with Q-learning and (not that) deep neural networks.

#include <GridworldDeepQLearning.hpp>

Public Member Functions | |

| GridworldDeepQLearning (std::string node_name_="application") | |

| virtual | ~GridworldDeepQLearning () |

Protected Member Functions | |

| virtual void | initialize (int argc, char *argv[]) |

| virtual void | initializePropertyDependentVariables () |

| virtual bool | performSingleStep () |

| virtual void | startNewEpisode () |

| virtual void | finishCurrentEpisode () |

Private Member Functions | |

| float | computeBestValueForCurrentState () |

| mic::types::MatrixXfPtr | getPredictedRewardsForCurrentState () |

| mic::types::NESWAction | selectBestActionForCurrentState () |

| std::string | streamNetworkResponseTable () |

Private Attributes | |

| WindowCollectorChart< float > * | w_chart |

| Window for displaying statistics. | |

| mic::utils::DataCollectorPtr < std::string, float > | collector_ptr |

| Data collector. | |

| mic::environments::Gridworld | grid_env |

| The gridworld environment. | |

| mic::configuration::Property < float > | step_reward |

| mic::configuration::Property < float > | discount_rate |

| mic::configuration::Property < float > | learning_rate |

| mic::configuration::Property < double > | epsilon |

| mic::configuration::Property < std::string > | statistics_filename |

| Property: name of the file to which the statistics will be exported. | |

| mic::configuration::Property < std::string > | mlnn_filename |

| Property: name of the file to which the neural network will be serialized (or deserialized from). | |

| mic::configuration::Property < bool > | mlnn_save |

| Property: flag denoting whether the neural network should be saved to a file (at the end of every episode). | |

| mic::configuration::Property < bool > | mlnn_load |

| Property: flag denoting whether the neural network should be loaded from a file (at the initialization of the task). | |

| BackpropagationNeuralNetwork < float > | neural_net |

| Multi-layer neural network used for approximation of the Q-state rewards. | |

| mic::types::Position2D | player_pos_t_minus_prim |

| long long | sum_of_iterations |

| long long | sum_of_rewards |

Class responsible for solving the gridworld problem with Q-learning and (not that) deep neural networks.

Definition at line 48 of file GridworldDeepQLearning.hpp.

| mic::application::GridworldDeepQLearning::GridworldDeepQLearning | ( | std::string | node_name_ = "application" | ) |

Default constructor. Sets the application/node name and the default values of the configuration properties.

| node_name_ | Name of the application/node (in configuration file). |

Definition at line 39 of file GridworldDeepQLearning.cpp.

References discount_rate, epsilon, learning_rate, mlnn_filename, mlnn_load, mlnn_save, statistics_filename, and step_reward.

| mic::application::GridworldDeepQLearning::~GridworldDeepQLearning | ( | ) | virtual |

References w_chart.

| float mic::application::GridworldDeepQLearning::computeBestValueForCurrentState | ( | ) | private |

Calculates the best value for the current state by finding the allowed action with the maximal expected value.

Definition at line 215 of file GridworldDeepQLearning.cpp.

References getPredictedRewardsForCurrentState(), grid_env, and mic::environments::Environment::isActionAllowed().

Referenced by performSingleStep().
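The value returned by computeBestValueForCurrentState() corresponds to the maximum of Q(s, a) over the actions allowed in the current state. A minimal sketch, assuming the predicted rewards are available as four floats in NESW order and the allowed moves as a boolean mask; `QValues`, `ActionMask` and `bestAllowedValue` are hypothetical stand-ins for the library's `mic::types::MatrixXfPtr` and `Environment::isActionAllowed()`:

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <limits>

// Hypothetical stand-ins: Q-values for the four NESW actions of the current
// state, and a mask telling which of these moves the environment allows.
using QValues = std::array<float, 4>;
using ActionMask = std::array<bool, 4>;

// Returns max over allowed actions a of Q(s, a). If no action is allowed,
// returns -infinity so that the caller can detect the dead end.
float bestAllowedValue(const QValues& q, const ActionMask& allowed) {
    float best = -std::numeric_limits<float>::infinity();
    for (std::size_t a = 0; a < q.size(); ++a) {
        if (allowed[a] && q[a] > best) {
            best = q[a];
        }
    }
    return best;
}
```

Masking before taking the maximum matters: a forbidden move (e.g. into a wall) may still get a high prediction from an untrained network, and it must not inflate the value of the state.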

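| void mic::application::GridworldDeepQLearning::finishCurrentEpisode | ( | ) | protected virtual |

Method called when the given episode ends (goal: export the collected statistics to a file, etc.). Declared abstract in the base class and overridden here.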

Definition at line 128 of file GridworldDeepQLearning.cpp.

References collector_ptr, mic::environments::Gridworld::getAgentPosition(), mic::environments::Gridworld::getStateReward(), grid_env, mlnn_filename, mlnn_save, neural_net, statistics_filename, sum_of_iterations, and sum_of_rewards.
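The reference only shows that finishCurrentEpisode() reads the reward of the state at the agent position and updates sum_of_iterations and sum_of_rewards. A minimal sketch of such running statistics, assuming each finished episode contributes its number of iterations and the reward of the state in which it ended; `EpisodeStatistics` and its averages are hypothetical, and the names of the series actually written through collector_ptr are not documented here:

```cpp
#include <cassert>

// Hypothetical stand-in for the running statistics kept in
// sum_of_iterations and sum_of_rewards (both long long in the class).
struct EpisodeStatistics {
    long long sum_of_iterations = 0;
    long long sum_of_rewards = 0;
    long long episodes = 0;

    // Accumulates the result of one finished episode.
    void addEpisode(long long iterations, long long final_reward) {
        sum_of_iterations += iterations;
        sum_of_rewards += final_reward;
        ++episodes;
    }

    // Average number of iterations per episode (0 before the first episode).
    double averageIterations() const {
        return episodes == 0 ? 0.0 : static_cast<double>(sum_of_iterations) / episodes;
    }

    // Average collected reward per episode (0 before the first episode).
    double averageReward() const {
        return episodes == 0 ? 0.0 : static_cast<double>(sum_of_rewards) / episodes;
    }
};
```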

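| mic::types::MatrixXfPtr mic::application::GridworldDeepQLearning::getPredictedRewardsForCurrentState | ( | ) | private |

Returns the rewards predicted by the neural network for the current state.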

Definition at line 244 of file GridworldDeepQLearning.cpp.

References mic::environments::Gridworld::encodeAgentGrid(), grid_env, and neural_net.

Referenced by computeBestValueForCurrentState(), performSingleStep(), and selectBestActionForCurrentState().
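getPredictedRewardsForCurrentState() encodes the agent grid and feeds it to neural_net. A minimal sketch of that pipeline, assuming a one-hot encoding (one input per grid cell, 1.0 where the agent stands) and replacing the multi-layer BackpropagationNeuralNetwork with a single linear layer with one output per NESW action; `encodeAgentOneHot` and `predictQValues` are hypothetical, and the real Gridworld::encodeAgentGrid() may encode the grid differently:

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical encoding: one input per grid cell, 1.0 where the agent stands.
std::vector<float> encodeAgentOneHot(std::size_t width, std::size_t height,
                                     std::size_t agent_x, std::size_t agent_y) {
    std::vector<float> input(width * height, 0.0f);
    input[agent_y * width + agent_x] = 1.0f;
    return input;
}

// Single linear layer standing in for the multi-layer network:
// q[a] = bias[a] + sum_i weights[a][i] * input[i], one output per NESW action.
std::array<float, 4> predictQValues(const std::vector<float>& input,
                                    const std::array<std::vector<float>, 4>& weights,
                                    const std::array<float, 4>& bias) {
    std::array<float, 4> q = bias;
    for (std::size_t a = 0; a < q.size(); ++a) {
        assert(weights[a].size() == input.size());
        for (std::size_t i = 0; i < input.size(); ++i) {
            q[a] += weights[a][i] * input[i];
        }
    }
    return q;
}
```

With a one-hot input, a linear layer degenerates to a lookup table (one weight per cell and action), which is why hidden layers are needed for any generalization between cells.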

| void mic::application::GridworldDeepQLearning::initialize | ( | int argc, char * argv[] | ) | protected virtual |

Method initializes GLUT and OpenGL windows.

| argc | Number of application parameters. |

| argv | Array of application parameters. |

Definition at line 68 of file GridworldDeepQLearning.cpp.

References collector_ptr, sum_of_iterations, sum_of_rewards, and w_chart.

| void mic::application::GridworldDeepQLearning::initializePropertyDependentVariables | ( | ) | protected virtual |

Initializes all variables that are property-dependent.

Definition at line 88 of file GridworldDeepQLearning.cpp.

References mic::environments::Environment::getEnvironmentHeight(), mic::environments::Environment::getEnvironmentWidth(), grid_env, mic::environments::Gridworld::initializeEnvironment(), mlnn_filename, mlnn_load, and neural_net.

| bool mic::application::GridworldDeepQLearning::performSingleStep | ( | ) | protected virtual |

Performs a single step of computations.

Definition at line 287 of file GridworldDeepQLearning.cpp.

References computeBestValueForCurrentState(), discount_rate, mic::environments::Gridworld::encodeAgentGrid(), mic::environments::Gridworld::environmentToString(), epsilon, mic::environments::Gridworld::getAgentPosition(), getPredictedRewardsForCurrentState(), mic::environments::Gridworld::getStateReward(), grid_env, mic::environments::Gridworld::isStateTerminal(), learning_rate, mic::environments::Environment::moveAgent(), neural_net, player_pos_t_minus_prim, selectBestActionForCurrentState(), step_reward, and streamNetworkResponseTable().
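The references of performSingleStep() (step_reward, discount_rate, computeBestValueForCurrentState(), isStateTerminal(), player_pos_t_minus_prim) match the standard Q-learning target r + γ·max_a' Q(s', a'). A minimal sketch of building the training target for the state visited at time t-1, assuming that only the output of the action actually taken is changed and that a terminal state contributes no future value; `makeQLearningTarget` is hypothetical and not necessarily how the class assembles its target:

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using QValues = std::array<float, 4>;

// Builds the training target for the state visited at time t-1. Only the entry
// of the action actually taken is changed; the other outputs keep the network's
// own predictions, so they produce zero error during backpropagation.
QValues makeQLearningTarget(const QValues& predicted_prev, std::size_t action,
                            float reward, float best_next_value,
                            bool next_is_terminal, float discount_rate) {
    assert(action < predicted_prev.size());
    QValues target = predicted_prev;
    target[action] = next_is_terminal
        ? reward                                     // terminal: no future value
        : reward + discount_rate * best_next_value;  // r + gamma * max_a' Q(s', a')
    return target;
}
```

In this sketch learning_rate does not appear in the target: it is assumed to be the step size passed to the network's backpropagation, which then moves the prediction toward the target.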

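| mic::types::NESWAction mic::application::GridworldDeepQLearning::selectBestActionForCurrentState | ( | ) | private |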

Finds the best action for the current state.

Definition at line 254 of file GridworldDeepQLearning.cpp.

References getPredictedRewardsForCurrentState(), grid_env, and mic::environments::Environment::isActionAllowed().

Referenced by performSingleStep().
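selectBestActionForCurrentState() is the argmax counterpart of computeBestValueForCurrentState(). A minimal sketch, assuming the network outputs are ordered North, East, South, West; the `NESW` enum and `bestAllowedAction` are hypothetical stand-ins for `mic::types::NESWAction` and the class method:

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical NESW ordering of the network outputs.
enum class NESW { North = 0, East = 1, South = 2, West = 3 };

// Returns the allowed action with the highest predicted value (argmax over
// allowed actions). Ties are resolved in favour of the first action in NESW
// order. Precondition: at least one action is allowed.
NESW bestAllowedAction(const std::array<float, 4>& q,
                       const std::array<bool, 4>& allowed) {
    int best = -1;
    for (std::size_t a = 0; a < q.size(); ++a) {
        if (allowed[a] && (best < 0 || q[a] > q[static_cast<std::size_t>(best)])) {
            best = static_cast<int>(a);
        }
    }
    assert(best >= 0 && "no allowed action");
    return static_cast<NESW>(best);
}
```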

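| void mic::application::GridworldDeepQLearning::startNewEpisode | ( | ) | protected virtual |

Method called at the beginning of a new episode (goal: reset the statistics, etc.). Declared abstract in the base class and overridden here.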

Definition at line 116 of file GridworldDeepQLearning.cpp.

References mic::environments::Gridworld::environmentToString(), grid_env, mic::environments::Gridworld::initializeEnvironment(), and streamNetworkResponseTable().

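| std::string mic::application::GridworldDeepQLearning::streamNetworkResponseTable | ( | ) | private |

Streams the current network response, i.e. the values of the actions associated with consecutive agent poses, into a string.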

Definition at line 150 of file GridworldDeepQLearning.cpp.

References mic::environments::Gridworld::encodeAgentGrid(), mic::environments::Gridworld::getAgentPosition(), mic::environments::Environment::getEnvironmentHeight(), mic::environments::Environment::getEnvironmentWidth(), grid_env, mic::environments::Environment::isActionAllowed(), mic::environments::Gridworld::isStateAllowed(), mic::environments::Gridworld::isStateTerminal(), mic::environments::Gridworld::moveAgentToPosition(), and neural_net.

Referenced by performSingleStep(), and startNewEpisode().
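streamNetworkResponseTable() places the agent in consecutive cells and records the network's action values for each. A minimal sketch of the formatting side, assuming one line per allowed cell; the two callbacks (`CellAllowed`, `CellQValues`) and `streamResponseTable` are hypothetical stand-ins for the environment queries and the network, and the real output layout may differ:

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <functional>
#include <iomanip>
#include <sstream>
#include <string>

// Hypothetical callbacks: whether a cell can be occupied, and the Q-values
// predicted for the agent placed in that cell.
using CellAllowed = std::function<bool(std::size_t, std::size_t)>;
using CellQValues = std::function<std::array<float, 4>(std::size_t, std::size_t)>;

// Writes one line per allowed cell with the values of the N, E, S, W actions.
std::string streamResponseTable(std::size_t width, std::size_t height,
                                const CellAllowed& is_allowed,
                                const CellQValues& q_values) {
    std::ostringstream os;
    os << std::fixed << std::setprecision(2);
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            if (!is_allowed(x, y)) continue;  // skip walls and other forbidden cells
            const std::array<float, 4> q = q_values(x, y);
            os << "(" << x << "," << y << "):"
               << " N=" << q[0] << " E=" << q[1]
               << " S=" << q[2] << " W=" << q[3] << '\n';
        }
    }
    return os.str();
}
```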

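| mic::utils::DataCollectorPtr<std::string, float> mic::application::GridworldDeepQLearning::collector_ptr | private |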

Data collector.

Definition at line 97 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), and initialize().

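| mic::configuration::Property<float> mic::application::GridworldDeepQLearning::discount_rate | private |

Property: discount rate applied to future rewards (should be in the range 0.0-1.0).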

Definition at line 110 of file GridworldDeepQLearning.hpp.

Referenced by GridworldDeepQLearning(), and performSingleStep().
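To illustrate what discount_rate controls, the sketch below computes the discounted return G = r_0 + γ·r_1 + γ²·r_2 + … of one trajectory. It assumes, for illustration only, that every step yields step_reward and the last step yields the reward of the goal state; `discountedReturn` is a hypothetical helper, not part of the class:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Discounted return G = r_0 + gamma * r_1 + gamma^2 * r_2 + ...
// A discount_rate close to 1.0 keeps distant rewards relevant,
// while a value close to 0.0 makes the agent short-sighted.
float discountedReturn(const std::vector<float>& rewards, float discount_rate) {
    float g = 0.0f;
    float weight = 1.0f;  // gamma^k for the k-th reward
    for (float r : rewards) {
        g += weight * r;
        weight *= discount_rate;
    }
    return g;
}
```

For two steps with step_reward = -1 followed by a goal reward of 10 and γ = 0.9, the return is -1 - 0.9 + 0.81·10 = 6.2; with γ = 0 only the first reward (-1) counts.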

| mic::configuration::Property<double> mic::application::GridworldDeepQLearning::epsilon | private |

Property: epsilon used in action selection, i.e. the probability "below" which a random action will be selected. If epsilon < 0, it will be set to 1/episode, hence changing dynamically depending on the episode number.

Definition at line 121 of file GridworldDeepQLearning.hpp.

Referenced by GridworldDeepQLearning(), and performSingleStep().
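The epsilon rule above can be sketched as an epsilon-greedy decision with an optional 1/episode schedule. A minimal sketch, assuming episodes are numbered from 1 and a uniform draw in [0, 1) is compared against epsilon; `effectiveEpsilon` and `shouldExplore` are hypothetical helpers:

```cpp
#include <cassert>
#include <random>

// Effective exploration rate: a non-negative epsilon property is used as is;
// a negative one is replaced by 1/episode, so exploration decays over time.
double effectiveEpsilon(double epsilon, long long episode) {
    assert(episode >= 1);
    return epsilon < 0.0 ? 1.0 / static_cast<double>(episode) : epsilon;
}

// Epsilon-greedy decision: explore (pick a random action) when a uniform draw
// from [0, 1) falls below eps, otherwise exploit the best predicted action.
bool shouldExplore(double eps, std::mt19937& rng) {
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    return uniform(rng) < eps;
}
```

With epsilon = -1, the agent explores on every step of episode 1, on roughly a quarter of the steps in episode 4, and increasingly rarely afterwards.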

| mic::environments::Gridworld mic::application::GridworldDeepQLearning::grid_env | private |

The gridworld environment.

Definition at line 100 of file GridworldDeepQLearning.hpp.

Referenced by computeBestValueForCurrentState(), finishCurrentEpisode(), getPredictedRewardsForCurrentState(), initializePropertyDependentVariables(), performSingleStep(), selectBestActionForCurrentState(), startNewEpisode(), and streamNetworkResponseTable().

| mic::configuration::Property<float> mic::application::GridworldDeepQLearning::learning_rate | private |

Property: neural network learning rate (should be in range 0.0-1.0).

Definition at line 115 of file GridworldDeepQLearning.hpp.

Referenced by GridworldDeepQLearning(), and performSingleStep().

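| mic::configuration::Property<std::string> mic::application::GridworldDeepQLearning::mlnn_filename | private |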

Property: name of the file to which the neural network will be serialized (or deserialized from).

Definition at line 127 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), GridworldDeepQLearning(), and initializePropertyDependentVariables().

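| mic::configuration::Property<bool> mic::application::GridworldDeepQLearning::mlnn_load | private |

Property: flag denoting whether the neural network should be loaded from a file (at the initialization of the task).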

Definition at line 133 of file GridworldDeepQLearning.hpp.

Referenced by GridworldDeepQLearning(), and initializePropertyDependentVariables().

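| mic::configuration::Property<bool> mic::application::GridworldDeepQLearning::mlnn_save | private |

Property: flag denoting whether the neural network should be saved to a file (at the end of every episode).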

Definition at line 130 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), and GridworldDeepQLearning().

| BackpropagationNeuralNetwork<float> mic::application::GridworldDeepQLearning::neural_net | private |

Multi-layer neural network used for approximation of the Q-state rewards.

Definition at line 136 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), getPredictedRewardsForCurrentState(), initializePropertyDependentVariables(), performSingleStep(), and streamNetworkResponseTable().

| mic::types::Position2D mic::application::GridworldDeepQLearning::player_pos_t_minus_prim | private |

Player position at time (t-1).

Definition at line 165 of file GridworldDeepQLearning.hpp.

Referenced by performSingleStep().

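| mic::configuration::Property<std::string> mic::application::GridworldDeepQLearning::statistics_filename | private |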

Property: name of the file to which the statistics will be exported.

Definition at line 124 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), and GridworldDeepQLearning().

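| mic::configuration::Property<float> mic::application::GridworldDeepQLearning::step_reward | private |

Property: the "expected intermediate reward", i.e. the reward received for performing each step (typically negative, but it can be positive as well).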

Definition at line 105 of file GridworldDeepQLearning.hpp.

Referenced by GridworldDeepQLearning(), and performSingleStep().

| long long mic::application::GridworldDeepQLearning::sum_of_iterations | private |

Sum of all iterations made so far; used in statistics.

Definition at line 170 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), and initialize().

| long long mic::application::GridworldDeepQLearning::sum_of_rewards | private |

Sum of all rewards collected so far; used in statistics.

Definition at line 175 of file GridworldDeepQLearning.hpp.

Referenced by finishCurrentEpisode(), and initialize().

| WindowCollectorChart<float> * mic::application::GridworldDeepQLearning::w_chart | private |

Window for displaying statistics.

Definition at line 94 of file GridworldDeepQLearning.hpp.

Referenced by initialize(), and ~GridworldDeepQLearning().