MachineIntelligenceCore:NeuralNets

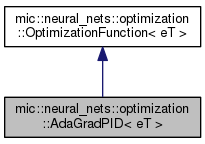

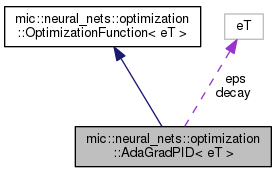

AdaGradPID - adaptive gradient descent with proportional, integral and derivative coefficients.

#include <GradPID.hpp>

Public Member Functions

| Return type | Member |
| --- | --- |
| | AdaGradPID (size_t rows_, size_t cols_, eT decay_=0.9, eT eps_=1e-8) |
| mic::types::MatrixPtr< eT > | calculateUpdate (mic::types::MatrixPtr< eT > x_, mic::types::MatrixPtr< eT > dx_, eT learning_rate_=0.001) |

Public Member Functions inherited from mic::neural_nets::optimization::OptimizationFunction< eT >

| Return type | Member | Description |
| --- | --- | --- |
| | OptimizationFunction () | |
| virtual | ~OptimizationFunction () | Virtual destructor - empty. |
| virtual void | update (mic::types::MatrixPtr< eT > p_, mic::types::MatrixPtr< eT > dp_, eT learning_rate_, eT decay_=0.0) | |
| virtual void | update (mic::types::MatrixPtr< eT > p_, mic::types::MatrixPtr< eT > x_, mic::types::MatrixPtr< eT > y_, eT learning_rate_=0.001) | |

Protected Attributes

| Type | Name | Description |
| --- | --- | --- |
| eT | decay | Decay ratio, similar to momentum. |
| eT | eps | Smoothing term that avoids division by zero. |
| mic::types::MatrixPtr< eT > | p_rate | Adaptive proportional factor (learning rate). |
| mic::types::MatrixPtr< eT > | i_rate | Adaptive integral factor (learning rate). |
| mic::types::MatrixPtr< eT > | d_rate | Adaptive derivative factor (learning rate). |
| mic::types::MatrixPtr< eT > | Edx | Surprisal - for feed forward nets it is based on the difference between the prediction and target. |
| mic::types::MatrixPtr< eT > | dx_prev | Previous value of gradients. |
| mic::types::MatrixPtr< eT > | deltaP | Proportional update. |
| mic::types::MatrixPtr< eT > | deltaI | Integral update. |
| mic::types::MatrixPtr< eT > | deltaD | Derivative update. |
| mic::types::MatrixPtr< eT > | delta | Calculated update. |

AdaGradPID - adaptive gradient descent with proportional, integral and derivative coefficients.

Definition at line 180 of file GradPID.hpp.

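| mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID (size_t rows_, size_t cols_, eT decay_=0.9, eT eps_=1e-8) | inline |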

Constructor. Sets dimensions, values of decay (default=0.9) and eps (default=1e-8).

| Parameter | Description |
| --- | --- |
| rows_ | Number of rows of the updated matrix/its gradient. |
| cols_ | Number of columns of the updated matrix/its gradient. |

Definition at line 188 of file GradPID.hpp.

References mic::neural_nets::optimization::AdaGradPID< eT >::d_rate, mic::neural_nets::optimization::AdaGradPID< eT >::delta, mic::neural_nets::optimization::AdaGradPID< eT >::deltaD, mic::neural_nets::optimization::AdaGradPID< eT >::deltaI, mic::neural_nets::optimization::AdaGradPID< eT >::deltaP, mic::neural_nets::optimization::AdaGradPID< eT >::dx_prev, mic::neural_nets::optimization::AdaGradPID< eT >::Edx, mic::neural_nets::optimization::AdaGradPID< eT >::i_rate, and mic::neural_nets::optimization::AdaGradPID< eT >::p_rate.

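| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::calculateUpdate (mic::types::MatrixPtr< eT > x_, mic::types::MatrixPtr< eT > dx_, eT learning_rate_=0.001) | inline, virtual |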

Calculates the update according to the AdaGradPID update rule.

| Parameter | Description |
| --- | --- |
| x_ | Pointer to the current matrix. |
| dx_ | Pointer to the current gradient of that matrix. |
| learning_rate_ | Learning rate (default=0.001). |

Implements mic::neural_nets::optimization::OptimizationFunction< eT >.

Definition at line 227 of file GradPID.hpp.

References mic::neural_nets::optimization::AdaGradPID< eT >::delta, and mic::neural_nets::optimization::AdaGradPID< eT >::Edx.
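This page does not spell out the update rule itself, so the following is only a minimal sketch of how a PID-style update could combine the documented attributes. It assumes the proportional term uses the current gradient, the integral term uses the decaying average `Edx`, and the derivative term uses the difference from `dx_prev`. The `PidSketch` type, its scalar rates, and its use of `std::vector` in place of `mic::types::MatrixPtr< eT >` are all hypothetical and are not taken from GradPID.hpp.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative only: names mirror the documented attributes, but the layout
// and the update rule below are assumptions, not the code in GradPID.hpp.
template <typename eT>
struct PidSketch {
    eT decay;                    // decay ratio of the running average
    eT p_rate, i_rate, d_rate;   // scalars here; the class stores matrices
    std::vector<eT> Edx;         // decaying average of gradients
    std::vector<eT> dx_prev;     // gradient from the previous call

    PidSketch(std::size_t size, eT decay_, eT p, eT i, eT d)
        : decay(decay_), p_rate(p), i_rate(i), d_rate(d),
          Edx(size, eT(0)), dx_prev(size, eT(0)) {}

    // Returns the element-wise update for the gradient dx.
    std::vector<eT> calculateUpdate(const std::vector<eT>& dx, eT learning_rate) {
        assert(dx.size() == Edx.size());
        std::vector<eT> delta(dx.size());
        for (std::size_t k = 0; k < dx.size(); ++k) {
            // Integral part: fold the new gradient into the running average.
            Edx[k] = decay * Edx[k] + (eT(1) - decay) * dx[k];
            const eT deltaP = p_rate * dx[k];                  // proportional
            const eT deltaI = i_rate * Edx[k];                 // integral
            const eT deltaD = d_rate * (dx[k] - dx_prev[k]);   // derivative
            delta[k] = learning_rate * (deltaP + deltaI + deltaD);
            dx_prev[k] = dx[k];  // remember the gradient for the next call
        }
        return delta;
    }
};
```

In this sketch the derivative term vanishes whenever two consecutive gradients are equal, so a steady gradient is driven only by the proportional and integral parts.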

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::d_rate | protected |

Adaptive derivative factor (learning rate).

Definition at line 350 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().

| eT mic::neural_nets::optimization::AdaGradPID< eT >::decay | protected |

Decay ratio, similar to momentum.

Definition at line 338 of file GradPID.hpp.

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::delta | protected |

Calculated update.

Definition at line 371 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID(), and mic::neural_nets::optimization::AdaGradPID< eT >::calculateUpdate().

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::deltaD | protected |

Derivative update.

Definition at line 368 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::deltaI | protected |

Integral update.

Definition at line 365 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::deltaP | protected |

Proportional update.

Definition at line 362 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::dx_prev | protected |

Previous value of gradients.

Definition at line 359 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::Edx | protected |

Surprisal - for feed forward nets it is based on the difference between the prediction and target.

Decaying average of gradients up to time t - E[g].

Definition at line 356 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID(), and mic::neural_nets::optimization::AdaGradPID< eT >::calculateUpdate().
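A decaying average of gradients is commonly maintained as an exponential moving average, E[g] <- decay * E[g] + (1 - decay) * g. The sketch below illustrates that recurrence on a flat `std::vector`. The helper name `updateDecayingAverage` is hypothetical, and whether GradPID.hpp uses exactly this form is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of GradPID.hpp): updates a decaying average
// of gradients in place, E[g] <- decay * E[g] + (1 - decay) * g.
template <typename eT>
void updateDecayingAverage(std::vector<eT>& Edx, const std::vector<eT>& dx, eT decay) {
    assert(Edx.size() == dx.size());
    for (std::size_t i = 0; i < Edx.size(); ++i) {
        Edx[i] = decay * Edx[i] + (eT(1) - decay) * dx[i];
    }
}
```

With a decay of 0.9, each new gradient contributes a tenth of its value, so the average responds slowly to changes, much like a momentum term.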

| eT mic::neural_nets::optimization::AdaGradPID< eT >::eps | protected |

Smoothing term that avoids division by zero.

Definition at line 341 of file GradPID.hpp.
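To show why such a smoothing term matters, here is a small sketch of an RMSProp-style scaled step, in which the gradient is divided by the root of a running mean square plus `eps`. The function `scaledStep` and its parameters are hypothetical, and the page does not confirm that AdaGradPID divides by this exact quantity.

```cpp
#include <cmath>

// Hypothetical helper (not part of GradPID.hpp): scales a gradient by the
// root of a running mean square. Adding eps keeps the denominator non-zero
// when meanSquare is zero, e.g. before any gradient has been accumulated.
template <typename eT>
eT scaledStep(eT gradient, eT meanSquare, eT learning_rate, eT eps = eT(1e-8)) {
    return learning_rate * gradient / (std::sqrt(meanSquare) + eps);
}
```

Without `eps`, a zero mean square would make the division undefined; with it, the step is large but finite.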

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::i_rate | protected |

Adaptive integral factor (learning rate).

Definition at line 347 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().

| mic::types::MatrixPtr< eT > mic::neural_nets::optimization::AdaGradPID< eT >::p_rate | protected |

Adaptive proportional factor (learning rate).

Definition at line 344 of file GradPID.hpp.

Referenced by mic::neural_nets::optimization::AdaGradPID< eT >::AdaGradPID().