
MachineIntelligenceCore:NeuralNets


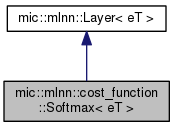

Softmax activation function.

#include <Softmax.hpp>

Public Member Functions

| Return type | Member function |
|---|---|
| | Softmax (size_t size_, std::string name_="Softmax") |
| | Softmax (size_t height_, size_t width_, size_t depth_, std::string name_="Softmax") |
| virtual | ~Softmax () |
| virtual void | resizeBatch (size_t batch_size_) |
| void | forward (bool test_=false) |
| void | backward () |
| virtual void | update (eT alpha_, eT decay_=0.0f) |

Public Member Functions inherited from mic::mlnn::Layer< eT >

| Return type | Member function | Description |
|---|---|---|
| | Layer (size_t input_height_, size_t input_width_, size_t input_depth_, size_t output_height_, size_t output_width_, size_t output_depth_, LayerTypes layer_type_, std::string name_="layer") | |
| virtual | ~Layer () | |
| mic::types::MatrixPtr< eT > | forward (mic::types::MatrixPtr< eT > x_, bool test=false) | |
| mic::types::MatrixPtr< eT > | backward (mic::types::MatrixPtr< eT > dy_) | |
| template<typename loss > mic::types::MatrixPtr< eT > | calculateNumericalGradient (mic::types::MatrixPtr< eT > x_, mic::types::MatrixPtr< eT > target_y_, mic::types::MatrixPtr< eT > param_, loss loss_, eT delta_) | |
| virtual void | resetGrads () | |
| size_t | inputSize () | Returns the size (length) of the inputs. |
| size_t | outputSize () | Returns the size (length) of the outputs. |
| size_t | batchSize () | Returns the size (length) of the (mini)batch. |
| const std::string | name () const | Returns the name of the layer. |
| mic::types::MatrixPtr< eT > | getParam (std::string name_) | |
| mic::types::MatrixPtr< eT > | getState (std::string name_) | |
| mic::types::MatrixPtr< eT > | getGradient (std::string name_) | |
| void | setState (std::string name_, mic::types::MatrixPtr< eT > mat_ptr_) | |
| template<typename omT > void | setOptimization () | |
| const std::string | type () const | |
| virtual std::string | streamLayerParameters () | |
| mic::types::MatrixPtr< eT > | lazyReturnSampleFromBatch (mic::types::MatrixPtr< eT > batch_ptr_, mic::types::MatrixArray< eT > &array_, std::string id_, size_t sample_number_, size_t sample_size_) | |
| mic::types::MatrixPtr< eT > | lazyReturnInputSample (mic::types::MatrixPtr< eT > batch_ptr_, size_t sample_number_) | |
| mic::types::MatrixPtr< eT > | lazyReturnOutputSample (mic::types::MatrixPtr< eT > batch_ptr_, size_t sample_number_) | |
| mic::types::MatrixPtr< eT > | lazyReturnChannelFromSample (mic::types::MatrixPtr< eT > sample_ptr_, mic::types::MatrixArray< eT > &array_, std::string id_, size_t sample_number_, size_t channel_number_, size_t height_, size_t width_) | |
| mic::types::MatrixPtr< eT > | lazyReturnInputChannel (mic::types::MatrixPtr< eT > sample_ptr_, size_t sample_number_, size_t channel_number_) | |
| mic::types::MatrixPtr< eT > | lazyReturnOutputChannel (mic::types::MatrixPtr< eT > sample_ptr_, size_t sample_number_, size_t channel_number_) | |
| void | lazyAllocateMatrixVector (std::vector< std::shared_ptr< mic::types::Matrix< eT > > > &vector_, size_t vector_size_, size_t matrix_height_, size_t matrix_width_) | |
| virtual std::vector < std::shared_ptr < mic::types::Matrix< eT > > > & | getInputActivations () | |
| virtual std::vector < std::shared_ptr < mic::types::Matrix< eT > > > & | getInputGradientActivations () | |
| virtual std::vector < std::shared_ptr < mic::types::Matrix< eT > > > & | getOutputActivations () | |
| virtual std::vector < std::shared_ptr < mic::types::Matrix< eT > > > & | getOutputGradientActivations () | |

Private Member Functions

| Return type | Member function |
|---|---|
| | Softmax () |

Friends

| Declaration | Name |
|---|---|
| template<typename tmp > class | mic::mlnn::MultiLayerNeuralNetwork |

Additional Inherited Members | |

Protected Member Functions inherited from mic::mlnn::Layer< eT > Protected Member Functions inherited from mic::mlnn::Layer< eT > | |

| Layer () | |

Protected Attributes inherited from mic::mlnn::Layer< eT > Protected Attributes inherited from mic::mlnn::Layer< eT > | |

| size_t | input_height |

| Height of the input (e.g. 28 for MNIST). More... | |

| size_t | input_width |

| Width of the input (e.g. 28 for MNIST). More... | |

| size_t | input_depth |

| Number of channels of the input (e.g. 3 for RGB images). More... | |

| size_t | output_height |

| Number of receptive fields in a single channel - vertical direction. More... | |

| size_t | output_width |

| Number of receptive fields in a single channel - horizontal direction. More... | |

| size_t | output_depth |

| Number of filters = number of output channels. More... | |

| size_t | batch_size |

| Size (length) of (mini)batch. More... | |

| LayerTypes | layer_type |

| Type of the layer. More... | |

| std::string | layer_name |

| Name (identifier of the type) of the layer. More... | |

| mic::types::MatrixArray< eT > | s |

| States - contains input [x] and output [y] matrices. More... | |

| mic::types::MatrixArray< eT > | g |

| Gradients - contains input [x] and output [y] matrices. More... | |

| mic::types::MatrixArray< eT > | p |

| Parameters - parameters of the layer, to be used by the derived classes. More... | |

| mic::types::MatrixArray< eT > | m |

| Memory - a list of temporal parameters, to be used by the derived classes. More... | |

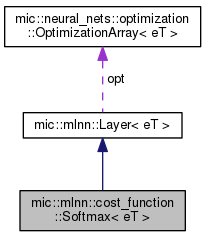

| mic::neural_nets::optimization::OptimizationArray < eT > | opt |

| Array of optimization functions. More... | |

| std::vector< std::shared_ptr < mic::types::Matrix< eT > > > | x_activations |

| Vector containing activations of input neurons - used in visualization. More... | |

| std::vector< std::shared_ptr < mic::types::Matrix< eT > > > | dx_activations |

| Vector containing activations of gradients of inputs (dx) - used in visualization. More... | |

| std::vector< std::shared_ptr < mic::types::Matrix< eT > > > | y_activations |

| Vector containing activations of output neurons - used in visualization. More... | |

| std::vector< std::shared_ptr < mic::types::Matrix< eT > > > | dy_activations |

| Vector containing activations of gradients of outputs (dy) - used in visualization. More... | |

Softmax activation function.

Template Parameters

| Parameter | Description |
|---|---|
| eT | Template parameter denoting the precision of variables (float for calculations, double for testing). |

Definition at line 38 of file Softmax.hpp.
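For reference, the standard softmax over an N-element sample x, its Jacobian, and the resulting input gradient for a loss L are given below. The symbols are chosen here for illustration and are not taken from Softmax.hpp.

```latex
y_i = \frac{\exp(x_i)}{\sum_{j=1}^{N} \exp(x_j)}, \qquad
\frac{\partial y_i}{\partial x_k} = y_i \, (\delta_{ik} - y_k), \qquad
\frac{\partial L}{\partial x_k} = y_k \left( \frac{\partial L}{\partial y_k} - \sum_{i=1}^{N} \frac{\partial L}{\partial y_i} \, y_i \right)
```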

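Softmax (size_t size_, std::string name_="Softmax") [inline]

Creates a Softmax layer with a reduced number of parameters (a single input/output length).

| Parameter | Description |
|---|---|
| size_ | Length of the input/output data. |
| name_ | Name of the layer. |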

Definition at line 46 of file Softmax.hpp.

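Softmax (size_t height_, size_t width_, size_t depth_, std::string name_="Softmax") [inline]

Creates a Softmax layer.

| Parameter | Description |
|---|---|
| height_ | Height of the input/output sample. |
| width_ | Width of the input/output sample. |
| depth_ | Depth of the input/output sample. |
| name_ | Name of the layer. |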

Definition at line 60 of file Softmax.hpp.

~Softmax () [inline, virtual]

Virtual destructor (empty).

Definition at line 76 of file Softmax.hpp.

Softmax () [inline, private]

Private constructor, used only during serialization.

Definition at line 163 of file Softmax.hpp.

void backward () [inline, virtual]

Propagates the gradients from the outputs back to the inputs (i.e., in the direction opposite to forward()).

Implements mic::mlnn::Layer< eT >.

Definition at line 124 of file Softmax.hpp.
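A minimal standalone sketch of the usual softmax backward pass for a single sample, assuming plain std::vector buffers instead of the library's mic::types::MatrixPtr. The helper softmax_backward is hypothetical and not part of Softmax.hpp, which may organise the computation differently. It relies on the identity dx_k = y_k (dy_k - sum_i dy_i y_i), which follows from the softmax Jacobian dy_i/dx_k = y_i (delta_ik - y_k), so the full N x N Jacobian never has to be built.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not the library's API): backward pass of softmax for
// one sample. y is the forward output, dy the upstream gradient dL/dy.
// Returns dx = dL/dx computed as dx_k = y_k * (dy_k - <dy, y>).
template <typename eT>
std::vector<eT> softmax_backward(const std::vector<eT>& y,
                                 const std::vector<eT>& dy) {
    // Inner product <dy, y> shared by every component of dx.
    eT dot = eT(0);
    for (std::size_t i = 0; i < y.size(); ++i) dot += dy[i] * y[i];

    std::vector<eT> dx(y.size());
    for (std::size_t k = 0; k < y.size(); ++k) dx[k] = y[k] * (dy[k] - dot);
    return dx;
}
```

Because the softmax outputs always sum to 1, the components of dx sum to 0, which makes a cheap sanity check.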

void forward (bool test_=false) [inline, virtual]

Processes the data from the inputs to the outputs.

| Parameter | Description |
|---|---|
| test_ | Test mode, used by dropout-like regularization techniques. |

Implements mic::mlnn::Layer< eT >.

Definition at line 94 of file Softmax.hpp.
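A minimal standalone sketch of what the forward pass computes for one sample, y_i = exp(x_i) / sum_j exp(x_j), assuming a std::vector<eT> input instead of the library's matrix types. The helper softmax_forward is hypothetical. Subtracting the maximum before exponentiating is a common numerical-stability trick and is not confirmed to be what Softmax.hpp does. The sketch has no test_ flag, on the assumption that softmax behaves the same in training and test mode.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not the library's API): numerically stable softmax
// over a single sample. eT mirrors the layer's template parameter.
template <typename eT>
std::vector<eT> softmax_forward(const std::vector<eT>& x) {
    std::vector<eT> y(x.size());
    if (x.empty()) return y;

    // exp(x_i - max) / sum_j exp(x_j - max) equals the plain softmax,
    // because the common factor exp(-max) cancels, but cannot overflow.
    const eT max_x = *std::max_element(x.begin(), x.end());
    eT sum = eT(0);
    for (std::size_t i = 0; i < x.size(); ++i) {
        y[i] = std::exp(x[i] - max_x);  // unnormalized exponentials
        sum += y[i];
    }
    for (eT& v : y) v /= sum;  // normalize so the outputs sum to 1
    return y;
}
```

For example, softmax_forward<double>({1.0, 2.0, 3.0}) yields approximately {0.0900, 0.2447, 0.6652}.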

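virtual void resizeBatch (size_t batch_size_) [inline, virtual]

Changes the size of the batch and resizes the auxiliary buffers e and sum accordingly.

| Parameter | Description |
|---|---|
| batch_size_ | New size of the batch. |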

Reimplemented from mic::mlnn::Layer< eT >.

Definition at line 82 of file Softmax.hpp.
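A hypothetical sketch of how the batch size, e and sum could relate, assuming e holds the exponentials of every sample (size x batch) and sum holds one normaliser per sample (1 x batch), with samples stored column by column in a flat buffer. BatchSoftmaxSketch and this layout are illustrative assumptions, not the library's classes or its actual memory layout.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical batch-aware softmax (not the library's class). Samples are
// columns of a column-major size x batch_size buffer (assumed layout).
template <typename eT>
struct BatchSoftmaxSketch {
    std::size_t size;        // length of one sample
    std::size_t batch_size;  // number of samples (columns)
    std::vector<eT> e;       // exp(x - max) for every element
    std::vector<eT> sum;     // per-sample normaliser

    BatchSoftmaxSketch(std::size_t size_, std::size_t batch_size_ = 1)
        : size(size_), batch_size(0) {
        resizeBatch(batch_size_);
    }

    // Only the batch-dependent buffers are reallocated.
    void resizeBatch(std::size_t batch_size_) {
        batch_size = batch_size_;
        e.assign(size * batch_size, eT(0));
        sum.assign(batch_size, eT(0));
    }

    // Precondition: size > 0 and x.size() == size * batch_size.
    std::vector<eT> forward(const std::vector<eT>& x) {
        std::vector<eT> y(size * batch_size);
        for (std::size_t b = 0; b < batch_size; ++b) {
            const eT* col = x.data() + b * size;
            const eT max_x = *std::max_element(col, col + size);
            sum[b] = eT(0);
            for (std::size_t i = 0; i < size; ++i) {
                e[b * size + i] = std::exp(col[i] - max_x);
                sum[b] += e[b * size + i];
            }
            for (std::size_t i = 0; i < size; ++i)
                y[b * size + i] = e[b * size + i] / sum[b];
        }
        return y;
    }
};
```

Keeping e and sum as members means that only resizeBatch() allocates; forward() simply reuses the buffers for every batch of the same size.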

virtual void update (eT alpha_, eT decay_=0.0f) [inline, virtual]

Performs the update according to the calculated gradients and the injected optimization method. Empty, as this is a "const" layer without trainable parameters.

| Parameter | Description |
|---|---|
| alpha_ | Learning rate, passed to the optimization functions of all layers. |
| decay_ | Weight decay rate, which makes "unused" (not updated) weights decay towards 0 (default 0.0, i.e. no decay). |

Implements mic::mlnn::Layer< eT >.

Definition at line 143 of file Softmax.hpp.

template<typename tmp > friend class mic::mlnn::MultiLayerNeuralNetwork [friend]

Definition at line 158 of file Softmax.hpp.