Scenario

The operations team needs to remove duplicate events from the stock movements topic so that the events can be consumed by systems that do not process events idempotently.
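To see why duplicates are a problem, consider a hypothetical consumer that adjusts a stock level by the quantity in every movement event it receives. Such a consumer is not idempotent: processing the same event twice changes the result. The following minimal Python sketch shows the effect of one duplicated event. The `product` and `quantity` fields, the sample data, and both helper functions are assumptions for illustration, and `drop_duplicates` is a simplified "first event per movementid" filter, not the deduplicate node itself.

```python
from collections import defaultdict

# Hypothetical stock movement events. Only "movementid" comes from the tutorial;
# "product" and "quantity" are illustrative field names.
events = [
    {"movementid": "m-1", "product": "widget", "quantity": 5},
    {"movementid": "m-2", "product": "widget", "quantity": -2},
    {"movementid": "m-2", "product": "widget", "quantity": -2},  # duplicate delivery
]


def apply_movements(stream):
    """Non-idempotent consumer: every event adjusts the stock level, duplicates included."""
    stock = defaultdict(int)
    for event in stream:
        stock[event["product"]] += event["quantity"]
    return dict(stock)


def drop_duplicates(stream):
    """Simplified filter: keep only the first event seen for each movementid."""
    seen = set()
    for event in stream:
        if event["movementid"] not in seen:
            seen.add(event["movementid"])
            yield event


print(apply_movements(events))                   # {'widget': 1}  (wrong: m-2 counted twice)
print(apply_movements(drop_duplicates(events)))  # {'widget': 3}
```

Removing duplicates upstream, as this tutorial does, lets consumers like this one stay simple instead of each tracking which events they have already applied.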

Tip: To learn more about deduplication, see the Apache Flink documentation.

Deduplicate node

You can remove duplicate events by using the deduplicate node, which provides a simpler, built-in way to remove duplicates without having to write the SQL yourself.

Before you begin

The instructions in this tutorial use the Tutorial environment, which includes a selection of topics each with a live stream of events, created to allow you to explore features in IBM Event Automation. Following the setup instructions to deploy the demo environment gives you a complete instance of IBM Event Automation that you can use to follow this tutorial for yourself.

Versions

This tutorial uses the following versions of Event Automation capabilities. Screenshots may differ from the current interface if you are using a newer version.

- Event Streams 12.1.0

- Event Endpoint Management 11.6.4

- Event Processing 1.4.6

Instructions

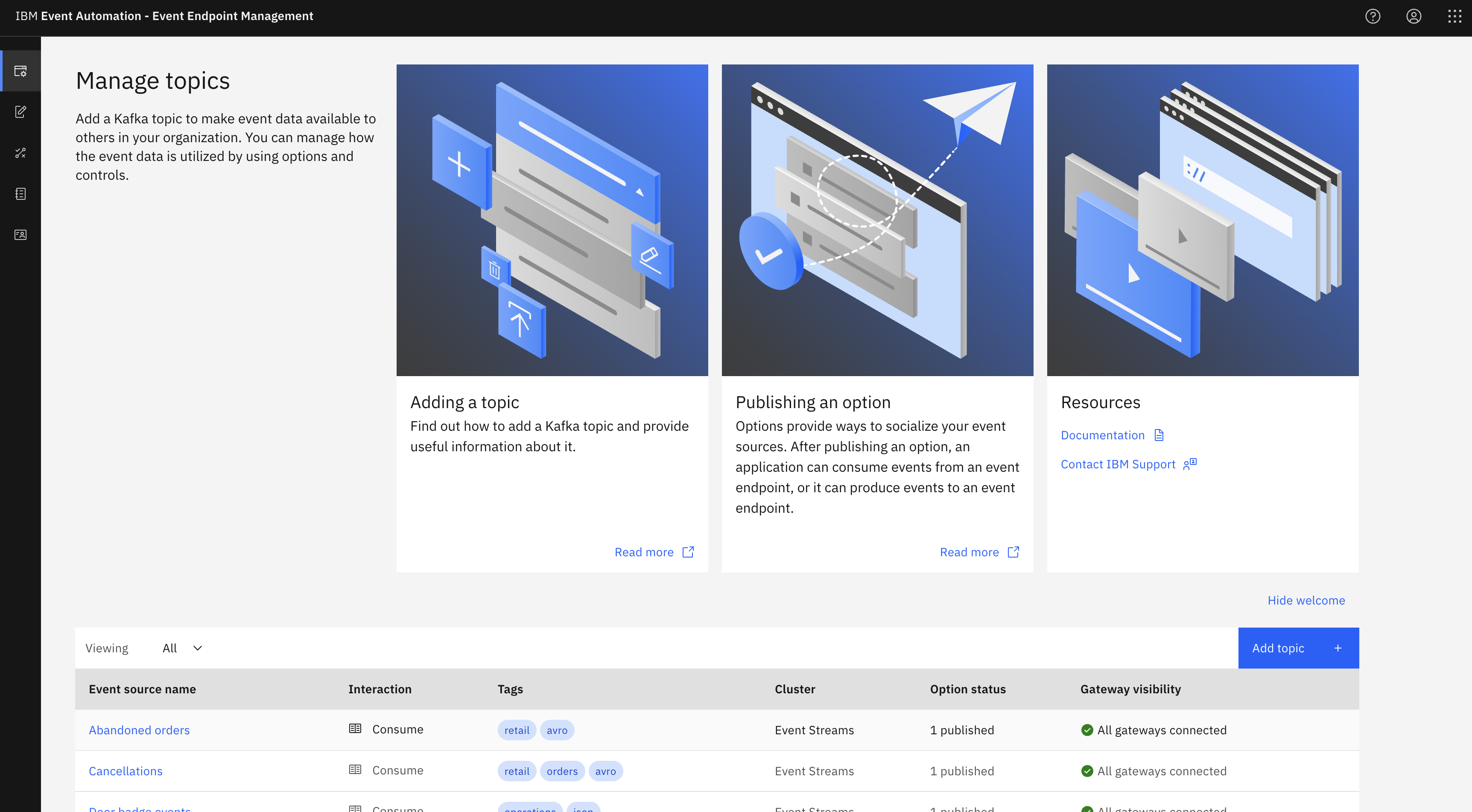

Step 1: Discover the source topic to use

For this scenario, you are processing an existing stream of events. You will start by identifying the topic.

- Go to the Event Endpoint Management catalog.

If you need a reminder about how to access the Event Endpoint Management catalog, you can review Accessing the tutorial environment.

If there are no topics in the catalog, you might need to complete the tutorial setup step to populate the catalog.

-

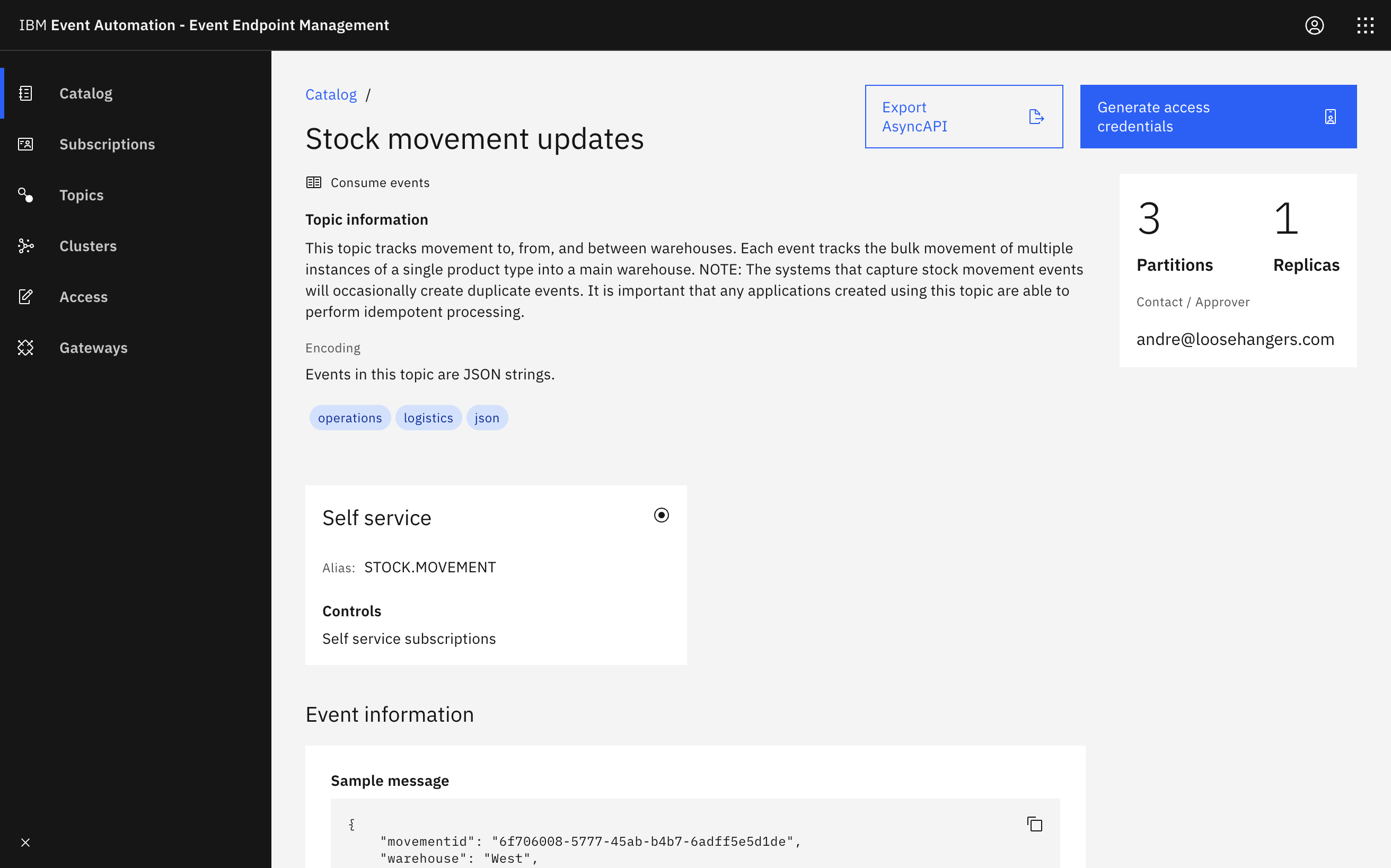

The

Stock movement updatestopic contains the events used in this tutorial.Tip: Notice that the topic information describes the issue that we are addressing in this tutorial. Documenting potential issues and considerations for using topics is essential for enabling effective reuse.

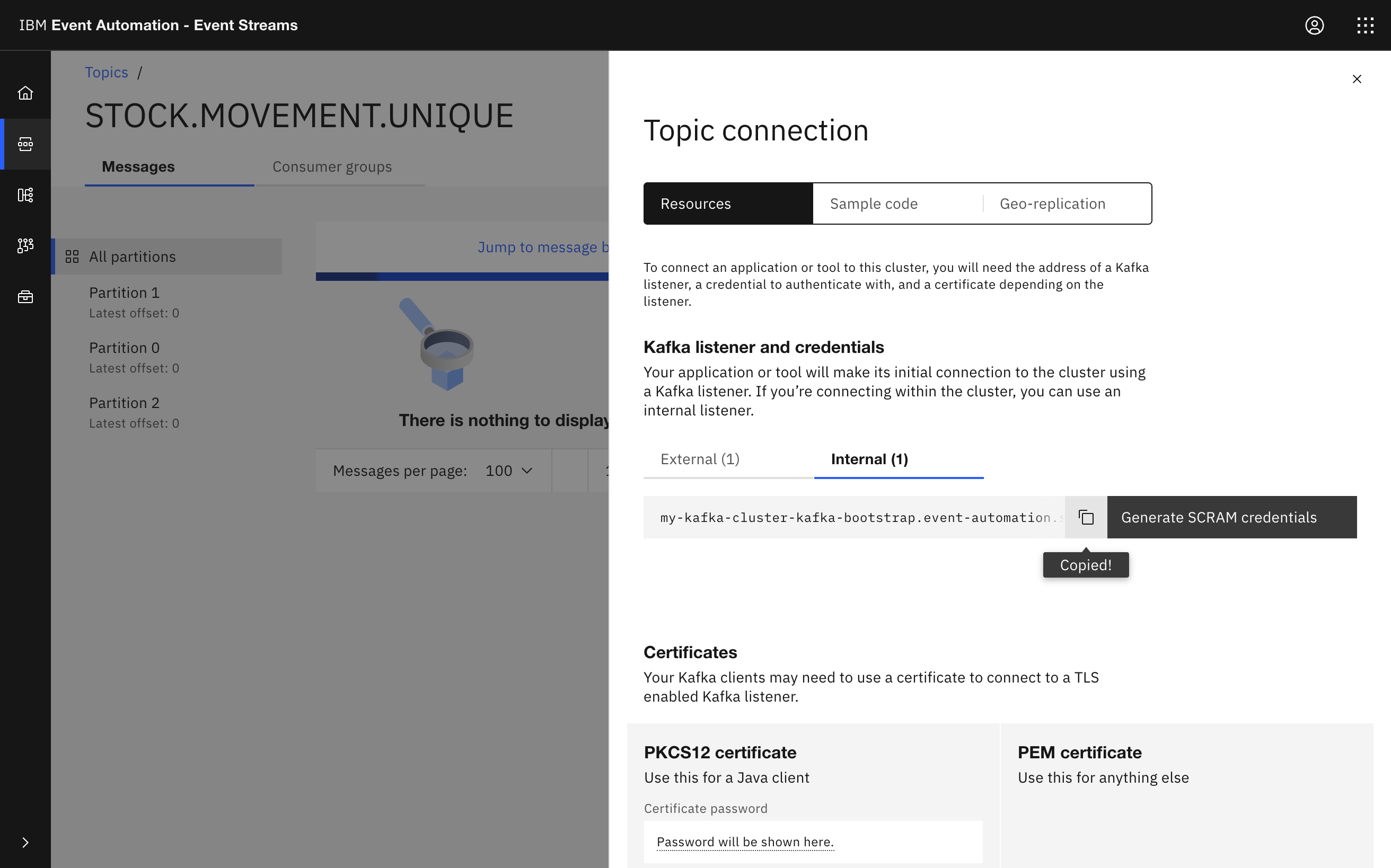

Step 2: Create a topic for the deduplicated events

You can configure a processing flow to write the deduplicated events to a separate topic, which you can then use as a source for systems that are unable to process events idempotently.

The next step is to create this topic.

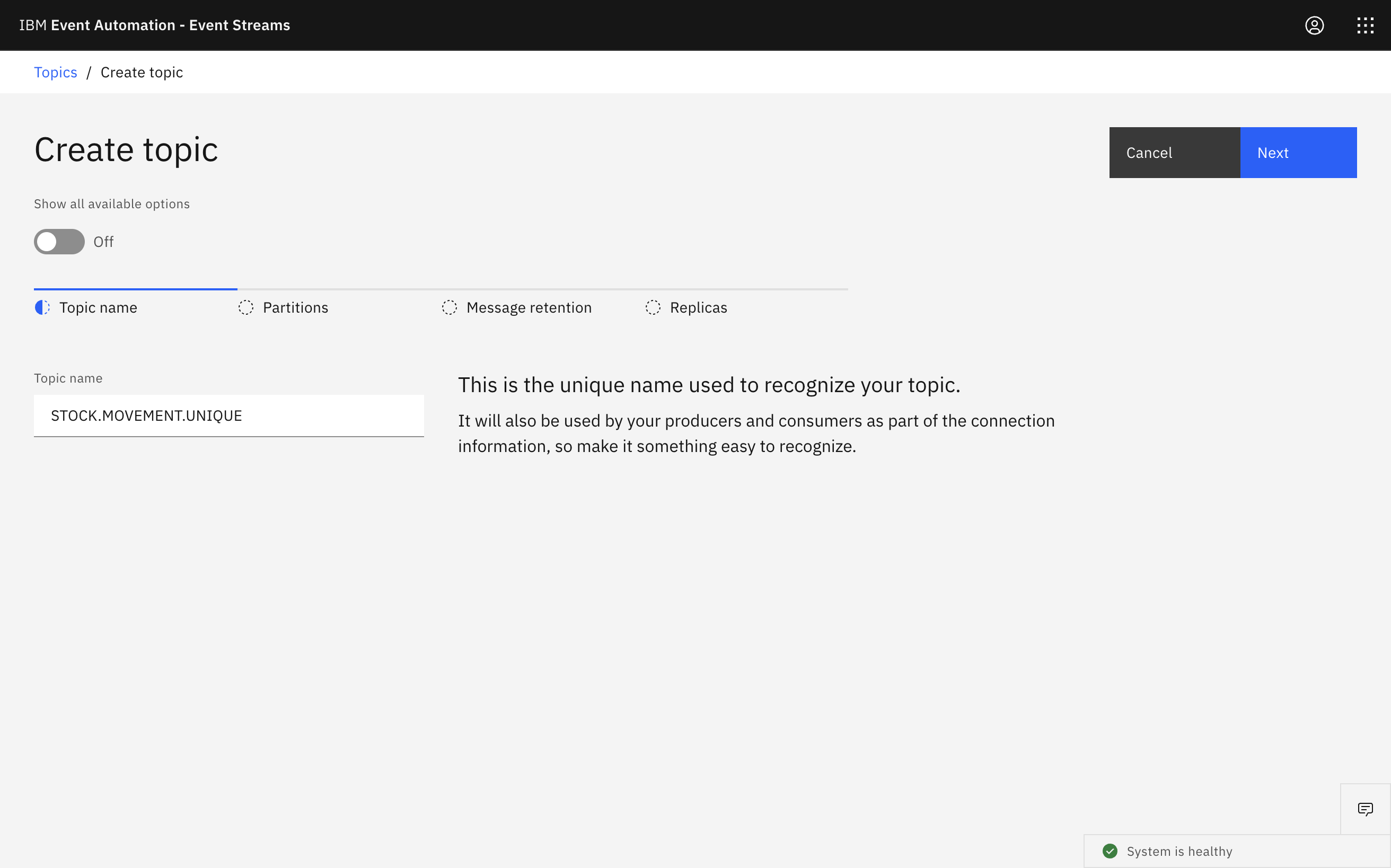

- Go to the Event Streams topics manager.

If you need a reminder about how to access the Event Streams web UI, you can review Accessing the tutorial environment.

- Create a topic called STOCK.MOVEMENT.UNIQUE.

- Create the topic with 3 partitions to match the STOCK.MOVEMENT source topic.

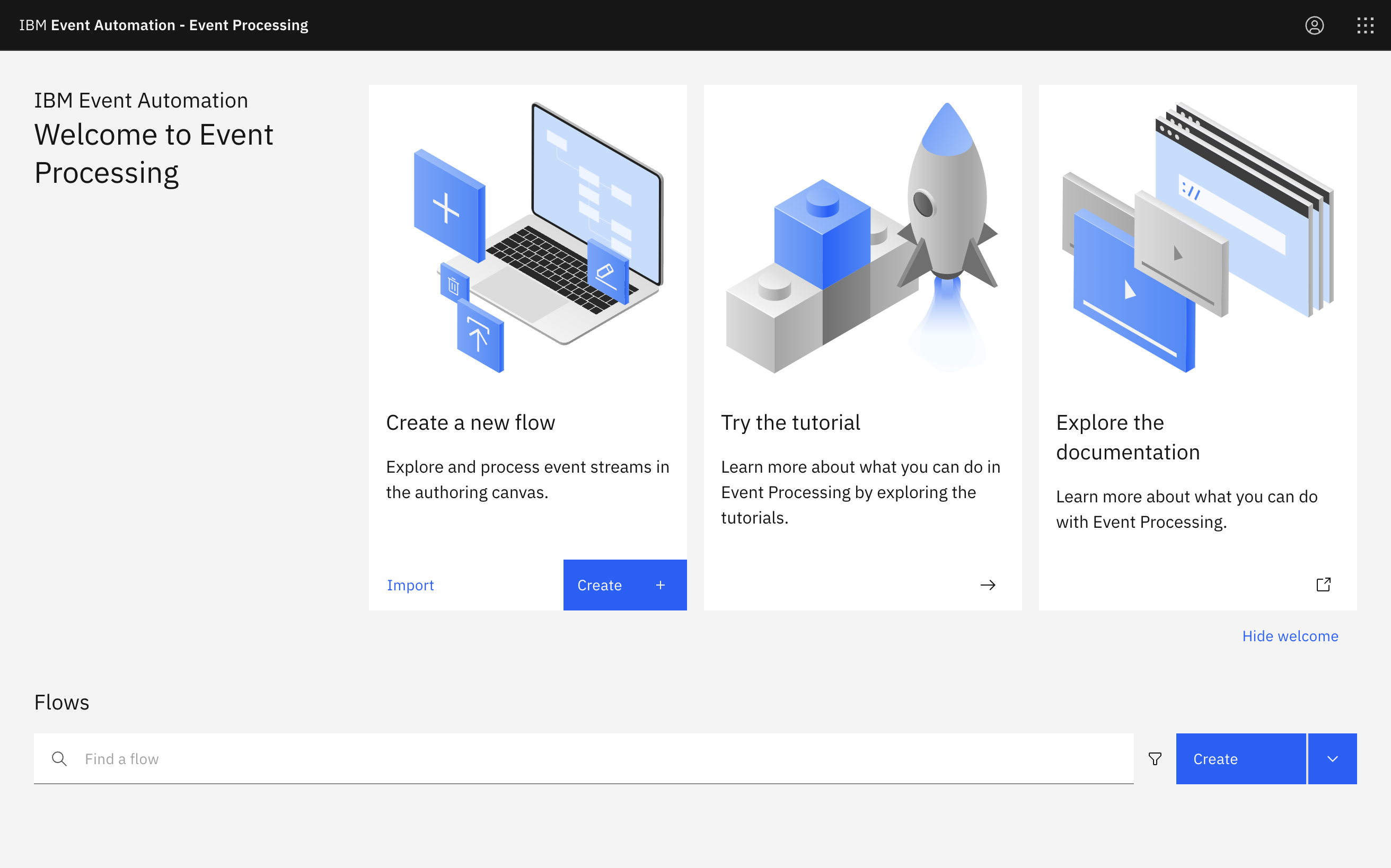

Step 3: Create a processing flow

The Event Processing authoring UI makes it easy to start new projects. Although you can also write Flink SQL directly in the Event Processing UI, this tutorial uses the deduplicate node instead.

- Go to the Event Processing home page.

If you need a reminder about how to access the Event Processing home page, you can review Accessing the tutorial environment.

- Create a flow, and give it a name and description to explain that you will use it to deduplicate the events on the stock movements topic.

Step 4: Provide a source of events

The next step is to bring the stream of events to process into the flow.

- Update the Event source node.

Hover over the node and click Edit to configure the node.

- Use the server address information and the Generate access credentials button on the Stock movement updates topic page in the Event Endpoint Management catalog from Step 1 to configure the event source node.

- Select the STOCK.MOVEMENT topic.

- The message format is auto-selected and the sample message is auto-populated in the Message format pane.

- Enter the node name as Stock movements.

Tip: If you need a reminder about how to configure an event source node, you can follow the Filter events based on particular properties tutorial.

Step 5: Deduplicate events with the deduplicate node

You can use the deduplicate node, which produces an append-only stream, to remove duplicate events.

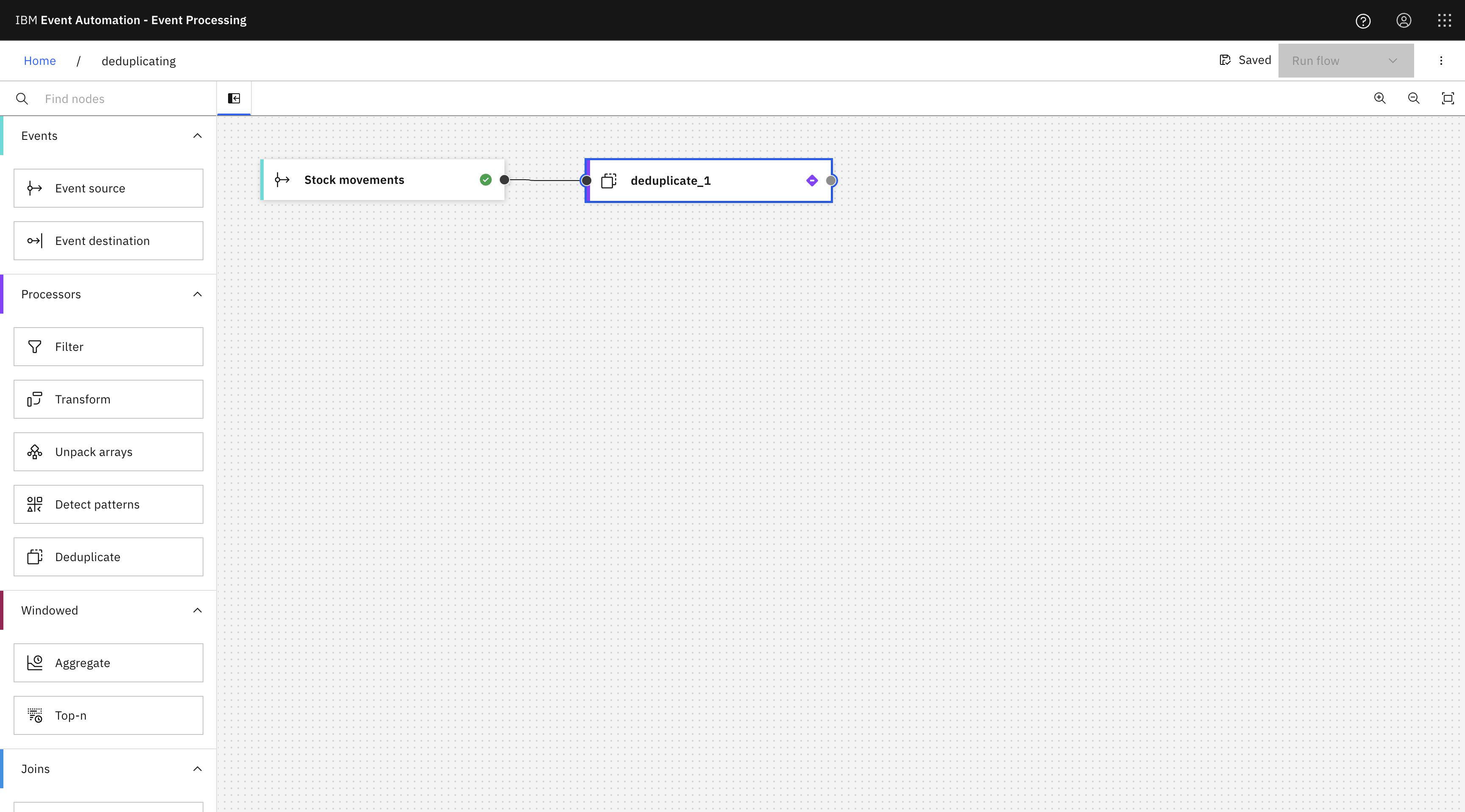

- Drag a deduplicate node onto the canvas and connect it to the Stock movements node. You can find the deduplicate node in the Processors section of the left panel.

Hover over the deduplicate node, and click

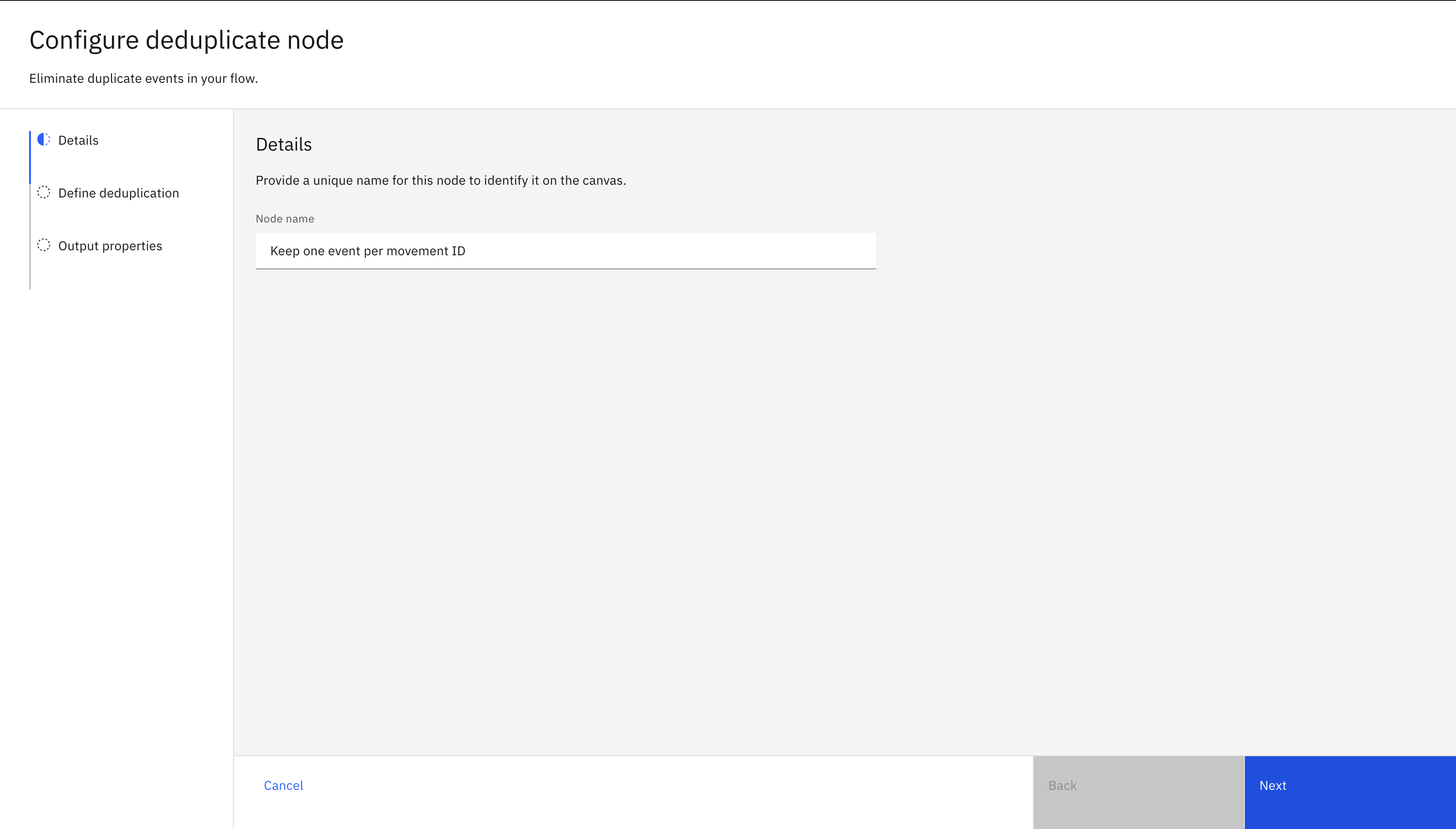

Edit to configure the node.

- In the Details pane, enter Keep one event per movement ID as the node name. Click Next.

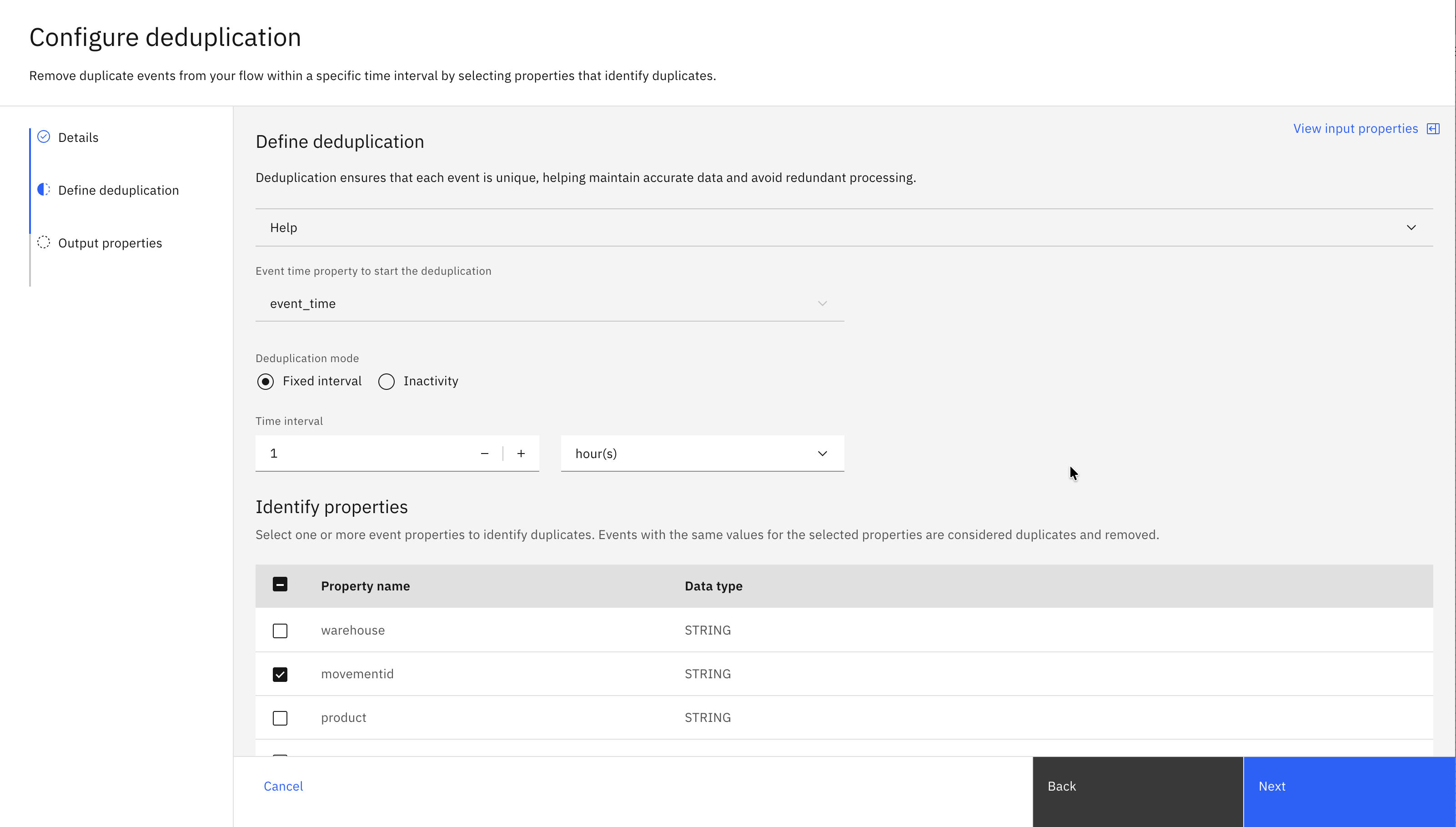

- In the Define deduplication pane, the event_time property is automatically selected because the input contains only a single event time property. This property is used to determine the order of events when identifying duplicates.

- For Deduplication mode, select Fixed interval to remove duplicates within a fixed window that starts from the first event.

- In the Time interval field, specify a time interval for deduplication.

For example, with a time interval of one hour, only the first event for each value of the key property is kept within that hour, and any duplicates are discarded. After the interval ends, the deduplication cycle starts again.

- From the Identify properties table, select the movementid property, which uniquely identifies each stock movement. Click Next.

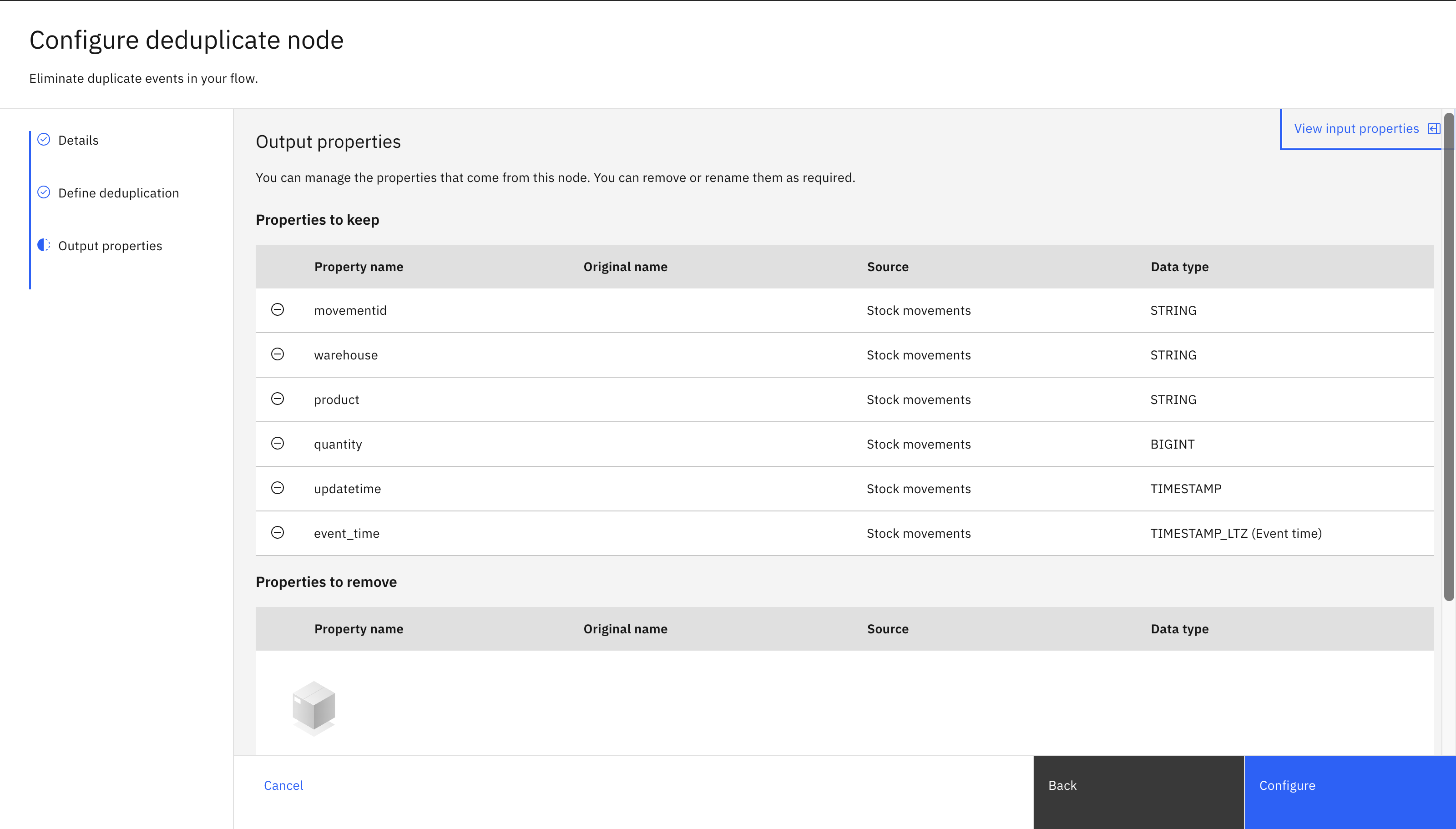

- In the Output properties pane, you can choose the output properties that are useful to return.

- Click Configure to finalize the deduplicate node.

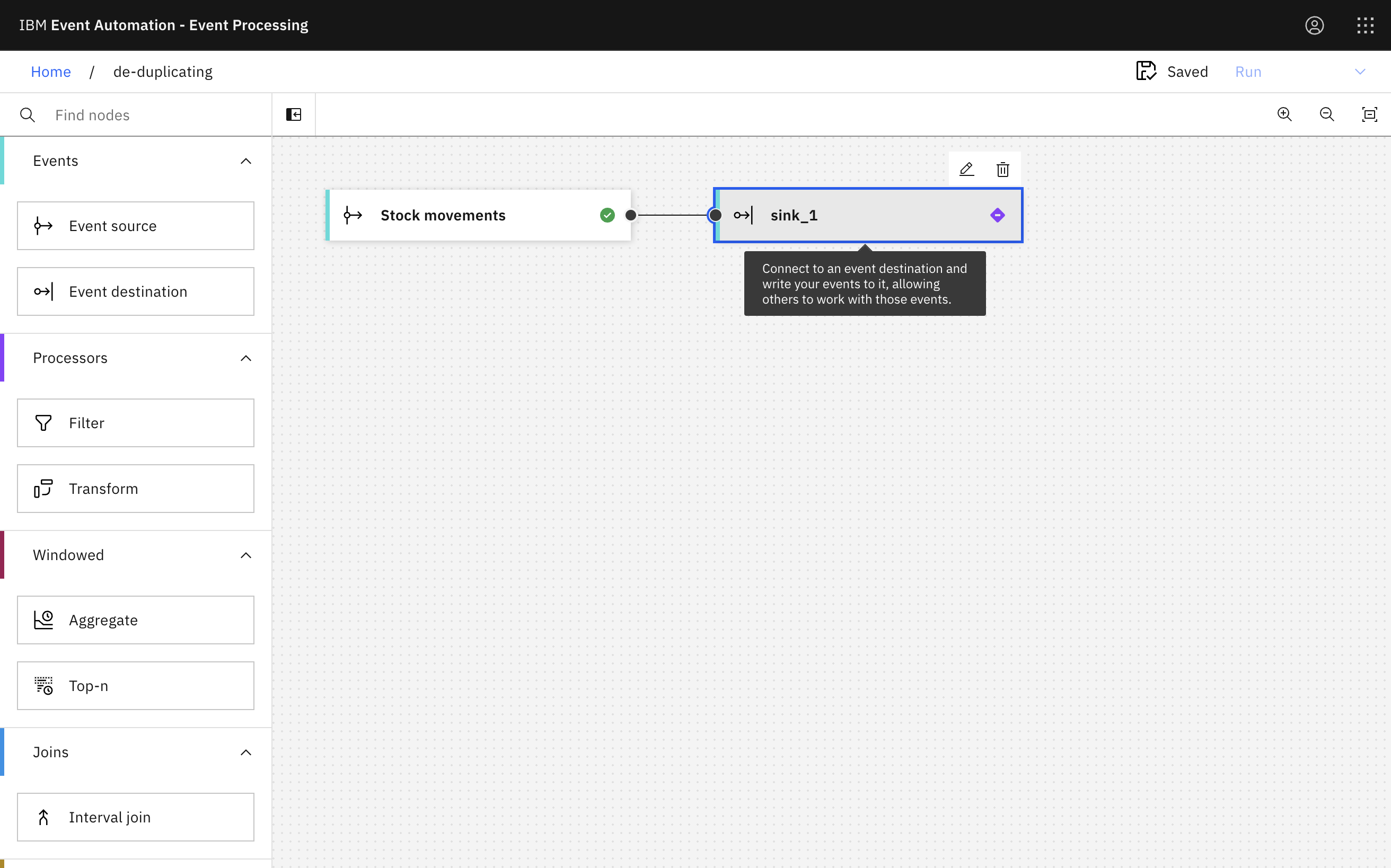

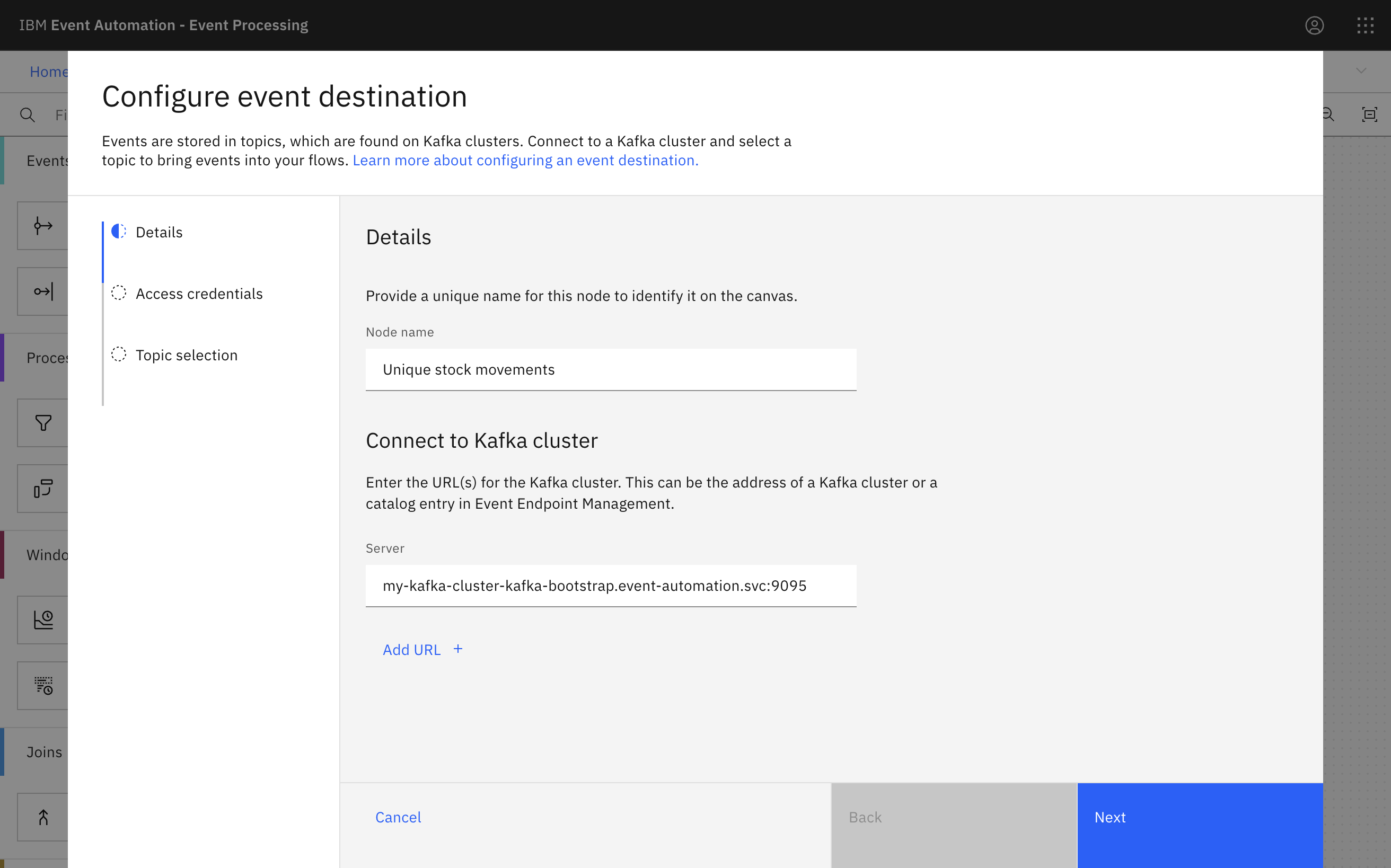
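The Fixed interval behavior described in this step can be approximated in a few lines of Python. This is a minimal sketch, not how Event Processing or Flink implements the node. It assumes that each key value (here, `movementid`) has its own window, opened by the first event with that value, and that events are handled in `event_time` order. The function name `dedupe_fixed_interval` and the sample events are hypothetical.

```python
from datetime import datetime, timedelta


def dedupe_fixed_interval(events, key, time_field, interval):
    """Keep the first event per key value within a fixed interval.

    Assumptions (not confirmed by the product documentation):
    - each key value has its own window, opened by the first event with that value
    - events are ordered by their event time before deduplication
    """
    window_start = {}  # key value -> event time that opened its current window
    kept = []
    for event in sorted(events, key=lambda e: e[time_field]):
        k = event[key]
        t = event[time_field]
        start = window_start.get(k)
        if start is None or t >= start + interval:
            # First event for this key, or the previous window has ended:
            # keep the event and start a new deduplication cycle.
            window_start[k] = t
            kept.append(event)
        # Otherwise the event is a duplicate inside the current window and is dropped.
    return kept


# Hypothetical sample events.
base = datetime(2024, 1, 1, 9, 0)
events = [
    {"movementid": "m-1", "event_time": base},
    {"movementid": "m-1", "event_time": base + timedelta(minutes=10)},  # duplicate
    {"movementid": "m-2", "event_time": base + timedelta(minutes=20)},
    {"movementid": "m-1", "event_time": base + timedelta(minutes=70)},  # new cycle
]

result = dedupe_fixed_interval(events, "movementid", "event_time", timedelta(hours=1))
for e in result:
    print(e["movementid"], e["event_time"].time())
```

With a one-hour interval, the second `m-1` event (10 minutes after the first) is dropped, while the `m-1` event at 70 minutes falls outside the first window and starts a new cycle, so three events are kept. Because a kept event is never revised or retracted later, the output only grows, which is consistent with an append-only stream. A real streaming implementation would also need to expire the per-key state, which this sketch omits.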

Step 6: Write your events to a Kafka topic

The results must be written to a different Kafka topic. You can use the event destination node to write your events to a Kafka topic.

- Create an event destination node by dragging one onto the canvas. You can find this node in the Events section of the left panel.

- Enter the node name as Unique stock movements.

- Configure the event destination node by using the internal server address from Event Streams.

- Use the username and password for the kafka-demo-apps user to access the new topic.

If you need a reminder of the password for the kafka-demo-apps user, you can review the Accessing Kafka topics section of the Tutorial Setup instructions.

- Choose the STOCK.MOVEMENT.UNIQUE topic that you created in Step 2.

- Click Configure to finalize the event destination node.

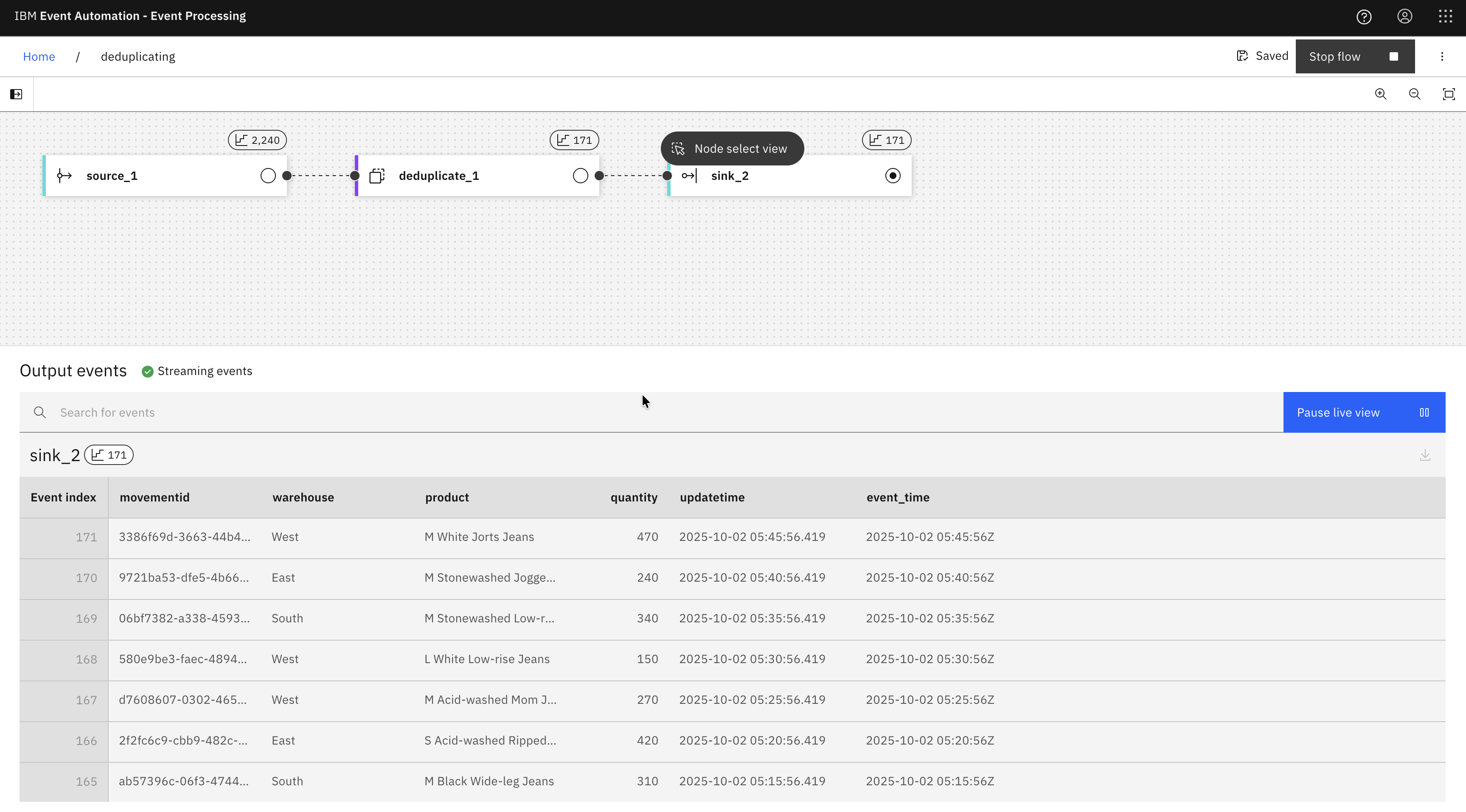

Step 7: Test the flow

The next step is to run your event processing flow and view the deduplicated events:

Use the Run menu, and select Include historical to run your flow on the history of stock movement events available on the STOCK.MOVEMENT topic.

A live view of results from your flow is updated as new events are emitted onto the STOCK.MOVEMENT.UNIQUE topic.

The running flow continuously processes and deduplicates new events as they are produced to the STOCK.MOVEMENT topic.

Step 8: Confirm the results

You can verify the results by examining the destination topic.

-

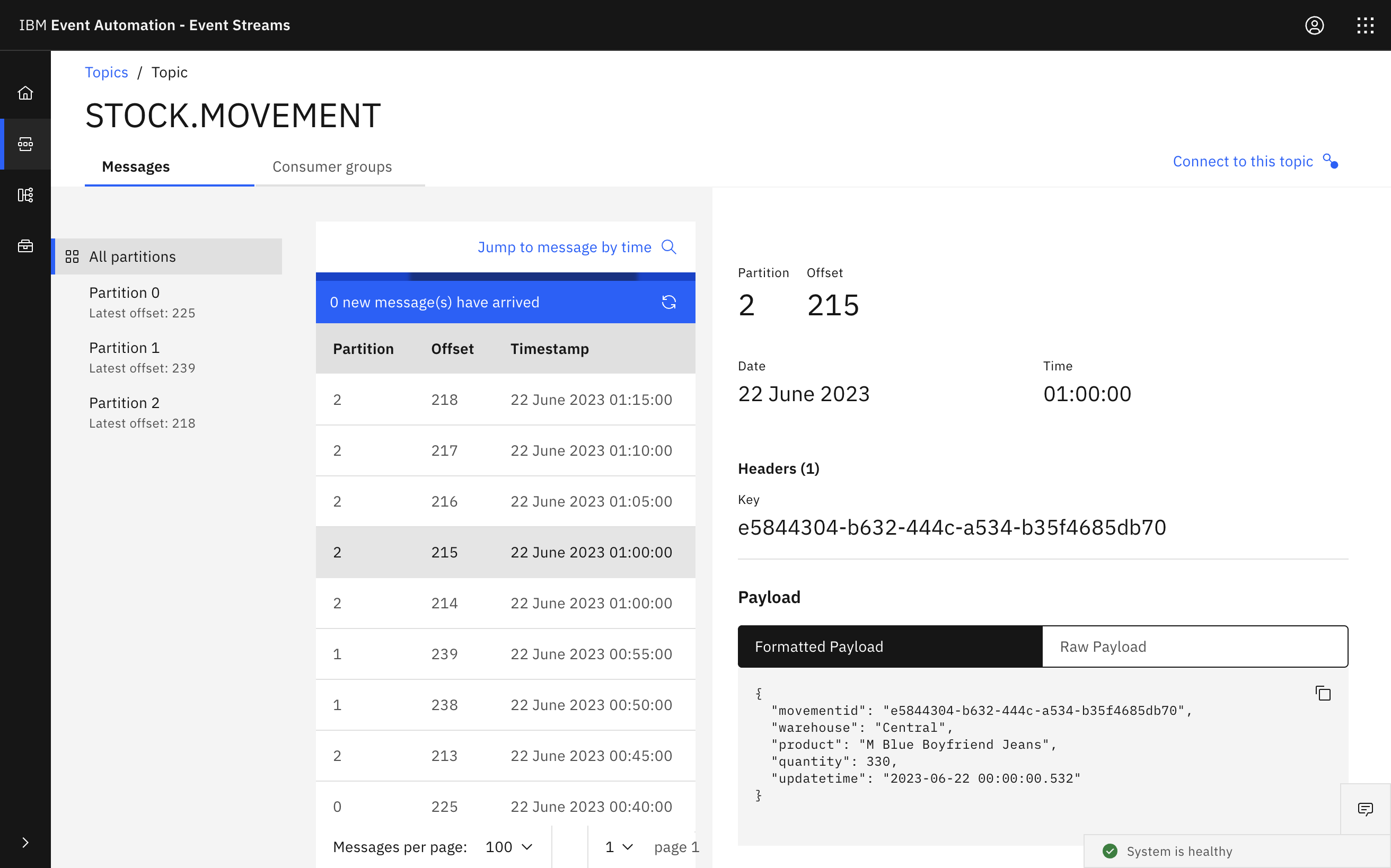

View the original events on the

STOCK.MOVEMENTtopic. -

Use the timestamp to identify a duplicate event.

Approximately one in ten of the events on this topic are duplicated, so looking through ten or so messages should be enough to find an example.

-

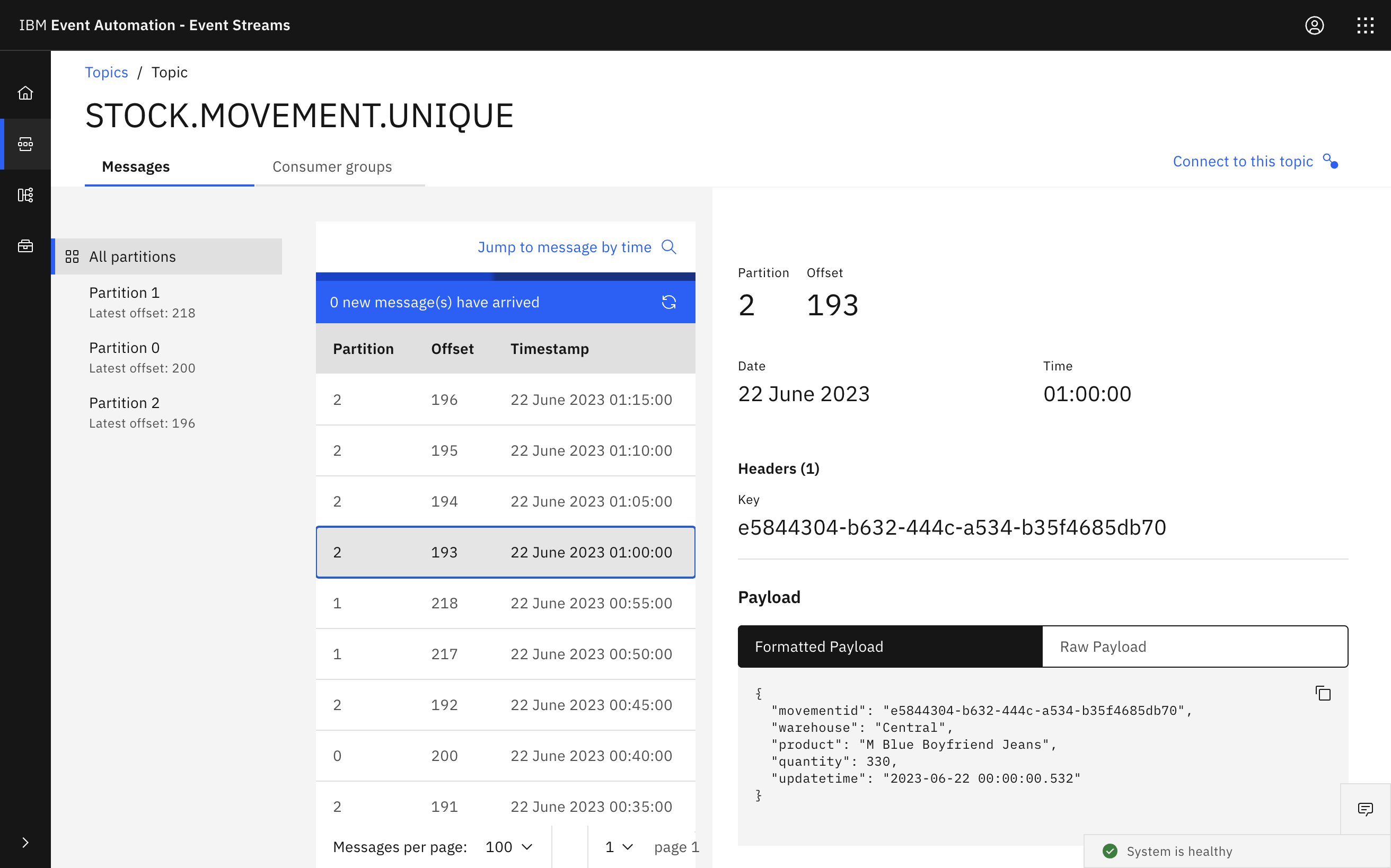

Examine the event with the same timestamp on the

STOCK.MOVEMENT.UNIQUEtopic.You can see that there is only a single event with that timestamp and contents on the destination topic, as the duplicate event was filtered out.
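The manual check in this step can also be scripted. The following minimal Python sketch assumes that you have already read the messages from each topic into lists of dictionaries (reading from Kafka is not shown), and it treats two events as duplicates when their full contents, including the timestamp, are identical. The `find_duplicates` helper and the sample events, including the `event_time` and `quantity` fields, are hypothetical.

```python
import json
from collections import Counter


def find_duplicates(events):
    """Return the events that appear more than once, compared by their full contents."""
    # Serialize with sorted keys so that field order does not affect the comparison.
    counts = Counter(json.dumps(e, sort_keys=True) for e in events)
    return [json.loads(text) for text, n in counts.items() if n > 1]


# Hypothetical samples standing in for messages read from each topic.
source = [
    {"movementid": "m-1", "event_time": "2024-01-01 09:00:00", "quantity": 5},
    {"movementid": "m-2", "event_time": "2024-01-01 09:05:00", "quantity": -2},
    {"movementid": "m-2", "event_time": "2024-01-01 09:05:00", "quantity": -2},
]
unique = [source[0], source[1]]

dupes = find_duplicates(source)
print("duplicates on source topic:", dupes)
print("duplicates on destination topic:", find_duplicates(unique))

# Each duplicate found on the source topic should appear exactly once on the destination topic.
for d in dupes:
    assert sum(1 for e in unique if e == d) == 1
```

Comparing full contents rather than only the timestamp mirrors the check described above, where the duplicate has both the same timestamp and the same contents.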

You can use this new deduplicated topic as the source for an Event Processing flow, or any other Kafka application.

Recap

You have created a processing flow that uses the deduplicate node to preprocess the events on a Kafka topic. The results are written to a different Kafka topic.