Aggregate

Aggregates enable you to process events over a time-window. This enables a summary view of a situation that can be useful to identify overall trends.

Transform

When processing events we can modify events to create additional properties, which are derived from the event. Transforms work on individual events in the stream.

Scenario: Track how many products of each type are sold per hour

In this scenario, we total the units sold of each product type in each hourly window, which lets us identify the type that has sold the most. This could be used to drive a constantly updating view of “Trending Products”.

Before you begin

The instructions in this tutorial use the Tutorial environment, which includes a selection of topics each with a live stream of events, created to allow you to explore features in IBM Event Automation. Following the setup instructions to deploy the demo environment gives you a complete instance of IBM Event Automation that you can use to follow this tutorial for yourself.

Versions

This tutorial uses the following versions of Event Automation capabilities. Screenshots may differ from the current interface if you are using a newer version.

- Event Streams 12.1.0

- Event Endpoint Management 11.6.4

- Event Processing 1.4.6

Instructions

Step 1: Create a flow

-

Go to the Event Processing home page.

If you need a reminder of how to access the Event Processing home page, you can review Accessing the tutorial environment.

-

Create a flow, and give it a name and description that explain that you will use it to track how many products of each type are sold.

Step 2: Provide a source of events

The next step is to bring the stream of events to process into the flow. We will reuse the topic connection information from an earlier tutorial.

-

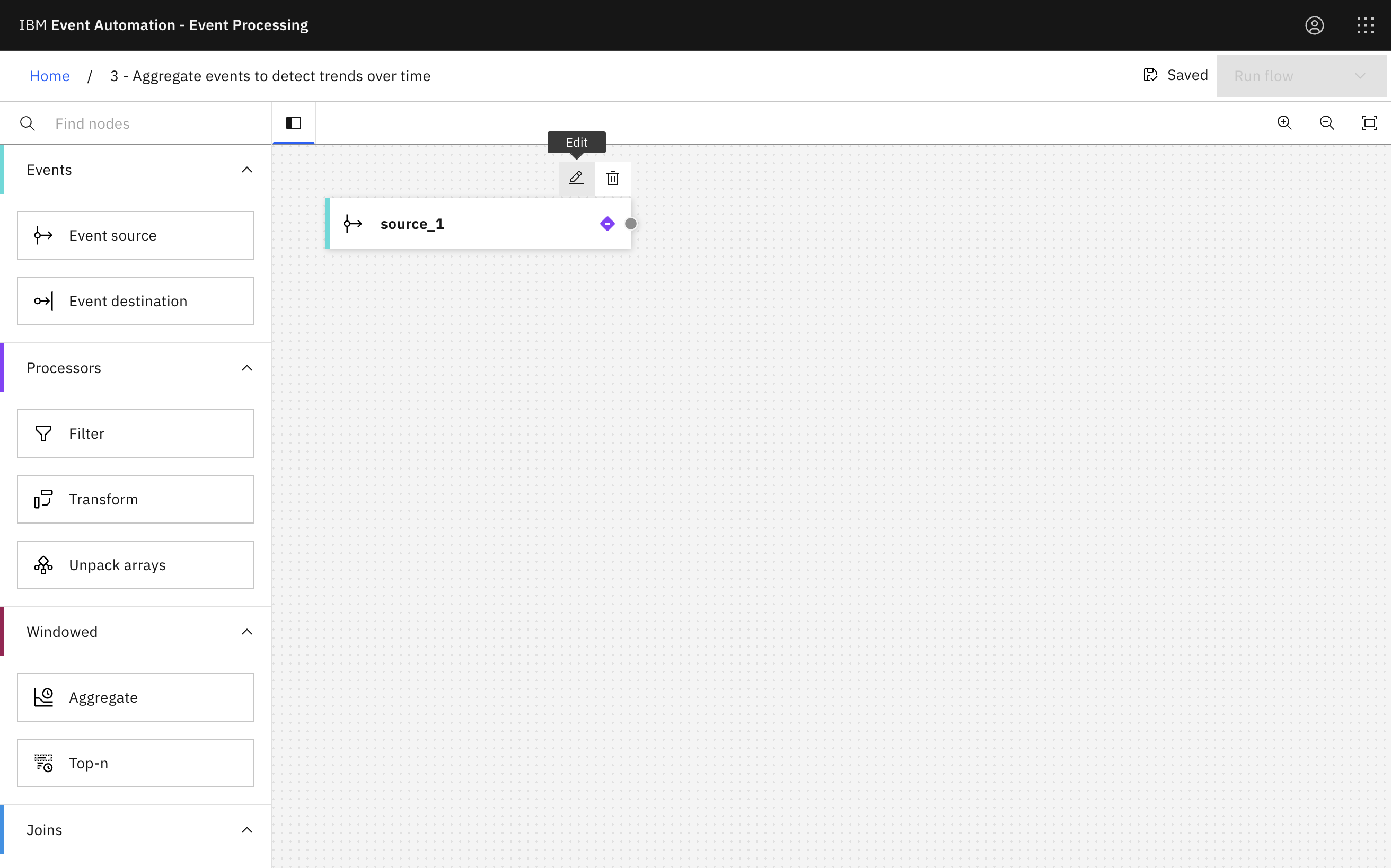

Update the Event source node.

Hover over the node and click Edit to configure the node.

-

Choose the ORDERS topic that you used in the Identify orders from a specific region tutorial.

Tip: If you haven’t followed that tutorial, you can click Add new event source instead, and follow the Provide a source of events steps in the previous tutorial to define a new Event source from scratch.

Click Next.

-

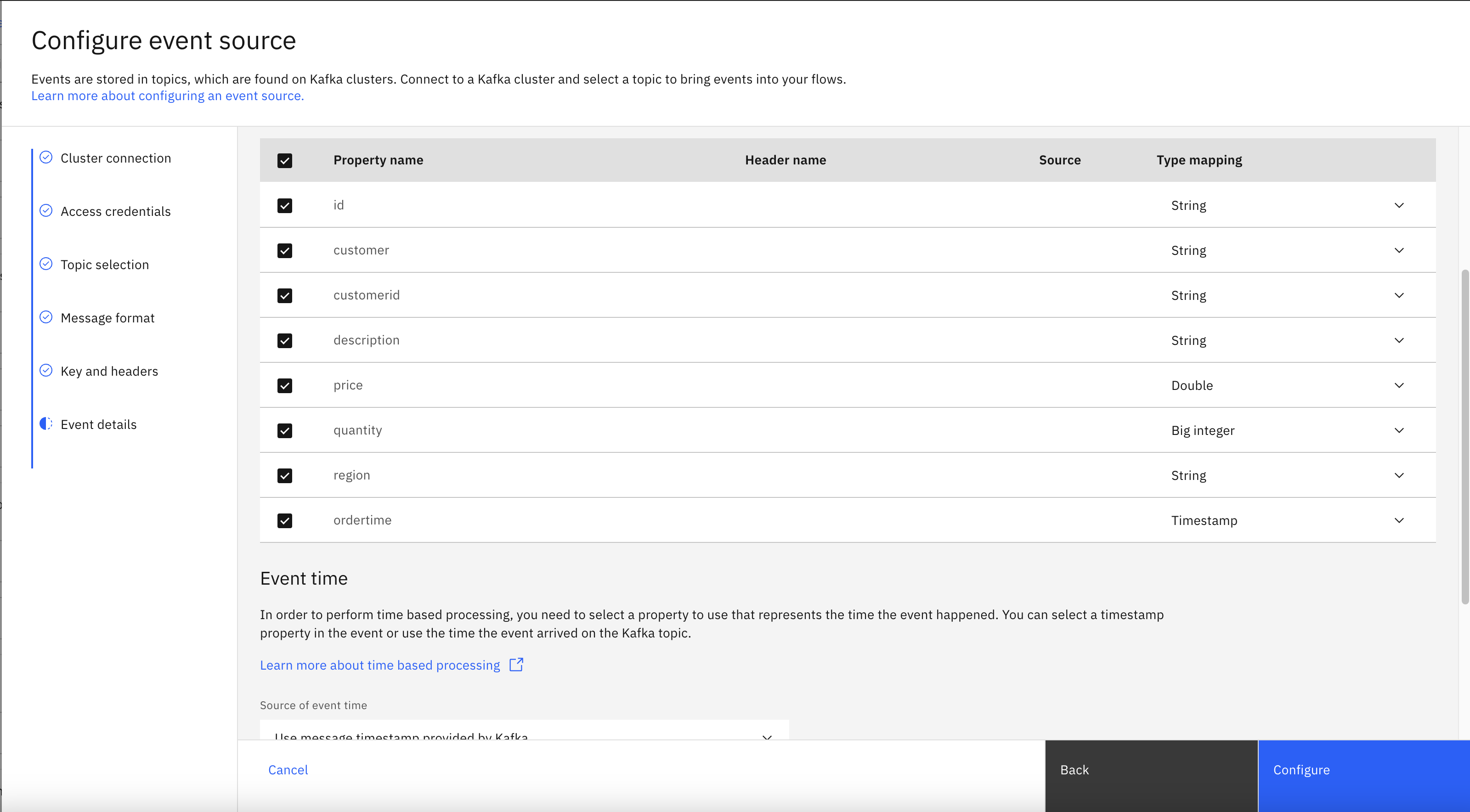

In the Event details pane, the schema that was previously defined for events on this topic is displayed.

Click Configure.

Step 3: Extract product type from events

The product description value in the events includes several attributes of the jeans that are sold: the size, material, and style. We would like to aggregate the data based on this information. These attributes are combined into a single string in a consistent way, which means we can extract them using regular expressions.

In the next step, we extract the product type into a separate property so that we can use it to filter and aggregate events later in the flow.

-

Add a Transform node and link it to your event source.

Create a transform node by dragging one onto the canvas. You can find this in the Processors section of the left panel.

Click and drag from the small gray dot on the event source to the matching dot on the transform node.

-

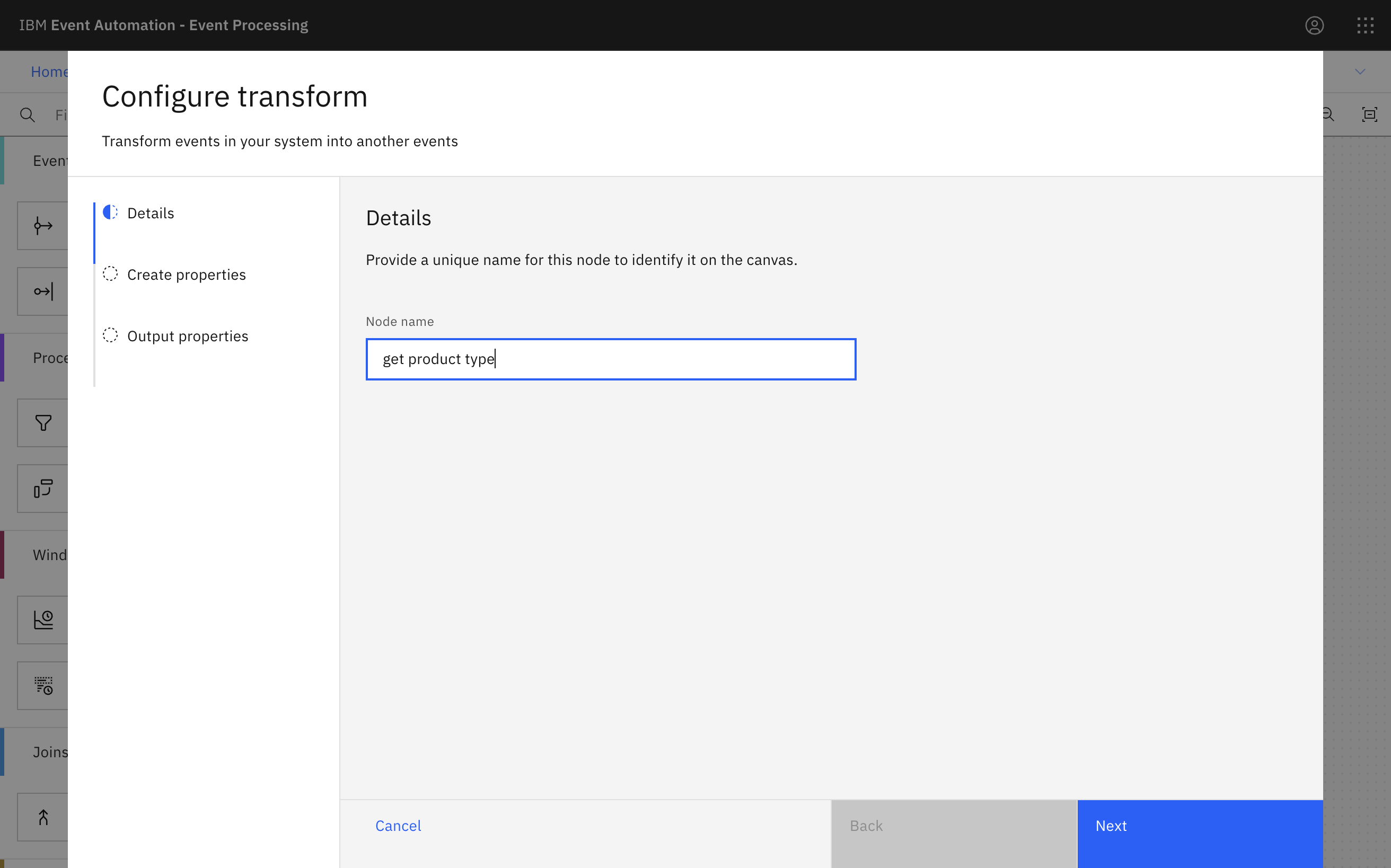

Give the transform node a name that describes what it will do: get product type.

Hover over the transform node and click Edit to configure the node.

-

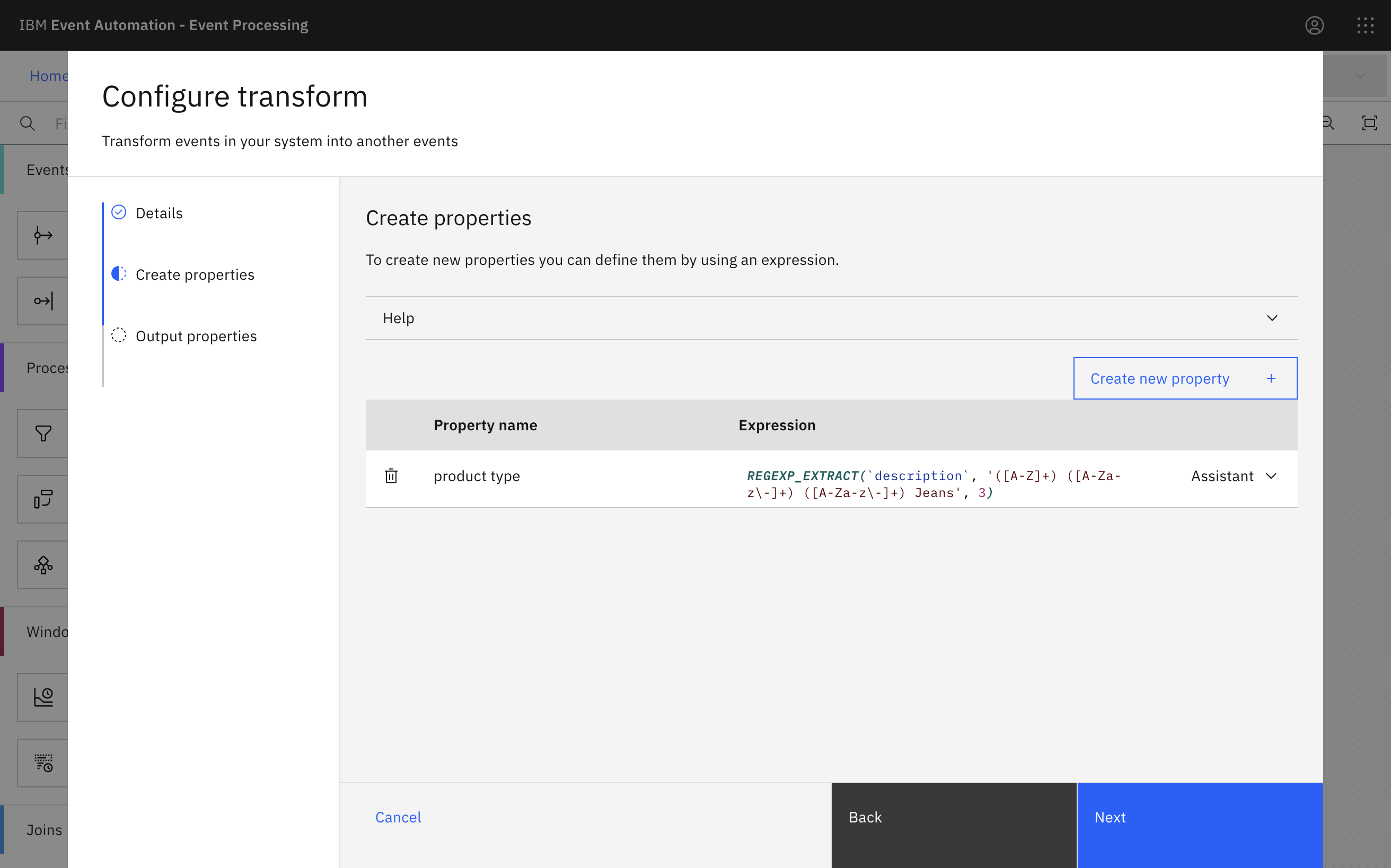

Add a new property for the product type that you will generate with a regular expression.

Click Create new property.

Name the property

product type.Use the assistant to choose the

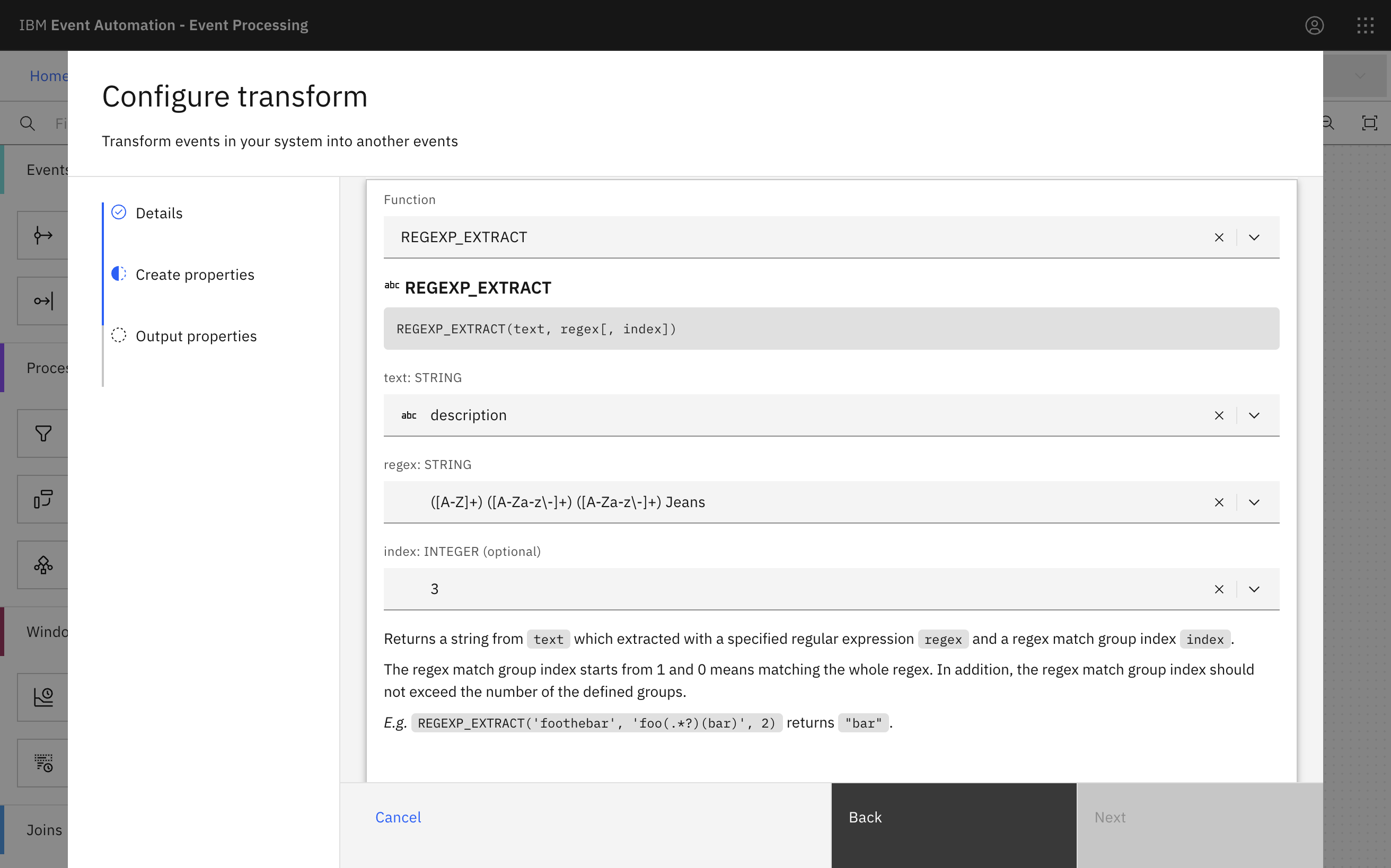

REGEXP_EXTRACTfunction from the list.Did you know? The

REGEXP_EXTRACTfunction allows you to extract data from a text property using regular expressions. -

Define the regular expression that extracts the product type from the description.

Product descriptions are all made up of four words.

Some examples:

XXS Navy Cargo Jeans

M Stonewashed Capri Jeans

XL White Bootcut Jeans

S Acid-washed Jogger Jeans

Each word contains similar information in each description:

word 1: Size. This is made up of one-or-more uppercase letters.

word 2: Material or color, made up of a mixed-case word, optionally with a hyphen.

word 3: The type of jeans, made up of a mixed-case word, optionally with a hyphen.

word 4: The text “Jeans”.

Create a regular expression that extracts the third word from the description text, by filling in the assistant form with the following values:

text : description

This identifies which property in the order events contains the text that you want to apply the regular expression to.

regex : ([A-Z]+) ([A-Za-z\-]+) ([A-Za-z\-]+) Jeans

This matches the description format shown above, with one part for each of the four words that every description contains.

index : 3

This specifies that you want the new product type property to contain the third word in the description.

-

Click Insert into expression to complete the assistant.

-

As you aren’t modifying existing properties, click Next.

-

Click Configure to finalize the transform.

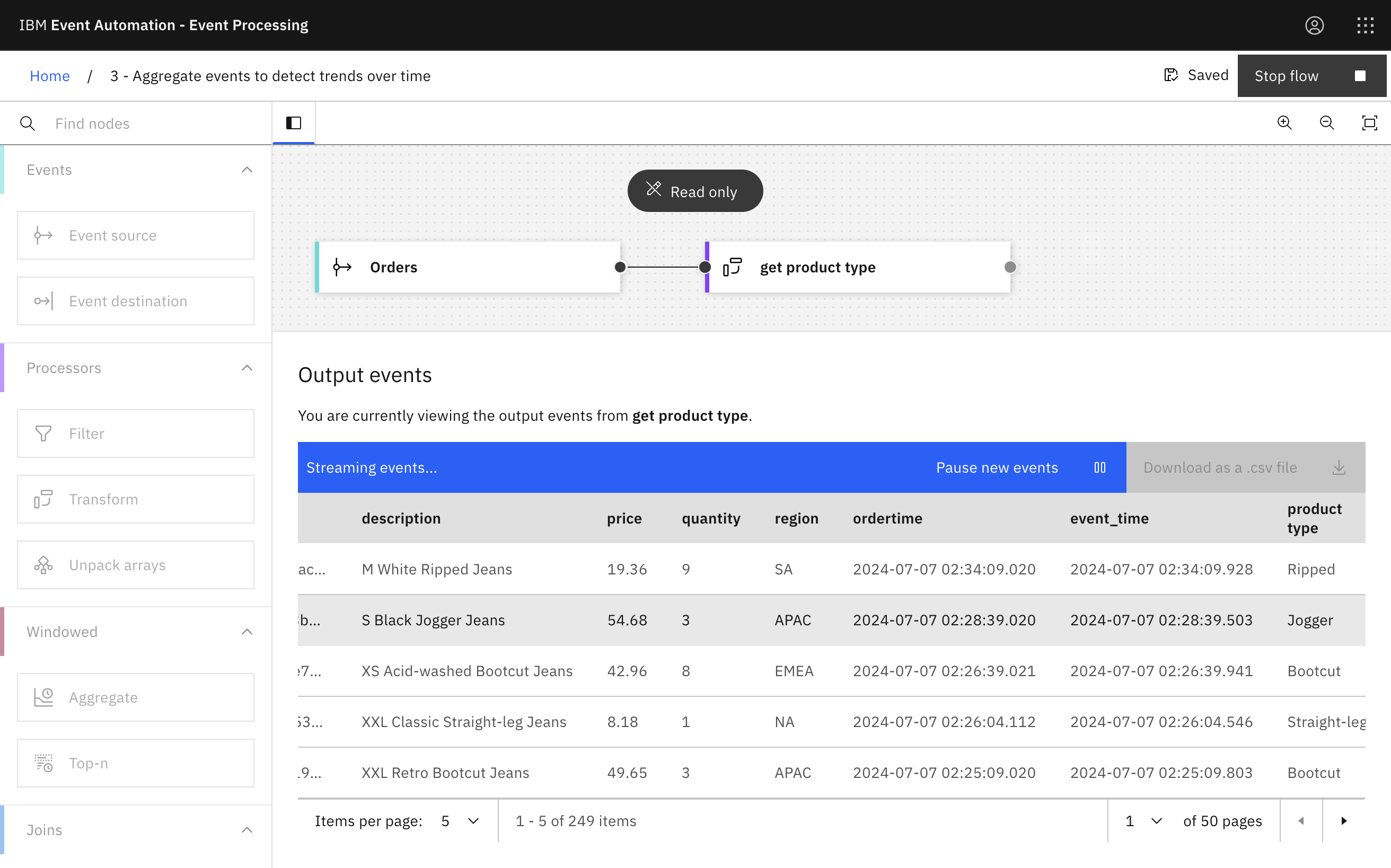
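To check the regular expression outside the tool, the following is a minimal sketch in Python that applies the same pattern to the example descriptions above. It assumes Python's re module as a stand-in for the REGEXP_EXTRACT function, so treat it as an approximation of the behavior and not the product's implementation. The function name extract_product_type is hypothetical.

```python
import re

# Pattern from the tutorial: size, material or color, type of jeans,
# followed by the literal text "Jeans".
PATTERN = re.compile(r"([A-Z]+) ([A-Za-z\-]+) ([A-Za-z\-]+) Jeans")


def extract_product_type(description):
    """Return capture group 3 (the third word), or None if there is no match.

    Hypothetical helper; it only approximates what REGEXP_EXTRACT does
    with index 3.
    """
    match = PATTERN.search(description)
    return match.group(3) if match else None


examples = [
    "XXS Navy Cargo Jeans",
    "M Stonewashed Capri Jeans",
    "XL White Bootcut Jeans",
    "S Acid-washed Jogger Jeans",
]

for description in examples:
    print(description, "->", extract_product_type(description))
```

Each capture group corresponds to one word of the description, so changing the index to 1 or 2 would return the size or the material instead.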

Step 4: Test the flow

The next step is to test your event processing flow and view the results.

-

Use the Run menu, and select Include historical to run your flow on the history of order events available on this Kafka topic.

Tip: It is good to regularly test as you develop your event processing flow to confirm that the last node you have added is doing what you expected.

Note that the new property for product type is populated with the data extracted from the description property.

-

When you have finished reviewing the results, you can stop this flow.

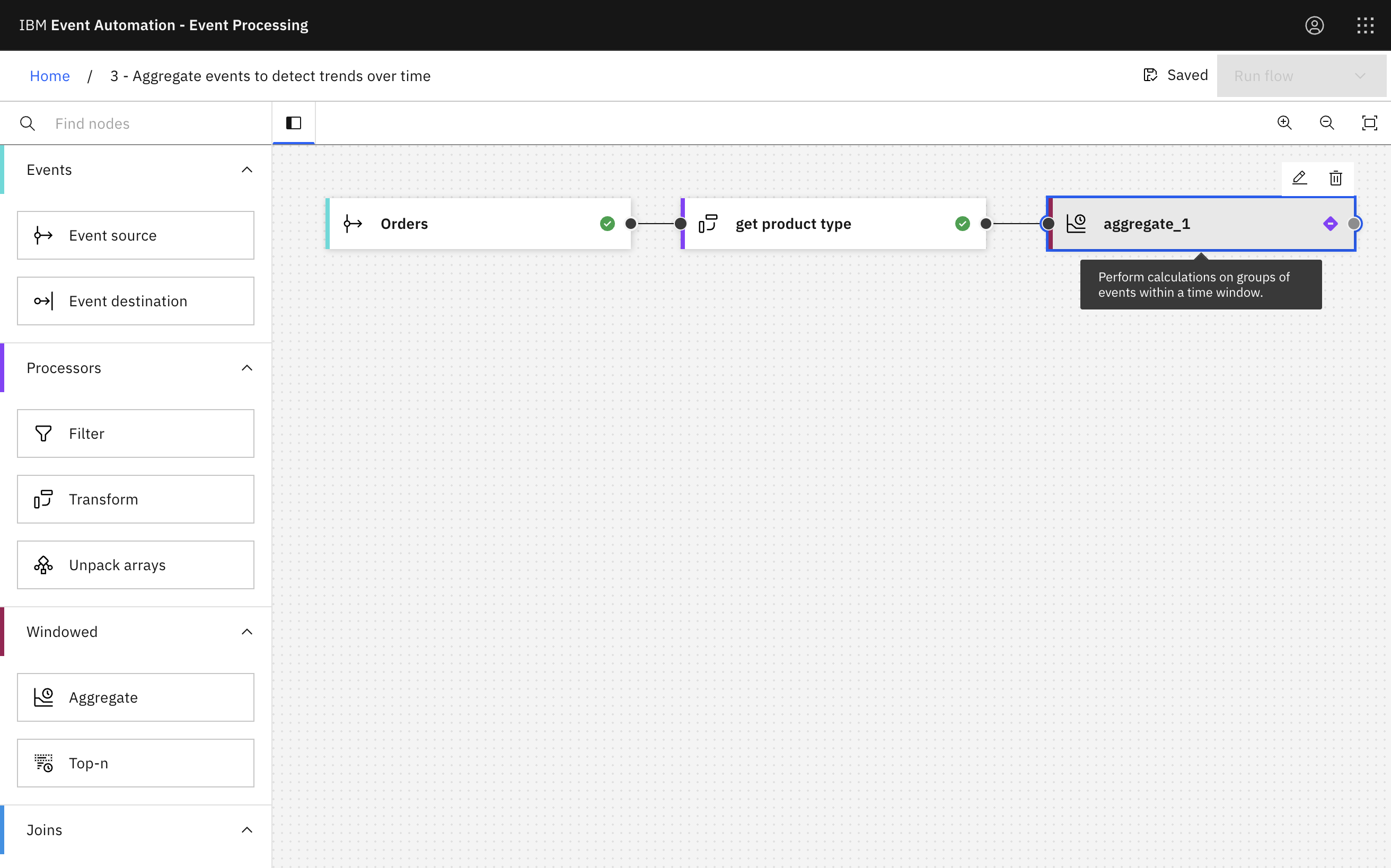

Step 5: Count the number of events of each type

Now that you have transformed the stream of events to include the type attribute, the next step is to total the number of items sold (using this new property).

-

Add an Aggregate node and link it to your transform node.

Create an aggregate node by dragging one onto the canvas. You can find this in the Windowed section of the left panel.

Click and drag from the small gray dot on the transform to the matching dot on the aggregate node.

-

Name the aggregate node to show that it will count the number of units sold of each type: hourly sales by type.

Hover over the aggregate node and click Edit to configure the node.

-

Specify a 1-hour window.

-

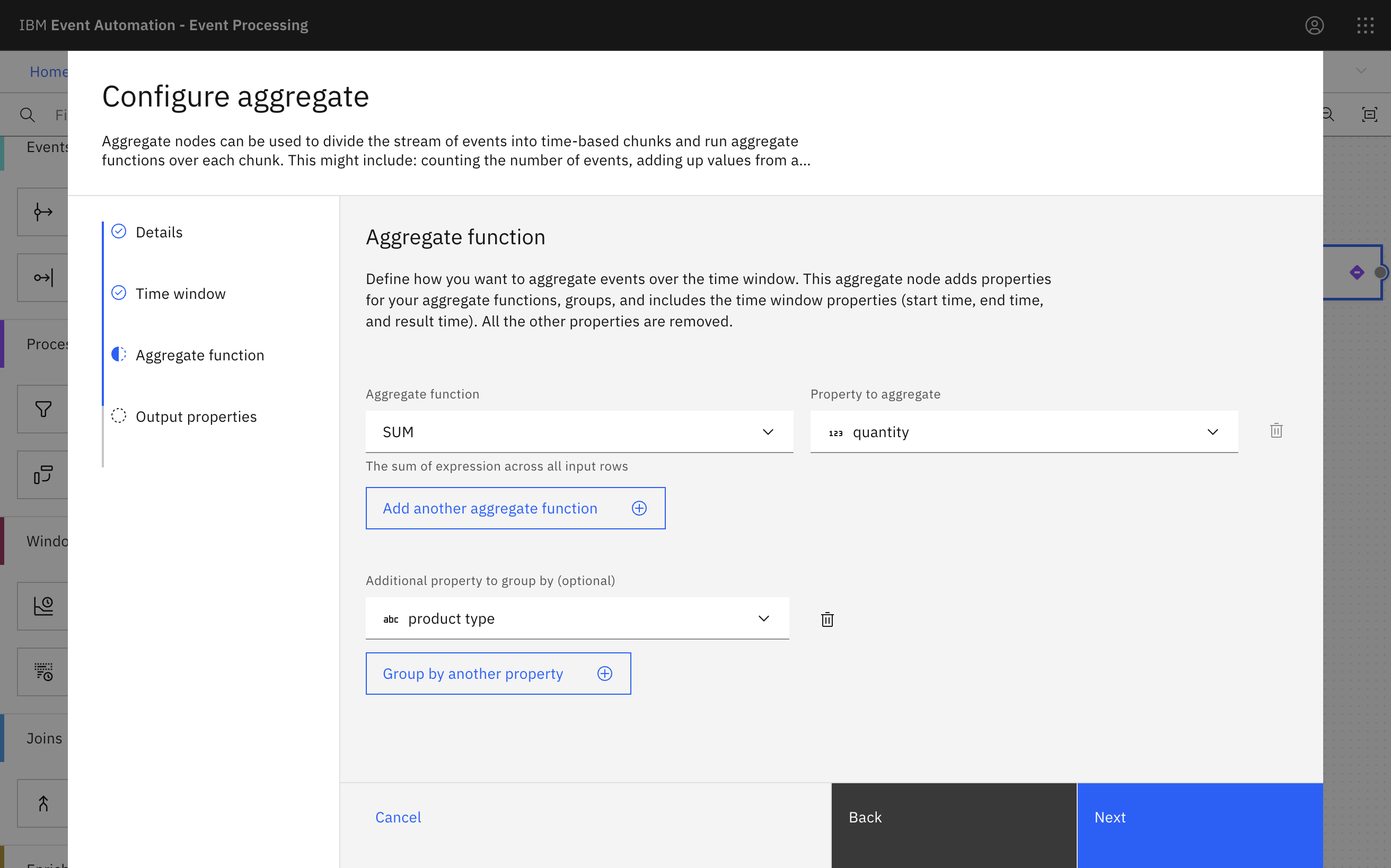

Sum the number of items sold in each hour, grouped by product type.

Select SUM as the aggregate function.

The property we are adding up is quantity, the number of items that are sold in each order. This will add up the number of items sold in each order that happens within the time window.

Finally, select the new property product type as the property to group by. This will add up the number of items sold of each type.

-

Rename the new aggregate properties.

Tip: It can be helpful to adjust the name of properties to something that will make sense to you, such as describing the SUM property as total sales.

-

Click Configure to finalize the aggregate.
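The aggregate configured above can be pictured as a sum of quantity per one-hour window and product type. The following Python sketch illustrates that idea on a few made-up events. It is not how the aggregate node is implemented. The event_time field name, the sample values, and the function name hourly_sales_by_type are assumptions for illustration; quantity and product type are the properties used in this tutorial.

```python
from collections import defaultdict
from datetime import datetime


def hourly_sales_by_type(events):
    """Sum quantity for each (window start, product type) pair.

    Hypothetical helper: windows are fixed one-hour buckets that start
    on the hour, and 'event_time' is an assumed field name.
    """
    totals = defaultdict(int)
    for event in events:
        # Truncate the timestamp to the start of its one-hour window.
        window_start = event["event_time"].replace(
            minute=0, second=0, microsecond=0
        )
        totals[(window_start, event["product type"])] += event["quantity"]
    return dict(totals)


# Illustrative events only; these values are not taken from the ORDERS topic.
events = [
    {"event_time": datetime(2024, 1, 1, 9, 5), "product type": "Cargo", "quantity": 2},
    {"event_time": datetime(2024, 1, 1, 9, 40), "product type": "Cargo", "quantity": 3},
    {"event_time": datetime(2024, 1, 1, 9, 50), "product type": "Capri", "quantity": 1},
    {"event_time": datetime(2024, 1, 1, 10, 10), "product type": "Cargo", "quantity": 4},
]

for (window_start, product_type), total in sorted(hourly_sales_by_type(events).items()):
    print(window_start, product_type, total)
```

Grouping by both the window start and the product type is what produces one total per type per hour, instead of a single total for the whole hour.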

Step 6: Test the flow

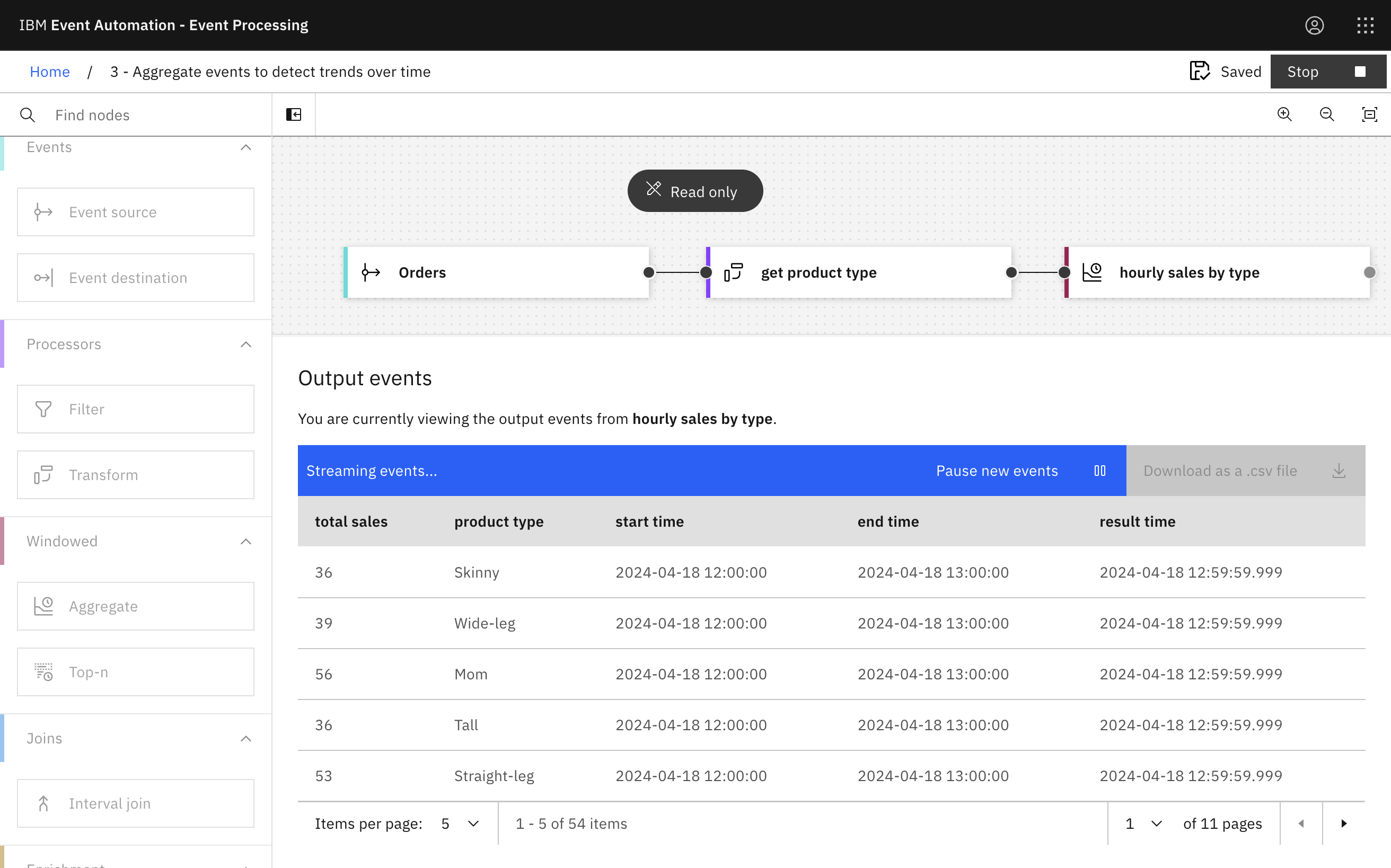

The final step is to run your event processing flow and view the results.

-

Use the Run menu, and select Include historical to run your flow on the history of order events available on this Kafka topic.

The output window shows that your aggregate is returning the total number of items for each type of product within each window of time.

-

When you have finished reviewing the results, you can stop this flow.

Recap

You used a transform node to dynamically extract a property from the description within the order events.

You used an aggregate node to total the number of items sold for each value of this extracted property, grouping into one-hour time windows.

Next step

In the next tutorial, you will try an interval join to correlate related events from multiple different event streams.