In this getting started tutorial, we’ll take you through how to use Event Processing to create a simple flow. The flow uses a filter node to select a subset of events.

For demonstration purposes, we use the scenario of a clothing company who want to move away from reviewing quarterly sales reports to reviewing orders in their region as they occur. Identifying large orders in real time helps the clothing company identify changes that are needed in sales forecasts much earlier. This information can also be fed back into their manufacturing cycle so that they can better respond to demand.

The steps in this getting started tutorial should take you about 10 minutes to complete.

Before you begin

- This getting started scenario assumes that all the capabilities in Event Automation are installed.

- Contact your cluster administrator to get the server address of the Kafka cluster that hosts the topic you need to access.

- Keep your Event Endpoint Management instance open on the topic page, because you need information from it when you create your flow.

- Open Event Processing in a separate window or tab.

This getting started scenario assumes that order details are available through a Kafka topic, and that the topic is discoverable in Event Endpoint Management. For example, in this scenario, the clothing company have a topic in their Event Endpoint Management catalog called ORDERS.NEW. This topic emits an event for every new order that is made.

Step 1: Create a flow

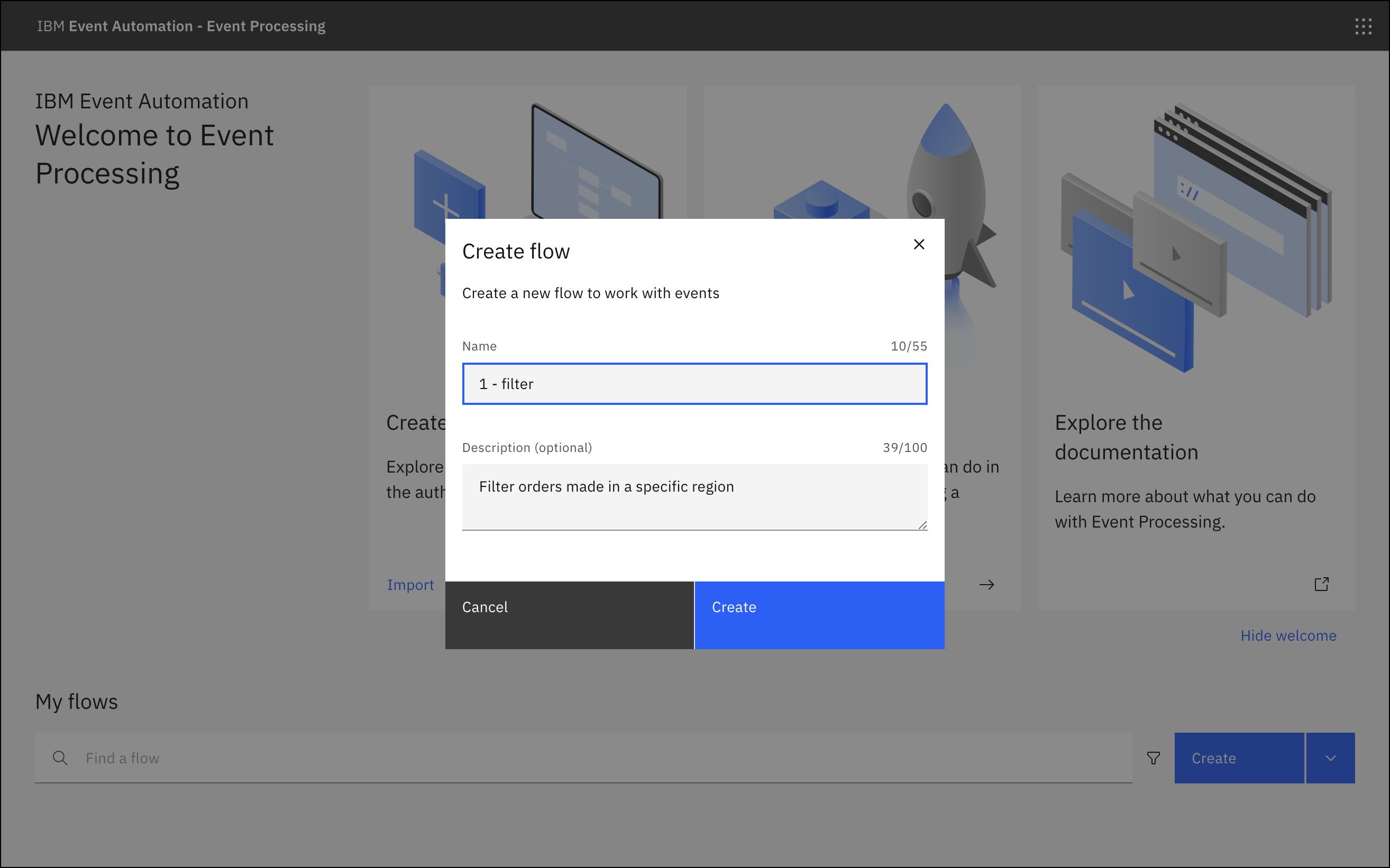

- On the Event Processing home page, click Create.

- Provide a name and optionally a description for your flow.

- Click Create. The canvas is displayed with an event source node on it.

Note: When you create a flow, an event source node is automatically added to your canvas. A purple checkbox is displayed on the event source node, indicating that the node is yet to be configured.

- To configure an event source, hover over the source node, and click Edit. The Configure event source window is displayed.

The clothing company created a flow called Filter and provided a description to explain that this flow will be used to identify orders made in a specific region.

Save

User actions are saved automatically. For save status updates, see the canvas header.

- Saving indicates that saving is in progress.

- Saved confirms success.

- Failed indicates that there are errors. If an action fails to save automatically, you receive a notification to try the save again. Click Retry to re-attempt the save. When a valid flow is saved, you can proceed to run the job.

- Stale indicates that another user modified the flow. A pop-up window is displayed and, depending on the Stale status, you are prompted to select one of the following actions:

  - Save as a copy: Select this action to save the current flow as a new one without incorporating the changes made by the other user. The new flow is called ‘Copy of <flow-name>’.

  - Accept changes: Select this action to apply the latest updates that are made by the other user to the flow. For the Flow running case, you can view the running flow.

  - Home: Select this action to navigate back to the home page. The specific flow will no longer be available because it was deleted by another user.

Step 2: Configure an event source

- You need to provide the source of events that you want to process. To do this, start by adding an event source: select Add new event source, and then click Next.

- In the Details section, provide a name for the node.

- In the Connect to Kafka cluster section, provide the server address of the Kafka cluster that you want to connect to. You can get the server address for the event source from your cluster administrator.

Note: To add more addresses, click Add URL + and enter the server address.

- Click Next. The Access credentials pane is displayed.

- Provide the credentials that are required to access your Kafka cluster and topic. You can generate access credentials for accessing a stream of events from the Event Endpoint Management page. For more information, see subscribing to topics.

- Click Next. The Topic selection pane is displayed.

- Use the radio buttons to select the topic that you want to process events from.

- Click Next. The Define event structure pane is displayed.

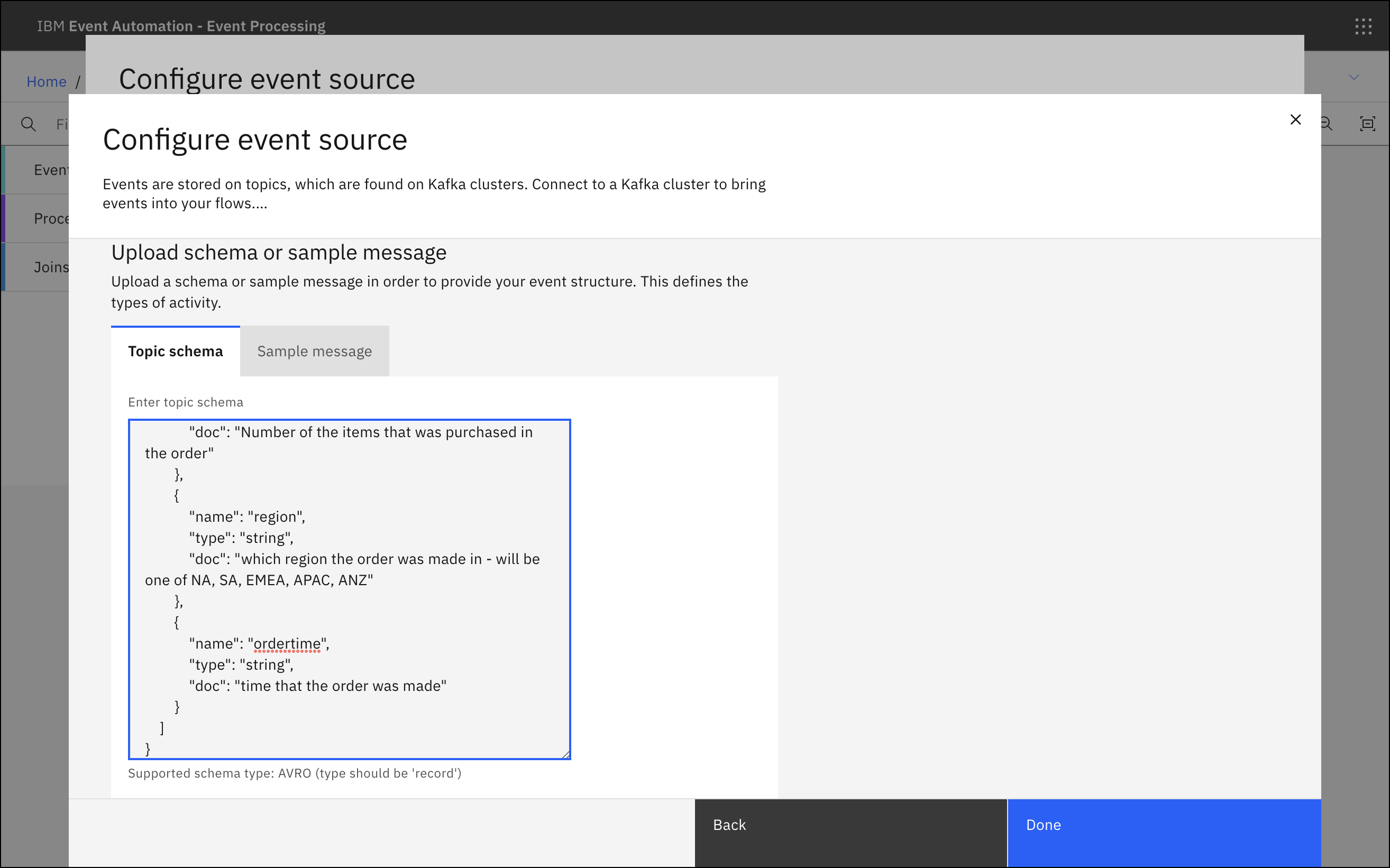

- Provide a schema or sample message that is available from the topic. To do this, click Upload a schema or sample message +, and then either enter an Avro schema in the Topic schema tab, or click the Sample message tab and enter a sample message in JSON format. For more information, see event information.

- Set an event time, and leave the option to save the event source for later reuse selected. Saving the connection details makes creating similar event sources a lot quicker because you do not need to enter the same details again.

- Click Configure. The canvas is displayed and your event source node has a green checkmark, which indicates that the node has been configured successfully.

The clothing company called their event source Orders and copied the schema for their Order events topic from Event Endpoint Management into the Topic schema tab in Event Processing.
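To make the Define event structure step more concrete, the following Python sketch shows what an Avro schema and a matching JSON sample message for an order event might look like, and checks that the sample message supplies every field that the schema declares with a compatible type. The field names (`id`, `customer`, `region`, `quantity`, `price`, `ordertime`) and the helper `missing_or_mistyped_fields` are hypothetical and are not taken from the real ORDERS.NEW topic; use the schema that your Event Endpoint Management catalog shows for your own topic.

```python
import json

# Hypothetical Avro record schema for an order event (field names are illustrative only).
ORDER_SCHEMA = {
    "type": "record",
    "name": "Order",
    "fields": [
        {"name": "id", "type": "string"},
        {"name": "customer", "type": "string"},
        {"name": "region", "type": "string"},
        {"name": "quantity", "type": "int"},
        {"name": "price", "type": "double"},
        {"name": "ordertime", "type": "string"},
    ],
}

# Hypothetical sample message in JSON format, like one you might enter in the Sample message tab.
SAMPLE_MESSAGE = """
{
  "id": "0001",
  "customer": "Jane Doe",
  "region": "EMEA",
  "quantity": 3,
  "price": 29.99,
  "ordertime": "2023-06-01 10:15:00.000"
}
"""

# Map the Avro primitive types used above to the Python types that json.loads produces.
AVRO_TO_PY = {"string": str, "int": int, "double": (int, float)}


def missing_or_mistyped_fields(schema: dict, message: dict) -> list:
    """Return the schema field names that are absent from the message or have an unexpected type."""
    problems = []
    for field in schema["fields"]:
        name, avro_type = field["name"], field["type"]
        if name not in message or not isinstance(message[name], AVRO_TO_PY[avro_type]):
            problems.append(name)
    return problems


if __name__ == "__main__":
    message = json.loads(SAMPLE_MESSAGE)
    print(missing_or_mistyped_fields(ORDER_SCHEMA, message))  # prints []
```

An empty list means that the sample message and the schema describe the same event structure, which is what you want before you click Configure.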

Step 3: Add a filter

- On the Palette, in the Processors section, drag a Filter node onto the canvas.

- Drag the output port on the event source node to the input port on the filter node to join the two nodes together.

- Hover over the filter node and click Edit. The Configure filter window is displayed.

Step 4: Configure the filter

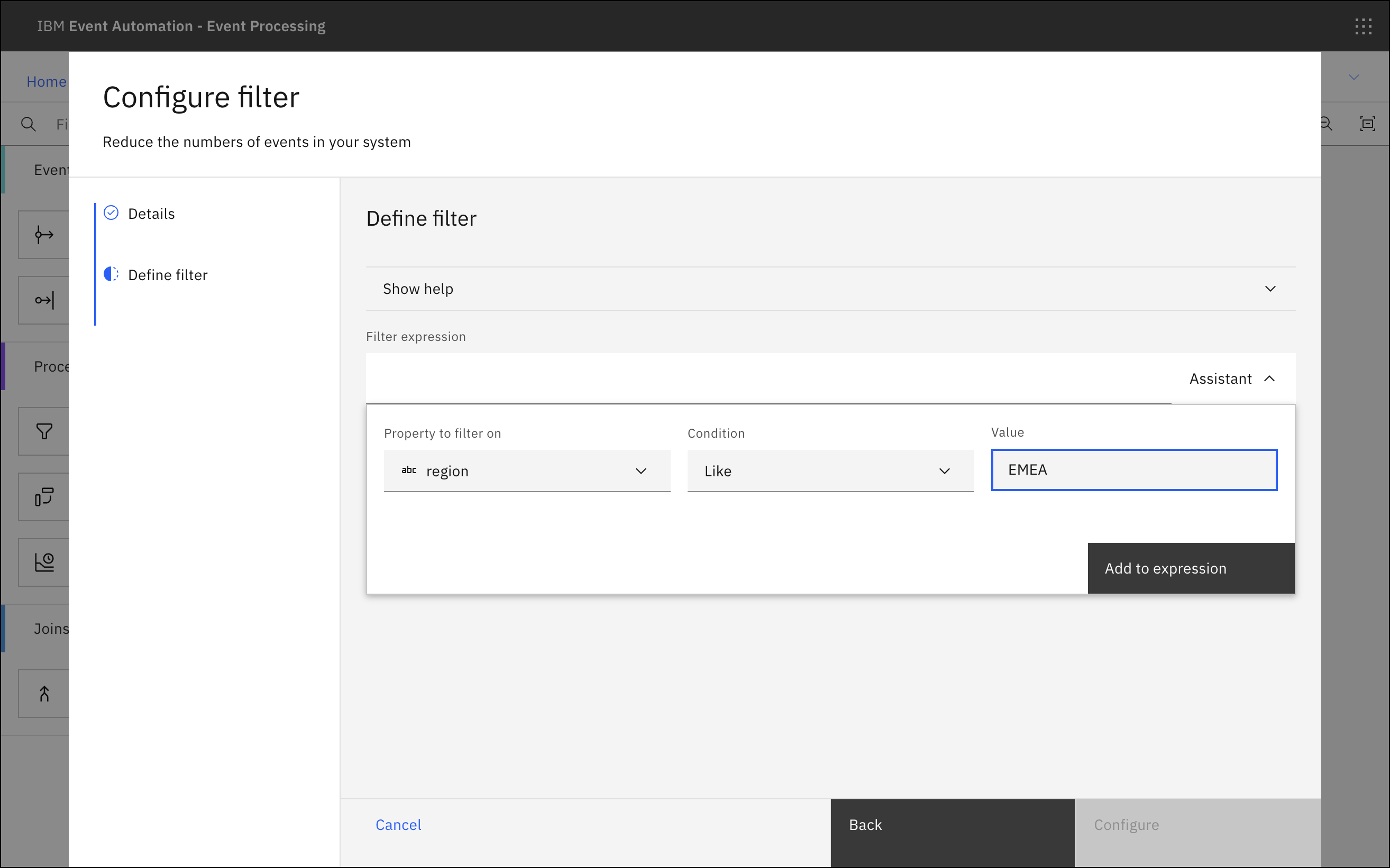

- Now, you need to configure the filter that defines the events that you are interested in. To do this, in the Details section, provide a name for the filter node.

- Click Next. The Define filter section is displayed.

- Use the Assistant to define a filter that meets your requirements by setting the Property to filter on, Condition, and Value fields.

- Click Add to expression.

- Click Configure. The canvas is displayed and your Filter node has a green checkmark, which indicates that the node has been configured successfully.

The clothing company called their filter EMEA orders and defined a filter that matches events with a region value of EMEA.
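Conceptually, the EMEA orders filter keeps only the events whose region property equals EMEA and discards the rest. The following Python sketch illustrates that behaviour on an in-memory list of order events. It is a simplified model, not how Event Processing evaluates filters internally; the event fields (`id`, `region`, `quantity`) and the helpers `filter_events` and `region_is` are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, Iterator

Event = Dict[str, Any]


def filter_events(events: Iterable[Event], predicate: Callable[[Event], bool]) -> Iterator[Event]:
    """Yield only the events for which the predicate returns True, preserving their order."""
    for event in events:
        if predicate(event):
            yield event


def region_is(value: str) -> Callable[[Event], bool]:
    """Build a predicate that matches events whose hypothetical 'region' field equals value."""
    return lambda event: event.get("region") == value


if __name__ == "__main__":
    orders = [
        {"id": "0001", "region": "EMEA", "quantity": 3},
        {"id": "0002", "region": "NA", "quantity": 1},
        {"id": "0003", "region": "EMEA", "quantity": 7},
        {"id": "0004", "region": "APAC", "quantity": 2},
    ]
    emea_orders = list(filter_events(orders, region_is("EMEA")))
    print([order["id"] for order in emea_orders])  # prints ['0001', '0003']
```

Events that do not match, including events with no region value at all, are simply dropped, so the filter output is always a subset of its input.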

Step 5: Run the flow

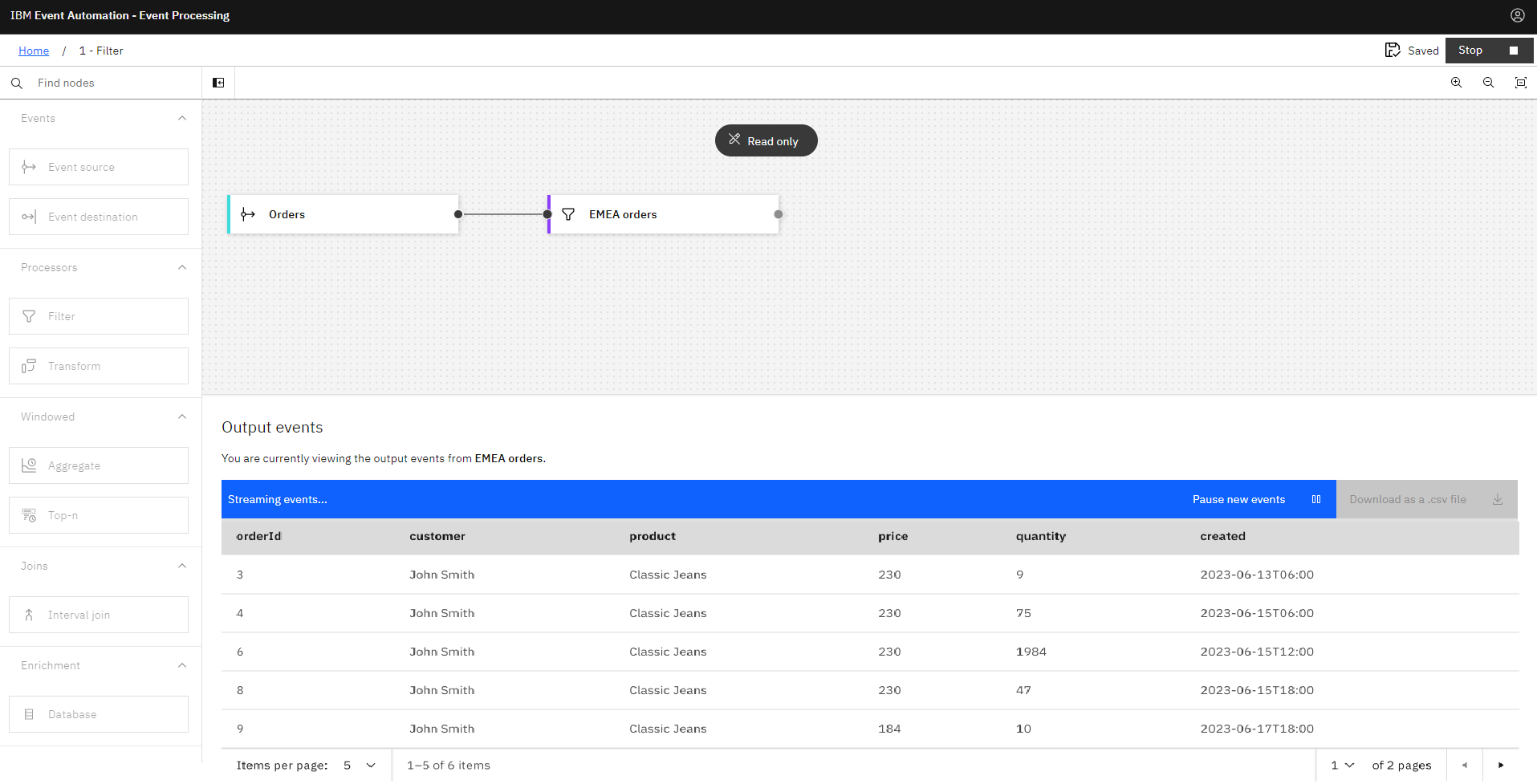

- The last step is to run your Event Processing flow and view the results.

- In the navigation banner, expand Run and select either Events from now or Include historical to run your flow.

A live view of results from your running flow automatically opens. The results view shows the output from your flow: the result of processing any events that have been produced to your chosen Event Endpoint Management topic.

Tip: Include historical is useful while you are developing your flows because you don’t need to wait for new events to be produced to the topic. You can use all the events already on the Kafka topic to check that your flow is working the way that you want.

In the navigation banner, click Stop to stop the flow when you finish reviewing the results.

The clothing company selected Include historical to run the filter on the history of order events available on their Order events topic. All the orders from the EMEA region are displayed. This provides the company with real-time information about orders placed in the region, and helps them review orders as they occur.
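One way to picture the difference between Events from now and Include historical is in terms of where the flow starts reading the topic. The Python sketch below models a topic as an append-only list and assumes that Include historical starts from the earliest event on the topic, while Events from now starts after the last event that exists when the flow starts. This is a simplified model for illustration, not the Event Processing runtime; `Topic`, `RunMode`, `start_offset`, and `matching_events` are hypothetical names.

```python
from enum import Enum
from typing import Any, Dict, List

Event = Dict[str, Any]


class RunMode(Enum):
    EVENTS_FROM_NOW = "Events from now"
    INCLUDE_HISTORICAL = "Include historical"


class Topic:
    """A toy append-only topic: events are kept in the order they are produced."""

    def __init__(self) -> None:
        self.events: List[Event] = []

    def produce(self, event: Event) -> None:
        self.events.append(event)


def start_offset(topic: Topic, mode: RunMode) -> int:
    """Return the position the flow starts reading from, fixed at the moment the flow is run."""
    return 0 if mode is RunMode.INCLUDE_HISTORICAL else len(topic.events)


def matching_events(topic: Topic, offset: int, region: str) -> List[Event]:
    """Apply a region filter to every event from the start offset onwards."""
    return [e for e in topic.events[offset:] if e.get("region") == region]


if __name__ == "__main__":
    topic = Topic()
    topic.produce({"id": "0001", "region": "EMEA"})  # produced before the flow starts
    topic.produce({"id": "0002", "region": "NA"})

    historical = start_offset(topic, RunMode.INCLUDE_HISTORICAL)
    from_now = start_offset(topic, RunMode.EVENTS_FROM_NOW)

    topic.produce({"id": "0003", "region": "EMEA"})  # produced after the flow starts

    print([e["id"] for e in matching_events(topic, historical, "EMEA")])  # prints ['0001', '0003']
    print([e["id"] for e in matching_events(topic, from_now, "EMEA")])  # prints ['0003']
```

In this model, Include historical returns results immediately from events that are already on the topic, which is why it is convenient while you are developing a flow.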

Flow statuses

A flow status indicates the current state of the flow. A flow can be in one of the following states:

- Draft: Indicates that the flow includes one or more nodes that need to be configured. The flow cannot be run.

- Valid: Indicates that all nodes in the flow are configured and valid. The flow is ready to run.

- Invalid: Indicates that the nodes in the flow are configured but have a validation error, or a required node is missing. The flow cannot be run.

- Running: Indicates that the flow is configured, validated, running, and generating output.

- Error: Indicates that an error occurred during the runtime of a previously running flow.
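The status rules above can be summarised as a small decision function. The Python sketch below is a hypothetical model, not part of the Event Processing product: the `Node` fields, the `flow_status` function, and its keyword arguments are illustrative names. It also assumes a precedence that the list does not state explicitly: runtime states are checked first, and a flow that has any unconfigured node is reported as Draft even if a required node is also missing.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class FlowStatus(Enum):
    DRAFT = "Draft"
    VALID = "Valid"
    INVALID = "Invalid"
    RUNNING = "Running"
    ERROR = "Error"


@dataclass
class Node:
    name: str
    configured: bool
    has_validation_error: bool = False


def flow_status(nodes: List[Node], required_node_missing: bool = False,
                running: bool = False, runtime_error: bool = False) -> FlowStatus:
    """Derive a flow status from the rules in the Flow statuses list (assumed precedence)."""
    if runtime_error:
        return FlowStatus.ERROR  # an error occurred while a previously running flow was running
    if running:
        return FlowStatus.RUNNING  # configured, validated, running, and generating output
    if any(not node.configured for node in nodes):
        return FlowStatus.DRAFT  # at least one node still needs to be configured
    if required_node_missing or any(node.has_validation_error for node in nodes):
        return FlowStatus.INVALID  # configured, but failing validation or missing a required node
    return FlowStatus.VALID  # every node is configured and valid, so the flow is ready to run


if __name__ == "__main__":
    source = Node("Orders", configured=True)
    emea_filter = Node("EMEA orders", configured=False)
    print(flow_status([source, emea_filter]).value)  # prints Draft
    emea_filter.configured = True
    print(flow_status([source, emea_filter]).value)  # prints Valid
```

Following the tutorial, a new flow starts as Draft because its event source node is unconfigured, becomes Valid after you configure the Orders and EMEA orders nodes, and is Running while you view the results.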