Upgrade your Event Processing deployment as follows. Review the upgrade procedure and decide the right steps to take for your deployment based on your platform and the version level you are upgrading to.

Upgrade paths

You can upgrade Event Processing to the latest 1.1.x version directly from 1.1.0 or any 1.0.x version by using operator version 1.1.x. The upgrade procedure depends on whether you are upgrading to a major, minor, or patch level version, and what your catalog source is.

-

On OpenShift, you can upgrade Event Processing and the IBM Operator for Apache Flink to the latest 1.1.x version by using operator channel v1.1. Review the general upgrade prerequisites before following the instructions to upgrade on OpenShift.

-

On other Kubernetes platforms, you must update the Helm repository for every version update (major, minor, or patch), and then upgrade by using the Helm chart. Review the general upgrade prerequisites before following the instructions to upgrade on other Kubernetes platforms.
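Which path applies depends only on which digit of the version changes. As an illustration, the following Bash sketch compares two X.Y.Z version strings. The function names upgrade_type and needs_flow_stop are hypothetical helpers, not part of Event Processing or its tooling.

```shell
#!/usr/bin/env bash
# Illustrative helper (not part of any IBM tooling): classify the move from
# version $1 to version $2 (both X.Y.Z) as major, minor, patch or none.
upgrade_type() {
  if [ "${1%%.*}" != "${2%%.*}" ]; then
    echo major            # first digit differs
  elif [ "${1%.*}" != "${2%.*}" ]; then
    echo minor            # same first digit, second digit differs
  elif [ "$1" != "$2" ]; then
    echo patch            # only the third digit differs
  else
    echo none
  fi
}

# Flows must be stopped before major and minor upgrades, but not patch upgrades.
needs_flow_stop() {
  case "$(upgrade_type "$1" "$2")" in
    major|minor) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, upgrade_type 1.0.5 1.1.1 prints minor, so flows must be stopped before that upgrade.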

Prerequisites

-

Ensure you have a supported version of the OpenShift Container Platform installed. For supported versions, see the support matrix.

-

If you are upgrading from Event Processing version 1.1.2 or earlier, fix any flows that are in an Error state, or delete any such flows if no longer required.

-

To upgrade without data loss, your Event Processing and IBM Operator for Apache Flink instances must have persistent storage enabled. If you upgrade instances which use ephemeral storage, all data will be lost.

-

If your Flink instance is an application cluster for deploying advanced flows in production environments, the automatic upgrade cannot update the custom Flink image built by extending the IBM-provided Flink image. In this case, after the successful upgrade of the operator, complete steps 1a, 1b, 1e, and 2c in build and deploy a Flink SQL runner to make use of the upgraded Flink image.

Important: You will experience some downtime during the Event Processing upgrade while the pods for the relevant components are recycled.

Upgrading on the OpenShift Container Platform

You can upgrade your Event Processing and Flink instances running on the OpenShift Container Platform by using the CLI or the web console.

Planning your upgrade

Complete the following steps to plan your upgrade on OpenShift.

-

Determine which Operator Lifecycle Manager (OLM) channel is used by your existing Subscription. You can check the channel you are subscribed to in the web console (see the Update channel section), or by using the CLI as follows (this is the subscription created during installation):

-

Run the following command to check your subscription details:

oc get subscription

-

Check the CHANNEL column for the channel you are subscribed to, for example, v1.0 in the following snippet:

NAME                        PACKAGE                     SOURCE                      CHANNEL
ibm-eventautomation-flink   ibm-eventautomation-flink   ea-flink-operator-catalog   v1.0
ibm-eventprocessing         ibm-eventprocessing         ea-sp-operator-catalog      v1.0

-

- If your existing Subscription does not use the v1.1 channel, your upgrade is a change in a minor version. Complete the following steps to upgrade:

- Stop your flows.

- Ensure the catalog source for the new version is available.

- Change your Subscription to the v1.1 channel by using the CLI or the web console. The channel change will upgrade your Event Processing and IBM Operator for Apache Flink operators, and your Event Processing and Flink instances are then also automatically upgraded.

- Restart your flows after the upgrade.

-

If your existing Subscription is already on the v1.1 channel, your upgrade is a change to the patch level (third digit) only. Make the catalog source for your new version available to upgrade to the latest level. If you installed by using the IBM Operator Catalog with the latest label, new versions are automatically available. The Event Processing and IBM Operator for Apache Flink operators are automatically upgraded to the latest 1.1.x version when it is available in the catalog, and your Event Processing and Flink instances are then also automatically upgraded.
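If you manage several Subscriptions, you can filter the oc get subscription output instead of reading the CHANNEL column by eye. This is a minimal sketch that assumes the default table layout shown earlier (NAME, PACKAGE, SOURCE, CHANNEL); the function name subscriptions_needing_channel_change is hypothetical.

```shell
#!/usr/bin/env bash
# Read `oc get subscription` output on stdin and print "<name> <channel>"
# for every Subscription that is not on the target channel (default v1.1).
# The CHANNEL column is located by its header, so extra columns do not matter.
subscriptions_needing_channel_change() {
  local target="${1:-v1.1}"
  awk -v target="$target" '
    NR == 1 {
      for (i = 1; i <= NF; i++) if ($i == "CHANNEL") col = i
      next
    }
    col && $col != target { print $1, $col }
  '
}
```

Usage: oc get subscription -n <namespace> | subscriptions_needing_channel_change v1.1. Every Subscription it prints is on an older channel, so its upgrade is a minor version change; an empty result means you are on the v1.1 channel and only patch-level updates apply.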

Stopping flows for major and minor upgrades

If you are upgrading to a new major or minor release (first and second digit change), stop the flows before upgrading. This is not required for patch level (third digit) upgrades.

Stop all the running flows in the Event Processing UI as follows to avoid errors:

- Log in to your Event Processing UI.

- In the flow that you want to stop, select View flow.

- In the navigation banner, click Stop to stop the flow.

Note: When upgrading to a new major or minor version (such as upgrading from v1.0 to v1.1), ensure you upgrade both the Event Processing operator and the IBM Operator for Apache Flink, and then complete the post-upgrade tasks before using your flows again.

Making new catalog source available

Before you can upgrade to the latest version, the catalog source for the new version must be available on your cluster. Whether you have to take action depends on how you set up version control for your deployment.

-

Latest versions: If your catalog source is the IBM Operator Catalog, latest versions are always available when published, and you do not have to make new catalog sources available.

-

Specific versions: If you applied a catalog source for a specific version to control the version of the operator and instances that are installed, you must apply the new catalog source you want to upgrade to.

Upgrading Subscription by using the CLI

If you are using the OpenShift command-line interface (CLI), the oc command, complete the steps in the following sections to upgrade your Event Processing and Flink installations.

For Event Processing:

- Log in to your Red Hat OpenShift Container Platform as a cluster administrator by using the oc CLI (oc login).

-

Ensure the required Event Processing Operator Upgrade Channel is available:

oc get packagemanifest ibm-eventprocessing -o=jsonpath='{.status.channels[*].name}'

-

Change the subscription to move to the required update channel, where vX.Y is the required update channel (for example, v1.1):

oc patch subscription -n <namespace> ibm-eventprocessing --patch '{"spec":{"channel":"vX.Y"}}' --type=merge
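The two CLI steps above (check the package manifest, then patch the Subscription) can be combined so that the patch is only attempted when the target channel exists. This sketch assumes, as the commands above do, that each Subscription has the same name as its package. The names switch_channel, channel_available, run and DRY_RUN are hypothetical and introduced only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: switch an operator Subscription to a new OLM channel only if the
# package manifest advertises that channel.
# Set DRY_RUN=1 to print the oc patch command instead of running it.

# Succeeds if $2 appears as a whole word in the space-separated list $1.
channel_available() {
  local channels="$1" wanted="$2" c
  for c in $channels; do
    [ "$c" = "$wanted" ] && return 0
  done
  return 1
}

# Runs a command, or only prints it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Usage: switch_channel <namespace> <package> <channel>
switch_channel() {
  local ns="$1" pkg="$2" channel="$3" channels
  channels=$(oc get packagemanifest "$pkg" -o=jsonpath='{.status.channels[*].name}') || return 1
  if ! channel_available "$channels" "$channel"; then
    echo "channel $channel is not offered for $pkg (found: $channels)" >&2
    return 1
  fi
  run oc patch subscription -n "$ns" "$pkg" \
    --patch "{\"spec\":{\"channel\":\"$channel\"}}" --type=merge
}
```

The same function covers the Flink operator, for example switch_channel <namespace> ibm-eventautomation-flink v1.1.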

For Flink:

- Log in to your Red Hat OpenShift Container Platform as a cluster administrator by using the oc CLI (oc login).

-

Ensure the required IBM Operator for Apache Flink Operator Upgrade Channel is available:

oc get packagemanifest ibm-eventautomation-flink -o=jsonpath='{.status.channels[*].name}'

-

Change the subscription to move to the required update channel, where vX.Y is the required update channel (for example, v1.1):

oc patch subscription -n <namespace> ibm-eventautomation-flink --patch '{"spec":{"channel":"vX.Y"}}' --type=merge

- If your Flink instance is an application cluster for deploying advanced flows in production environments, complete steps 1a, 1b, 1e, and 2c in build and deploy a Flink SQL runner to make use of the upgraded Flink image.

All Event Processing and Flink pods that need to be updated as part of the upgrade will be rolled.

Upgrading Subscription by using the web console

If you are using the OpenShift Container Platform web console, complete the steps in the following sections to upgrade your Event Processing and Flink installations.

For Event Processing:

- Log in to the OpenShift Container Platform web console using your login credentials.

-

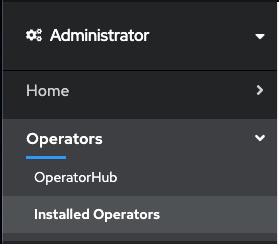

Expand Operators in the navigation on the left, and click Installed Operators.

- From the Project list, select the namespace (project) the instance is installed in.

- Locate the operator that manages your Event Processing instance in the namespace. It is called Event Processing in the Name column. Click Event Processing in that row.

- Click the Subscription tab to display the Subscription details for the Event Processing operator.

- Select the version number in the Update channel section (for example, v1.0). The Change Subscription update channel dialog is displayed, showing the channels that are available to upgrade to.

- Select the required channel, for example v1.1, and click the Save button on the Change Subscription update channel dialog.

All Event Processing pods that need to be updated as part of the upgrade will be rolled.

For Flink:

- Log in to the OpenShift Container Platform web console using your login credentials.

- Expand Operators in the navigation on the left, and click Installed Operators.

- From the Project list, select the namespace (project) the instance is installed in.

- Locate the operator that manages your Flink instance in the namespace. It is called IBM Operator for Apache Flink in the Name column. Click IBM Operator for Apache Flink in that row.

- Click the Subscription tab to display the Subscription details for the IBM Operator for Apache Flink.

- Select the version number in the Update channel section (for example, v1.0). The Change Subscription update channel dialog is displayed, showing the channels that are available to upgrade to.

- Select the required channel, for example v1.1, and click the Save button on the Change Subscription update channel dialog.

- If your Flink instance is an application cluster for deploying advanced flows in production environments, complete steps 1a, 1b, 1e, and 2c in build and deploy a Flink SQL runner to make use of the upgraded Flink image.

All Flink pods that need to be updated as part of the upgrade will be rolled.

Upgrading on other Kubernetes platforms by using Helm

If you are running Event Processing on Kubernetes platforms that support the Red Hat Universal Base Images (UBI) containers, you can upgrade Event Processing by using the Helm chart.

Planning your upgrade

Complete the following steps to plan your upgrade on other Kubernetes platforms.

-

Determine the chart version for your existing deployment:

-

Change to the namespace where your Event Processing instance is installed:

kubectl config set-context --current --namespace=<namespace>

-

Run the following command to check what version is installed:

helm list

-

Check the version installed in the CHART column, for example, <chart-name>-1.0.5 in the following snippet:

NAME                       NAMESPACE  REVISION  UPDATED                                  STATUS    CHART                            APP VERSION
ibm-eventautomation-flink  ep         1         2023-11-20 11:49:52.565373278 +0000 UTC  deployed  ibm-eventautomation-flink-1.0.5  9963366-651feed
ibm-eventprocessing        ep         1         2023-11-20 11:49:27.221411789 +0000 UTC  deployed  ibm-eventprocessing-1.0.5        9963366-651feed

-

-

Check the latest chart version that you can upgrade to:

- Log in to your Kubernetes cluster as a cluster administrator by setting your kubectl context.

-

Add the IBM Helm repository:

helm repo add ibm-helm https://raw.githubusercontent.com/IBM/charts/master/repo/ibm-helm

-

Update the Helm repository:

helm repo update ibm-helm

-

Check that the version of the chart you will be upgrading to is the intended version:

helm show chart ibm-helm/ibm-eventautomation-flink-operator

Check the version: value in the output, for example:

version: 1.1.1


- If the chart version for your existing deployment is 1.0.x, your upgrade is a change in a minor version. Complete the following steps to upgrade:

- Stop your flows before the upgrade (this is the same process as on the OpenShift platform).

- Follow the steps in Upgrading by using Helm to update your Custom Resource Definitions (CRDs) and operator charts to the latest version. The operators will then upgrade your instances automatically.

- Restart your flows after the upgrade.

- If your existing chart version is 1.1.x, your upgrade is a change in the patch level version only, and you only need to follow the steps in Upgrading by using Helm to update your CRDs and operator charts to the latest version. The operators will then upgrade your instances automatically.
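To make the minor-versus-patch decision from the Helm output, you can extract the two chart versions and compare only their first two digits. This sketch assumes that the chart name ends in -X.Y.Z, as in the CHART column example above, and that helm show chart prints a top-level version: line. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: decide between the minor-version and patch-level Helm upgrade paths.

# Strips the chart name, for example "ibm-eventprocessing-1.0.5" -> "1.0.5".
chart_version() {
  echo "${1##*-}"
}

# Reads `helm show chart` output on stdin and prints the value of the first
# top-level "version:" line (indented dependency versions are ignored).
target_chart_version() {
  awk '/^version:/ { print $2; exit }'
}

# Prints "minor" when the X.Y part of the two versions differs, otherwise "patch".
helm_upgrade_path() {
  local from="$1" to="$2"
  if [ "${from%.*}" != "${to%.*}" ]; then echo minor; else echo patch; fi
}
```

For example, helm show chart ibm-helm/ibm-eventautomation-flink-operator | target_chart_version prints the version you will upgrade to, and helm_upgrade_path "$(chart_version ibm-eventprocessing-1.0.5)" 1.1.1 prints minor, which means flows must be stopped first.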

Upgrading by using Helm

You can upgrade your Event Processing and Flink instances running on other Kubernetes platforms by using Helm.

- Log in to your Kubernetes cluster as a cluster administrator by setting your kubectl context.

- Ensure you are running in the namespace containing the Helm release of the IBM Operator for Apache Flink CRDs.

-

Run the following command to upgrade the Helm release that manages your Flink CRDs:

helm upgrade <flink_crd_release_name> ibm-helm/ibm-eventautomation-flink-operator-crd

-

Upgrade the Helm release of IBM Operator for Apache Flink. Switch to the correct namespace if your CRD Helm release is in a different namespace from your operator, and then run:

helm upgrade <flink_release_name> ibm-helm/ibm-eventautomation-flink-operator <install_flags>

Where:

- <flink_crd_release_name> is the Helm release name of the Helm installation that manages the Flink CRDs.
- <flink_release_name> is the Helm release name of the Flink operator.
- <install_flags> are any optional installation property settings, such as --set watchAnyNamespace=true.

-

Identify the namespace where the Event Processing installation is located and the Helm release managing the CRDs by looking at the annotations on the CRDs:

kubectl get crd eventprocessings.events.ibm.com -o jsonpath='{.metadata.annotations}'

The following is an example output showing CRDs managed by Helm release ep-crds in namespace ep:

{"meta.helm.sh/release-name": "ep-crds", "meta.helm.sh/release-namespace": "ep"}

-

Ensure you are using the namespace where your Event Processing CRD Helm release is located (see previous step):

kubectl config set-context --current --namespace=<namespace>

-

Run the following command to upgrade the Helm release that manages your Event Processing CRDs:

helm upgrade <crd_release_name> ibm-helm/ibm-ep-operator-crd

-

Run the following command to upgrade the Helm release of your operator installation. Switch to the right namespace if your CRD Helm release is in a different namespace from your operator.

helm upgrade <ep_release_name> ibm-helm/ibm-ep-operator <install_flags>

Where:

- <crd_release_name> is the Helm release name of the Helm installation that manages the Event Processing CRDs.
- <ep_release_name> is the Helm release name of the Event Processing operator.
- <install_flags> are any optional installation property settings, such as --set watchAnyNamespace=true.
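Because each CRD release is upgraded before the operator that uses it, in the order given above, it can help to script the sequence. This sketch passes namespaces with the Helm -n flag instead of switching the kubectl context. Release names and namespaces are your own values; upgrade_all, run and DRY_RUN are hypothetical names, and any <install_flags> you need must be appended to the two operator commands.

```shell
#!/usr/bin/env bash
# Sketch: the Helm upgrade sequence above, in order. Set DRY_RUN=1 to print
# the helm commands instead of running them.

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Usage: upgrade_all <flink_crd_release> <flink_crd_ns> <flink_release> <flink_ns> \
#                    <ep_crd_release> <ep_crd_ns> <ep_release> <ep_ns>
upgrade_all() {
  if [ "$#" -ne 8 ]; then
    echo "usage: upgrade_all <flink_crd_release> <flink_crd_ns> <flink_release> <flink_ns> <ep_crd_release> <ep_crd_ns> <ep_release> <ep_ns>" >&2
    return 2
  fi
  # Each CRD release is upgraded before its operator; stop at the first failure.
  run helm upgrade "$1" ibm-helm/ibm-eventautomation-flink-operator-crd -n "$2" || return
  run helm upgrade "$3" ibm-helm/ibm-eventautomation-flink-operator -n "$4" || return
  run helm upgrade "$5" ibm-helm/ibm-ep-operator-crd -n "$6" || return
  run helm upgrade "$7" ibm-helm/ibm-ep-operator -n "$8"
}
```

Running it first with DRY_RUN=1 lets you review the exact commands before anything changes on the cluster.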

Note: After you complete the previous steps, you can restore your Flink instances as follows:

-

If your Flink instance is a session cluster and you disabled the webhook of the Flink operator (--set webhook.create=false), you must update the spec.image and spec.flinkVersion fields of your FlinkDeployment custom resource to match the new values of the IBM_FLINK_IMAGE and IBM_FLINK_VERSION environment variables, respectively, on the Flink operator pod.

-

If your Flink instance is an application cluster for deploying advanced flows in production environments, complete steps 1a, 1b, 1e, and 2c in build and deploy a Flink SQL runner to make use of the upgraded Flink image.
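For the session-cluster case, the FlinkDeployment update can be scripted by reading the two environment variables from the Flink operator pod and applying a merge patch. This is a sketch under stated assumptions: both variables are defined as literal values (not valueFrom) on the first container of the operator pod, and flink_patch_json, pod_env and sync_flink_deployment are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: align a session-cluster FlinkDeployment with the image and version
# advertised by the upgraded Flink operator (webhook disabled case).
# ASSUMPTION: IBM_FLINK_IMAGE and IBM_FLINK_VERSION are literal env values on
# the first container of the operator pod.

# Builds the merge-patch body for `kubectl patch --type=merge`.
flink_patch_json() {
  printf '{"spec":{"image":"%s","flinkVersion":"%s"}}' "$1" "$2"
}

# Prints the value of env var $3 from the first container of pod $1 in namespace $2.
pod_env() {
  kubectl get pod "$1" -n "$2" \
    -o jsonpath="{.spec.containers[0].env[?(@.name==\"$3\")].value}"
}

# Usage: sync_flink_deployment <flink-operator-pod> <flink-deployment> <namespace>
sync_flink_deployment() {
  local pod="$1" fd="$2" ns="$3" image version
  image=$(pod_env "$pod" "$ns" IBM_FLINK_IMAGE) || return
  version=$(pod_env "$pod" "$ns" IBM_FLINK_VERSION) || return
  kubectl patch flinkdeployment "$fd" -n "$ns" --type=merge \
    -p "$(flink_patch_json "$image" "$version")"
}
```

Check the values that pod_env returns before patching; if either is empty, your operator pod defines the variables differently and the assumption above does not hold.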

Post-upgrade tasks

Verifying the upgrade

After the upgrade, verify the status of the Event Processing and Flink instances by using the CLI or the UI.
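One CLI check is to confirm that every pod in the namespace is Running with all of its containers ready. The sketch below filters the standard kubectl get pods --no-headers output, whose first three columns are NAME, READY and STATUS. unready_pods is a hypothetical helper; an empty result means no pod is still starting or failing.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get pods --no-headers` output on stdin and print
# "<name> <ready> <status>" for every pod that is not Running with all
# containers ready. Pods in the Completed state (finished jobs) are skipped.
unready_pods() {
  awk '
    $3 == "Completed" { next }
    {
      split($2, r, "/")
      if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
    }
  '
}
```

Usage: kubectl get pods -n <namespace> --no-headers | unready_pods. Because pods are rolled during the upgrade, run it again after a short wait if it reports pods that are still being created.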

Restart your flows

This only applies if you stopped flows before the upgrade. After the successful upgrade of both the Event Processing and the Flink operators, you can restart the flows that you stopped earlier.

- Log in to your Event Processing UI.

- In the flow that you want to run, select Edit flow.

- In the navigation banner, expand Run, and select either Events from now or Include historical to run your flow.

Securing communication with Flink deployments

To secure your communication between Event Processing and Flink deployments, complete the following steps:

- Stop any Event Processing flows that are currently running.

- Create the required JKS secrets for the Flink operator, the Flink instances, and optionally for Event Processing.

-

Ensure that the required certificates are mounted in your operator by running the command:

kubectl exec -it <flink-operator-pod> -- ls /opt/flink/tls-cert

The required truststore.jks file is listed in the output.

Note: If the truststore.jks file is not present, restart the Flink operator pod by deleting it:

kubectl delete pod <pod-name> -n <namespace>

-

Add your SSL configuration to the spec.flinkConfiguration section in the FlinkDeployment custom resource. For example:

spec:
  flinkConfiguration:
    security.ssl.enabled: 'true'
    security.ssl.truststore: /opt/flink/tls-cert/truststore.jks
    security.ssl.truststore-password: <jks-password>
    security.ssl.keystore: /opt/flink/tls-cert/keystore.jks
    security.ssl.keystore-password: <jks-password>
    security.ssl.key-password: <jks-password>
    kubernetes.secrets: '<jks-secret>:/opt/flink/tls-cert'

Where <jks-secret> is the secret containing the keystores and truststores for your deployment, and <jks-password> is the password for those stores.

- Wait for your FlinkDeployment pods to restart.

-

Add the relevant Flink TLS configuration to the spec.flink.tls section in the EventProcessing custom resource. For example:

spec:
  flink:
    tls:
      secretKeyRef:
        key: <key-containing-password-value>
        name: <flink-jks-password-secret>
      secretName: <flink-jks-secret>

- Wait for the EventProcessing pod to restart.

- Restart your Event Processing flows.
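The JKS Secret and the password Secret referenced in the configuration above can be created with kubectl. This sketch assumes keystore.jks and truststore.jks already exist in the current directory, because the FlinkDeployment configuration expects files with those names under /opt/flink/tls-cert; generating the stores is not covered here. create_tls_secrets, JKS_PASSWORD and DRY_RUN are hypothetical names, and note that a dry run prints the password.

```shell
#!/usr/bin/env bash
# Sketch: create the JKS Secret and the password Secret used above.
# ASSUMPTION: keystore.jks and truststore.jks are in the current directory.
# Set DRY_RUN=1 to print the kubectl commands (including the password).

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Usage: JKS_PASSWORD=... create_tls_secrets <namespace> <jks-secret> <password-secret> <password-key>
create_tls_secrets() {
  local ns="$1" jks_secret="$2" pw_secret="$3" pw_key="$4"
  if [ -z "${JKS_PASSWORD:-}" ]; then
    echo "JKS_PASSWORD is not set" >&2
    return 1
  fi
  # Secret keys keystore.jks and truststore.jks become files under the mount
  # path /opt/flink/tls-cert set in kubernetes.secrets.
  run kubectl create secret generic "$jks_secret" -n "$ns" \
    --from-file=keystore.jks --from-file=truststore.jks || return
  # Password Secret referenced by spec.flink.tls.secretKeyRef in EventProcessing.
  run kubectl create secret generic "$pw_secret" -n "$ns" \
    --from-literal="$pw_key=$JKS_PASSWORD"
}
```

Run it once for each namespace that needs the Secrets, for example where the Flink instances and Event Processing are installed.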