Consider the following when planning your installation.

Performance considerations

When preparing for your Event Streams installation, review your workload requirements and consider the configuration options available for performance tuning both your IBM Cloud Private and Event Streams installations. For more information, see the performance planning topic.

Kafka high availability

Kafka is designed for high availability and fault tolerance.

To reduce the impact of Event Streams Kafka broker failures, spread your brokers across several IBM Cloud Private worker nodes by ensuring you have at least as many worker nodes as brokers. For example, for 3 Kafka brokers, ensure you have at least 3 worker nodes running on separate physical servers.

Kafka ensures that topic-partition replicas are spread across available brokers up to the replication factor specified. Usually, all of the replicas will be in-sync, meaning that they are all fully up-to-date, although some replicas can temporarily be out-of-sync, for example, when a broker has just been restarted.

The replication factor controls how many replicas there are, and the minimum in-sync replicas setting (the Kafka min.insync.replicas configuration) controls how many of the replicas must be in-sync for applications to produce and consume messages with no loss of function. For example, a typical configuration has a replication factor of 3 and minimum in-sync replicas set to 2. This configuration can tolerate 1 out-of-sync replica, or 1 worker node or broker outage, with no loss of function. It can also tolerate 2 out-of-sync replicas, or 2 worker node or broker outages, with loss of function but no loss of data.
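The arithmetic behind this example can be sketched as follows. This is a hypothetical helper for illustration only, not part of Event Streams or Kafka. It assumes producers use acks=all (which is when the minimum in-sync setting applies) and, for the no-data-loss figure, that the last surviving replica was in-sync.

```python
def replica_outage_tolerance(replication_factor: int, min_insync_replicas: int) -> dict:
    """Return how many replica outages a topic partition can absorb.

    Hypothetical helper for illustration; not an Event Streams or Kafka API.
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("min_insync_replicas must be between 1 and replication_factor")
    return {
        # Producers using acks=all keep working while at least
        # min_insync_replicas replicas remain in-sync.
        "without_loss_of_function": replication_factor - min_insync_replicas,
        # Data survives as long as one in-sync replica remains.
        "without_loss_of_data": replication_factor - 1,
    }


print(replica_outage_tolerance(3, 2))
# {'without_loss_of_function': 1, 'without_loss_of_data': 2}
```

Raising the replication factor to 4 while keeping minimum in-sync replicas at 2 would, by the same arithmetic, tolerate 2 outages without loss of function, at the cost of more storage and replication traffic.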

The combination of brokers spread across nodes together with the replication feature makes a single Event Streams cluster highly available.

Multizone support

To add further resilience to your clusters, you can also split your servers across multiple data centers or zones, so that even if one zone experiences a failure, you still have a working system.

Multizone support provides the option to run a single Kubernetes cluster in multiple availability zones within the same region. Multizone clusters are clusters of either physical or virtual servers that are spread over different locations to achieve greater resiliency. If one location is shut down for any reason, the rest of the cluster is unaffected.

Note: For Event Streams to work effectively within a multizone cluster, the network latency between zones must not be greater than 20 ms, so that Kafka can replicate data to the brokers in the other zones.
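If you have measured the latency between each pair of zones (with whatever network tool you normally use), a check against the 20 ms limit can be sketched as below. The zone names and measurements are hypothetical, and the sketch simply compares the numbers you supply; it does not decide whether they should be one-way or round-trip values.

```python
MAX_INTER_ZONE_LATENCY_MS = 20.0  # limit stated in the note above


def zones_exceeding_latency(latencies_ms: dict[tuple[str, str], float]) -> list[tuple[str, str]]:
    """Return the zone pairs whose measured latency is greater than the limit."""
    return sorted(pair for pair, ms in latencies_ms.items() if ms > MAX_INTER_ZONE_LATENCY_MS)


# Hypothetical measurements between three zones.
measured = {
    ("zone-a", "zone-b"): 4.2,
    ("zone-a", "zone-c"): 23.5,
    ("zone-b", "zone-c"): 19.9,
}
print(zones_exceeding_latency(measured))  # [('zone-a', 'zone-c')]
```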

Your Kubernetes container platforms can be zone aware or non-zone aware:

- Zone-aware platforms set up your clusters in multiple availability zones automatically by allocating your application resources evenly across the zones.

- Non-zone-aware platforms require you to manually prepare your clusters for multiple zones by specifying labels for nodes, and then providing the labels for the zones during the installation of Event Streams.
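To illustrate what allocating resources evenly across zones means for Kafka brokers, the following sketch assigns brokers to zones round-robin, so that the number of brokers per zone differs by at most one. The round-robin strategy and zone names are assumptions for illustration; the Kubernetes scheduler and the Event Streams installation use their own placement logic and node labels.

```python
from collections import Counter


def spread_brokers(broker_count: int, zones: list[str]) -> dict[int, str]:
    """Assign broker IDs to zones round-robin (hypothetical helper)."""
    if not zones:
        raise ValueError("at least one zone is required")
    return {broker_id: zones[broker_id % len(zones)] for broker_id in range(broker_count)}


placement = spread_brokers(6, ["zone-a", "zone-b", "zone-c"])
print(placement)
print(Counter(placement.values()))  # each zone hosts 2 brokers
```

With this spread, losing any single zone removes only a third of the brokers.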

For information about how to determine whether your clusters are zone aware or not, and to get ready for installing into multiple zones, see preparing for multizone clusters.

Persistent storage

Persistence is not enabled by default, so no persistent volumes are required. Enable persistence if you want your data, such as messages in topics, schemas, and configuration settings, to be retained in the event of a restart. Persistence should be enabled for production use.

If you plan to have persistent volumes, consider the disk space required for storage.

Also, as both Kafka and ZooKeeper rely on fast write access to disks, ensure you use separate dedicated disks for storing Kafka and ZooKeeper data. For more information, see the disks and filesystems guidance in the Kafka documentation, and the deployment guidance in the ZooKeeper documentation.

If persistence is enabled, each Kafka broker and each ZooKeeper server requires one physical volume. The number of Kafka brokers and ZooKeeper servers depends on your setup; for the default requirements, see the resource requirements table. Schema registry requires a single physical volume. You must either create a persistent volume for each physical volume, or specify a storage class that supports dynamic provisioning. Each component can use a different storage class to control how physical volumes are allocated.
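The volume count follows directly from the rule above: one per Kafka broker, one per ZooKeeper server, and one for schema registry. A minimal sketch, where the broker and server counts passed in are example values rather than the documented defaults (see the resource requirements table for those):

```python
def physical_volumes_required(kafka_brokers: int, zookeeper_servers: int) -> int:
    """Count the physical volumes needed when persistence is enabled."""
    # One volume for each Kafka broker and one for each ZooKeeper server.
    volumes = kafka_brokers + zookeeper_servers
    # Schema registry requires a single volume.
    volumes += 1
    return volumes


print(physical_volumes_required(3, 3))  # 7
```

If you create persistent volumes manually, this is the number the administrator needs to make available in the pool.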

Note: When creating persistent volumes backed by an NFS file system, ensure the path provided has the access permission set to 775.
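Setting 775 (rwxrwxr-x) on the export path is normally done with `chmod 775` on the NFS server. The same step can be sketched in Python as below; a temporary directory stands in for the real export path, which is an assumption for the sake of a runnable example.

```python
import os
import stat
import tempfile


def set_nfs_export_permissions(path: str) -> int:
    """Set 775 (rwxrwxr-x) on path and return the resulting permission bits."""
    os.chmod(path, 0o775)
    return stat.S_IMODE(os.stat(path).st_mode)


with tempfile.TemporaryDirectory() as export_dir:  # stand-in for the NFS export path
    print(oct(set_nfs_export_permissions(export_dir)))  # 0o775
```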

See the IBM Cloud Private documentation for information about creating persistent volumes and creating a storage class that supports dynamic provisioning. For both, you must have the IBM Cloud Private Cluster Administrator role.

Important: When creating persistent volumes for each component, ensure the correct Access mode is set for the volumes as described in the following table.

| Component | Access mode |
|---|---|
| Kafka | ReadWriteOnce |
| ZooKeeper | ReadWriteOnce |
| Schema registry | ReadWriteMany or ReadWriteOnce |

More information about persistent volumes and the system administration steps required before installing Event Streams can be found in the Kubernetes documentation.

If these persistent volumes are to be created manually, this must be done by the system administrator before installing Event Streams. The administrator will add these to a central pool before the Helm chart can be installed. The installation will then claim the required number of persistent volumes from this pool.

If these persistent volumes are to be created automatically, run a provisioner for the storage class you want to use. See the list of file systems for storage supported by Event Streams.

If you want to use persistent storage, enable it when configuring your Event Streams installation, and provide the storage class details as required.

Important: If membership of a specific group is required to access the file system used for persistent volumes, ensure you specify the GID of the group that owns the file system in the File system group ID field.

Using IBM Spectrum Scale

If you are using IBM Spectrum Scale for persistence, see the IBM Storage Enabler for Containers with IBM Spectrum Scale documentation for more information about creating storage classes.

The system administrator must enable support for the automatic creation of persistent volumes prior to installing Event Streams. To do this, enable dynamic provisioning when configuring your installation, and provide the storage class names to define the persistent volumes that get allocated to the deployment.

Note: When creating storage classes for an IBM Spectrum Scale file system, ensure you specify both the UID and GID in the storage class definition. Then, when installing Event Streams, ensure you set the File system group ID field to the GID specified in the storage class definition. Also, ensure that this user exists on all IBM Spectrum Scale and GUI nodes.

Securing communication between pods

You can enhance your security by encrypting the internal communication between Event Streams pods by using TLS. By default, TLS communication between pods is disabled.

You can enable encryption between pods when configuring your Event Streams installation.

You can also enable TLS encryption between pods for existing Event Streams installations.

Important: When TLS is enabled between pods, all message data is encrypted, but communication between the geo-replicator and administration server pods is not encrypted (see the tables in resource requirements).

ConfigMap for Kafka static configuration

You can choose to create a ConfigMap to specify Kafka configuration settings for your Event Streams installation. This is optional.

You can use a ConfigMap to override default Kafka configuration settings when installing Event Streams.

You can also use a ConfigMap to modify read-only Kafka broker settings for an existing Event Streams installation. Read-only parameters are defined by Kafka as settings that require a broker restart. Find out more about the Kafka configuration options and how to modify them for an existing installation.

To create a ConfigMap:

- Log in to your cluster as an administrator by using the IBM Cloud Private CLI:

cloudctl login -a https://<cluster-address>:<cluster-router-https-port>

Note: To create a ConfigMap, you must have the Team Operator, Team Administrator, or Cluster Administrator role in IBM Cloud Private.

- To create a ConfigMap from an existing Kafka server.properties file, use the following command (where <namespace_name> is the namespace where you install Event Streams):

kubectl -n <namespace_name> create configmap <configmap_name> --from-env-file=<full_path/server.properties>

- To create a blank ConfigMap for future configuration updates, use the following command:

kubectl -n <namespace_name> create configmap <configmap_name>
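With `--from-env-file`, each key=value line of server.properties becomes one entry in the ConfigMap. The sketch below approximates that mapping so you can preview the entries before creating the ConfigMap; it is not kubectl's implementation. It skips blank and comment lines, splits on the first `=`, ignores lines without `=`, and does not trim spaces around `=`, so writing each setting as key=value with no surrounding spaces avoids surprises. The property values in the sample are hypothetical.

```python
def parse_properties_as_env_file(text: str) -> dict[str, str]:
    """Approximate how key=value lines become ConfigMap entries."""
    entries = {}
    for raw_line in text.splitlines():
        line = raw_line.lstrip()
        # Blank lines and comment lines are skipped.
        if not line or line.startswith("#"):
            continue
        # This sketch ignores lines that have no '=' separator.
        if "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, value = line.split("=", 1)
        entries[key] = value
    return entries


sample = """
# Hypothetical overrides
log.retention.hours=168
num.partitions=3
"""
print(parse_properties_as_env_file(sample))
# {'log.retention.hours': '168', 'num.partitions': '3'}
```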

Geo-replication

You can deploy multiple instances of Event Streams and use the included geo-replication feature to synchronize data between your clusters. Geo-replication helps maintain service availability.

Find out more about geo-replication.

Prepare your destination cluster by setting the number of geo-replication worker nodes during installation.

Connecting clients

By default, Kafka client applications connect to the IBM Cloud Private master node directly without any configuration required. If you want clients to connect through a different route, specify the target endpoint host name or IP address when configuring your installation.

Logging

IBM Cloud Private uses the Elastic Stack (Elasticsearch, Logstash, and Kibana) for managing logs. Event Streams logs are written to stdout and are picked up by the default Elastic Stack setup.

Consider setting up the IBM Cloud Private logging for your environment to help resolve problems with your deployment and aid general troubleshooting. See the IBM Cloud Private documentation about logging for information about the built-in Elastic Stack.

As part of setting up the IBM Cloud Private logging for Event Streams, ensure you consider the following:

- Capacity planning guidance for logging: set up your system to have sufficient resources for capturing, storing, and managing logs.

- Log retention: The logs captured using the Elastic Stack persist during restarts. However, logs older than a day are deleted at midnight by default to prevent log data from filling up available storage space. Consider changing the log data retention in line with your capacity planning. Longer retention of logs provides access to older data that might help troubleshoot problems.
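When changing log retention, a rough storage estimate helps keep retention in line with your capacity planning. The sketch below multiplies an assumed daily log volume by the retention period and adds spare headroom. The daily volume, the 25% headroom, and the decision to ignore Elasticsearch replica shards and index overhead are all assumptions; measure your own log volume before relying on the numbers.

```python
def log_storage_gb(daily_log_gb: float, retention_days: int, headroom: float = 0.25) -> float:
    """Estimate storage for retained logs, including spare headroom."""
    if retention_days < 1:
        raise ValueError("retention_days must be at least 1")
    return daily_log_gb * retention_days * (1 + headroom)


print(log_storage_gb(10, 1))  # 12.5
print(log_storage_gb(10, 7))  # 87.5
```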

You can use log data to investigate any problems affecting your system health.

Monitoring Kafka clusters

Event Streams uses the IBM Cloud Private monitoring service to provide you with information about the health of your Event Streams Kafka clusters. You can view data for the last 1 hour, 1 day, 1 week, or 1 month in the metrics charts.

Important: By default, the metrics data used to provide monitoring information is only stored for a day. Modify the time period for metric retention to be able to view monitoring data for longer time periods, such as 1 week or 1 month.

For more information about keeping an eye on the health of your Kafka cluster, see the monitoring Kafka topic.

Licensing

You require a license to use Event Streams. Licensing is based on a Virtual Processing Cores (VPC) metric.

An Event Streams deployment consists of a number of different types of containers, as described in the components of the Helm chart. To use Event Streams you must have a license for all of the virtual cores that are available to all Kafka and geo-replicator containers deployed. All other container types are prerequisite components that are supported as part of Event Streams, and do not require additional licenses.

The number of virtual cores available to each Kafka and geo-replicator container can be specified during installation or modified later.
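When planning a deployment, you can estimate the chargeable total by adding up the cores available to the Kafka and geo-replicator containers only. The sketch below does that. The container kind labels and core values are hypothetical, and treating "cores available" as a number you supply per container (for example, its CPU limit) is an assumption; the IBM Cloud Private metering report described next remains the authoritative figure.

```python
from dataclasses import dataclass


@dataclass
class Container:
    kind: str     # hypothetical labels, e.g. "kafka", "geo-replicator", "zookeeper"
    cores: float  # virtual cores available to this container


# Only these container types require licenses.
CHARGEABLE_KINDS = {"kafka", "geo-replicator"}


def chargeable_cores(containers: list[Container]) -> float:
    """Sum the cores of Kafka and geo-replicator containers only."""
    return sum(c.cores for c in containers if c.kind in CHARGEABLE_KINDS)


deployment = [
    Container("kafka", 2.0),
    Container("kafka", 2.0),
    Container("kafka", 2.0),
    Container("geo-replicator", 0.5),
    Container("zookeeper", 1.0),  # prerequisite component, not chargeable
]
print(chargeable_cores(deployment))  # 6.5
```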

To check the number of cores, use the IBM Cloud Private metering report as follows:

- Log in to your IBM Cloud Private cluster management console as an administrator. For more information, see the IBM Cloud Private documentation.

- From the navigation menu, click Platform > Metering.

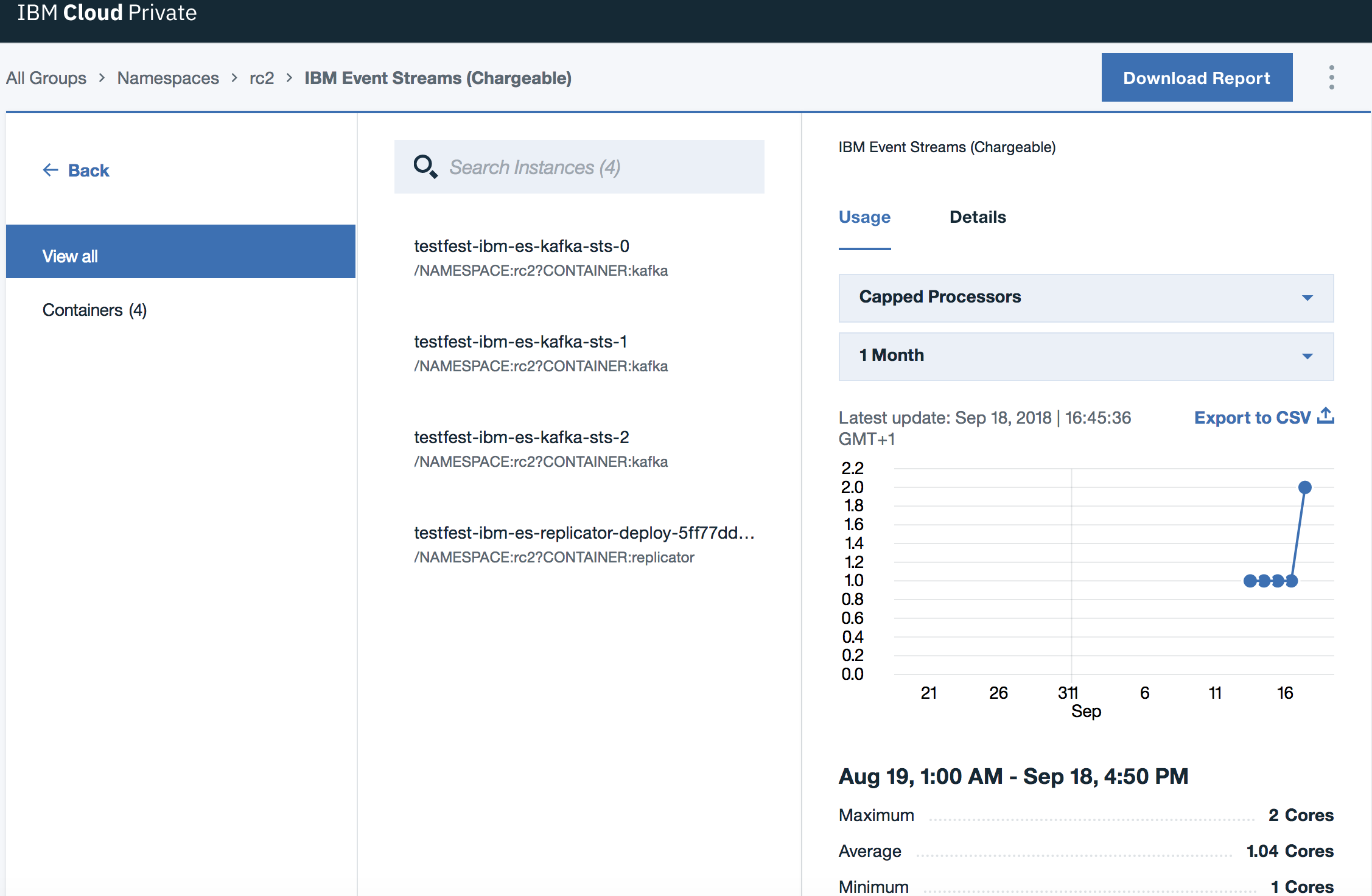

- Select your namespace, and select Event Streams (Chargeable).

- Click Containers.

- Go to the Containers section on the right, and ensure you select the Usage tab.

- Select Capped Processors from the first drop-down list, and select 1 Month from the second drop-down list.

A page similar to the following is displayed:

- Click Download Report, and save the CSV file to a location of your choice.

- Open the downloaded report file.

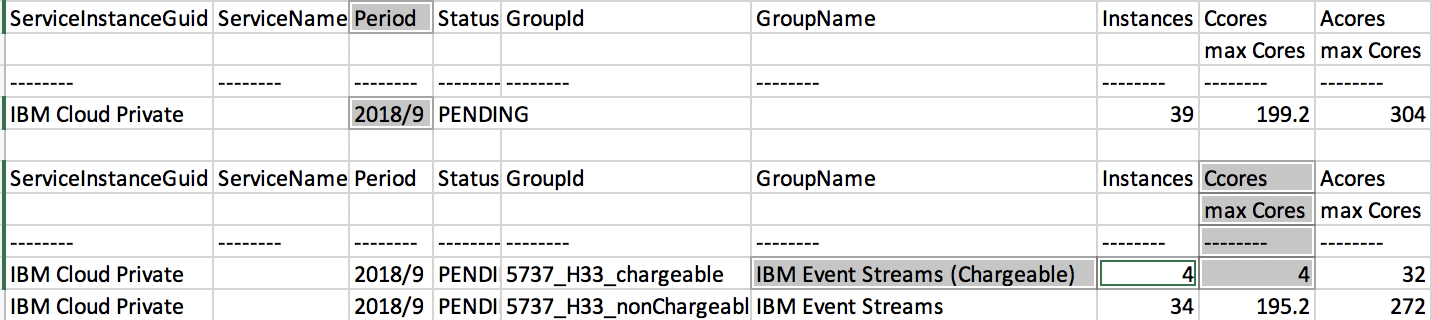

- Look for the month in Period, for example, 2018/9, then in the rows underneath look for Event Streams (Chargeable), and check the CCores/max Cores column. The value is the maximum aggregate number of cores provided to all Kafka and geo-replicator containers. You are charged based on this number.

For example, the following excerpt from a downloaded report shows that for the period 2018/9 the chargeable Event Streams containers had a total of 4 cores available (see the highlighted fields):