As part of planning for a resilient deployment, you can deploy Event Streams on a multizone OpenShift Container Platform cluster to allow for greater resilience against external disruption. For more information and instructions, see how to prepare for a multizone deployment, or see the tutorial. Before following these instructions and deploying Event Streams on a multizone cluster, consider the following information.

Reasons to consider multiple availability zones

Kafka itself offers strong resilience against many failures that could occur within a compute environment. However, Kafka cannot protect against a failure that affects the entire cluster it runs on. To prepare for both planned and unplanned outages, Kafka requires an external redundancy solution or backup to avoid data loss. One solution is a Kafka cluster with brokers distributed across multiple availability zones.

An availability zone is an isolated set of computing infrastructure, covering every aspect of that infrastructure from compute resources to cooling. Data centers are often made up of multiple availability zones. This allows planned maintenance outages to be carried out one availability zone at a time, and helps contain the damage from unplanned outages caused by problems within any single availability zone.

Note: A multizone Kafka cluster offers resilience against failures within a single compute environment, but its brokers cannot be deployed far apart from each other because of the latency requirements described later in this section.

Multizone Kafka cluster

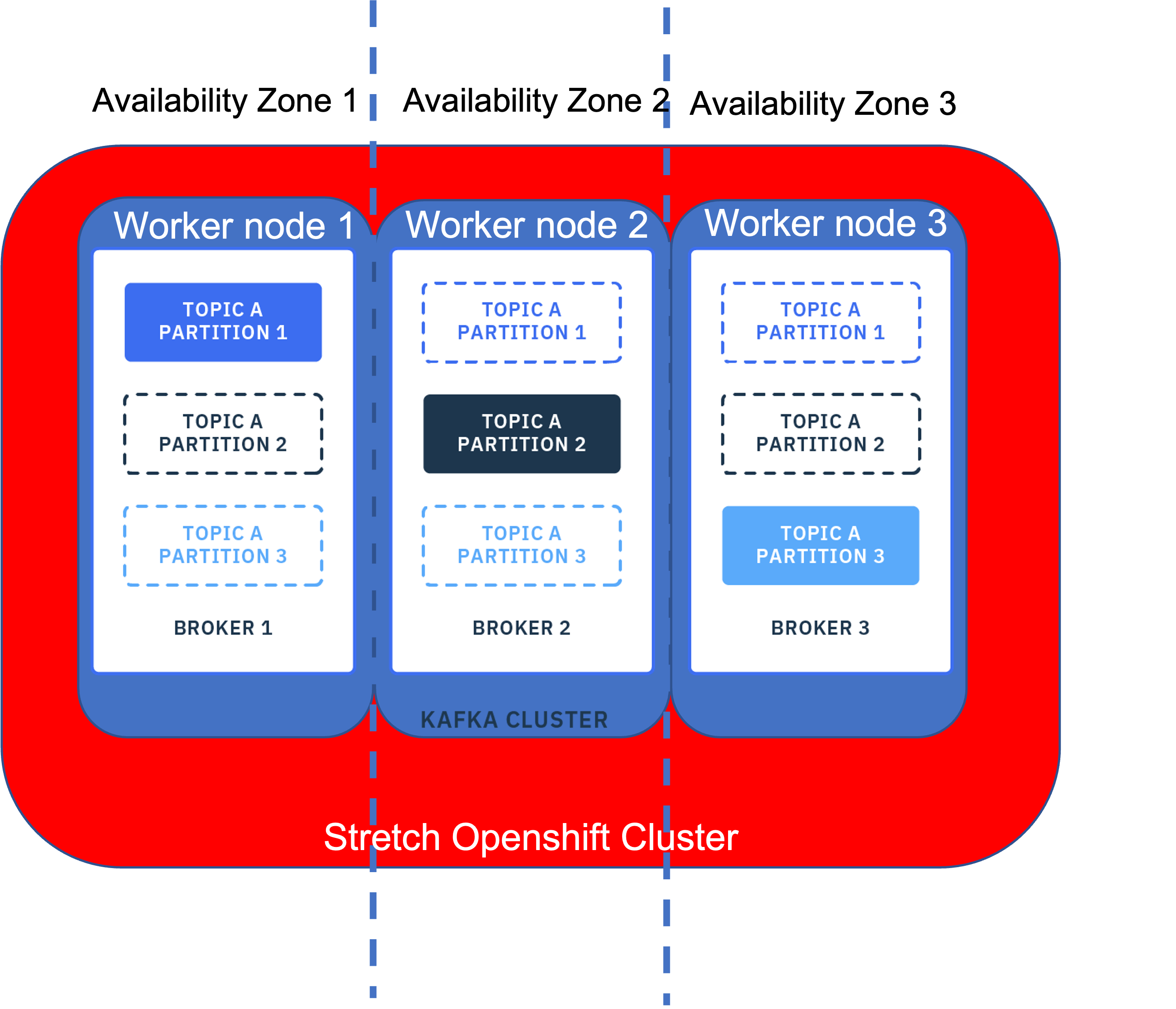

In a multizone Kafka cluster, the brokers are deployed in one virtual compute environment that spans multiple availability zones. This requires the OpenShift Container Platform environment to have nodes that are distributed across multiple availability zones. The Kafka brokers can then be scheduled onto specific nodes by using Kubernetes topology labels. The diagram above shows the resulting architecture.
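Before placing brokers, it can help to confirm that the cluster's nodes really do span several availability zones. The following Python sketch groups node objects, in the shape returned by oc get nodes -o json, by their zone label. It assumes the nodes carry the well-known Kubernetes label topology.kubernetes.io/zone; the helper names nodes_by_zone and spans_zones, and the default minimum of three zones, are illustrative assumptions rather than part of Event Streams.

```python
from collections import defaultdict

# Well-known Kubernetes node label for availability zone (assumed to be set on your nodes).
ZONE_LABEL = "topology.kubernetes.io/zone"
UNLABELLED = "<unlabelled>"


def nodes_by_zone(nodes: list[dict]) -> dict[str, list[str]]:
    """Group node names by the value of their zone topology label.

    `nodes` mimics the `items` list of `oc get nodes -o json`: each entry has
    metadata.name and (optionally) metadata.labels. Nodes without the zone
    label are collected under the UNLABELLED key.
    """
    zones: dict[str, list[str]] = defaultdict(list)
    for node in nodes:
        meta = node["metadata"]
        zone = meta.get("labels", {}).get(ZONE_LABEL, UNLABELLED)
        zones[zone].append(meta["name"])
    return dict(zones)


def spans_zones(nodes: list[dict], min_zones: int = 3) -> bool:
    """Return True if the labelled nodes cover at least `min_zones` distinct zones."""
    zones = nodes_by_zone(nodes)
    zones.pop(UNLABELLED, None)  # unlabelled nodes do not count towards any zone
    return len(zones) >= min_zones


if __name__ == "__main__":
    # Hypothetical cluster: three worker nodes, one in each zone.
    example = [
        {"metadata": {"name": f"worker-{i}", "labels": {ZONE_LABEL: f"zone-{i}"}}}
        for i in range(3)
    ]
    print(nodes_by_zone(example))
    print(spans_zones(example))
```

To try it against a live cluster, you could save the output of oc get nodes -o json to a file, load it with json.load, and pass its items list to nodes_by_zone.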

Trade-offs in multizone deployments

Latency

For Kafka to function reliably in a stretch OpenShift Container Platform cluster configuration, network latency between the nodes of your Kubernetes cluster must not be greater than 20 ms. Low single-digit millisecond latency is preferable. This is because Kafka brokers and Kafka controllers are deployed on the same cluster nodes and are considered to share the same hardware.
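The latency guidance can be expressed as a small check over measured values. This Python sketch assumes you already have per-pair latency figures in milliseconds, gathered with whatever tool you normally use. It treats anything above 20 ms as too high and, as an assumption, reads "low single-digit" as anything below 10 ms. The function names classify_latency and pairs_over_limit are hypothetical.

```python
# Upper bound stated in the guidance: latency must not be greater than 20 ms.
MAX_LATENCY_MS = 20.0
# Assumption: "low single-digit" latency is interpreted as anything below 10 ms.
PREFERRED_LATENCY_MS = 10.0


def classify_latency(latency_ms: float) -> str:
    """Classify one node-to-node latency measurement given in milliseconds."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms > MAX_LATENCY_MS:
        return "too high"
    if latency_ms >= PREFERRED_LATENCY_MS:
        return "acceptable"
    return "preferred"


def pairs_over_limit(measurements: dict[tuple[str, str], float]) -> list[tuple[str, str]]:
    """Return the node pairs whose measured latency exceeds the 20 ms limit, sorted."""
    return sorted(
        pair for pair, ms in measurements.items() if classify_latency(ms) == "too high"
    )


if __name__ == "__main__":
    # Hypothetical measurements between three nodes in different zones.
    sample = {
        ("node-a", "node-b"): 2.5,
        ("node-a", "node-c"): 12.0,
        ("node-b", "node-c"): 24.0,
    }
    for pair, ms in sample.items():
        print(pair, ms, classify_latency(ms))
    print(pairs_over_limit(sample))
```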

To work around latency issues, you can adjust the replica.lag.time.max.ms option in the Event Streams custom resource, which sets the maximum time in milliseconds for follower replicas to catch up to the leader.

Increasing this value helps Kafka avoid timeouts, but it comes at a performance cost: a larger replica.lag.time.max.ms can result in longer periods during which your replicas might be out of sync.
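As a sketch of how the setting might be applied, the following Python builds a JSON merge patch for the Event Streams custom resource. It assumes that Kafka broker properties are placed under spec.strimziOverrides.kafka.config; check the custom resource reference for your Event Streams version before relying on that path. The function name lag_time_patch is hypothetical, and the value of 60000 ms is purely illustrative, not a recommendation.

```python
import json


def lag_time_patch(lag_ms: int) -> dict:
    """Build a JSON merge patch that sets replica.lag.time.max.ms.

    Assumption: broker configuration lives under
    spec.strimziOverrides.kafka.config in the Event Streams custom resource.
    """
    if lag_ms <= 0:
        raise ValueError("replica.lag.time.max.ms must be a positive number of milliseconds")
    return {
        "spec": {
            "strimziOverrides": {
                "kafka": {"config": {"replica.lag.time.max.ms": lag_ms}}
            }
        }
    }


if __name__ == "__main__":
    # Illustrative value only; choose one based on your measured latency.
    print(json.dumps(lag_time_patch(60000)))
```

You could then pass the printed JSON to oc patch with --type merge, for example oc patch eventstreams <instance-name> -n <namespace> --type merge -p '<json>', where the instance name and namespace are placeholders and the eventstreams resource name is assumed. A merge patch only changes the keys it names, so other entries under config are left in place.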

Replication

In a multizone Kafka cluster, topics, partitions, and replicas must be managed carefully to maintain data redundancy. You can do this by choosing a suitable value for min.insync.replicas so that, in the event of an outage in one availability zone:

- No data is lost.

- The Kafka cluster can still operate, meaning that the number of remaining replicas is greater than or equal to the value of min.insync.replicas.
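The two conditions can be checked mechanically for a single partition. The following Python sketch is a minimal illustration under stated assumptions: producers use acks=all, so an acknowledged write is held by at least min.insync.replicas in-sync replicas, and you know how many replicas of the partition sit in each availability zone. It takes the worst case, in which the zone holding the most replicas is the one that fails. The function name survives_zone_outage is hypothetical.

```python
def survives_zone_outage(replicas_per_zone: dict[str, int], min_insync: int) -> tuple[bool, bool]:
    """Check both conditions for the loss of any single availability zone.

    replicas_per_zone: number of replicas of one partition in each zone.
    min_insync: the min.insync.replicas value.
    Returns (no_data_loss, can_still_operate).
    """
    if not replicas_per_zone:
        raise ValueError("at least one zone is required")
    if min_insync < 1:
        raise ValueError("min.insync.replicas must be at least 1")
    total = sum(replicas_per_zone.values())
    largest_zone = max(replicas_per_zone.values())
    # Assuming acks=all, each acknowledged write is on at least min_insync replicas.
    # If that exceeds the replica count of every single zone, those copies span at
    # least two zones, so one zone outage cannot remove all of them.
    no_data_loss = min_insync > largest_zone
    # Worst case: the zone holding the most replicas fails.
    can_still_operate = total - largest_zone >= min_insync
    return no_data_loss, can_still_operate


if __name__ == "__main__":
    # Hypothetical placement: one replica of the partition in each of three zones.
    placement = {"zone-1": 1, "zone-2": 1, "zone-3": 1}
    print(survives_zone_outage(placement, min_insync=2))  # → (True, True)
```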

For example, the following table shows the value to set for min.insync.replicas across 3 availability zones:

| Number of Kubernetes nodes | Number of Kafka brokers | Value for min.insync.replicas |
|---|---|---|
| 3 | 3 | 2 |
| 3 | 4 | 3 |
| 3 | 5 | 3 |
| 3 | 6 | 4 |